Linux Study Notes: Hadoop Installation and Deployment


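5.10 Installing and Deploying the Hadoop Ecosystem on a Big Data Cluster

5.10.1 Introduction

1. Hadoop is a distributed-systems infrastructure developed by the Apache Software Foundation.

2. It mainly addresses the storage and the analytical computation of massive data sets.

- Hadoop HDFS provides distributed storage for massive data

- Hadoop YARN provides distributed cluster resource management

- Hadoop MapReduce provides distributed computation over massive data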

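5.10.1.1 Prerequisites

- Make sure you have completed the chapter on cluster environment prerequisites

- That is: the JDK, passwordless SSH, a disabled firewall, host name mapping, and the other preparatory steps

If you have not, work through the cluster environment prerequisites chapter first.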
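The host name mapping prerequisite can be spot-checked before going further. The following is a minimal sketch, assuming the mappings live in an /etc/hosts-style file; `missing_hosts` is a hypothetical helper name, not something Hadoop provides.

```shell
# Hypothetical helper: print every host name that has no entry
# in the given hosts file (line format: "IP name [alias...]").
missing_hosts() {
  local hosts_file="$1"; shift
  local name
  for name in "$@"; do
    # Skip comment lines, then look for the name in any column after the IP.
    if ! awk -v n="$name" '
          /^[[:space:]]*#/ { next }
          { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
          END { exit (found ? 0 : 1) }' "$hosts_file"; then
      echo "$name"
    fi
  done
}
```

Running `missing_hosts /etc/hosts node1 node2 node3` on each machine should print nothing when all three names are mapped.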

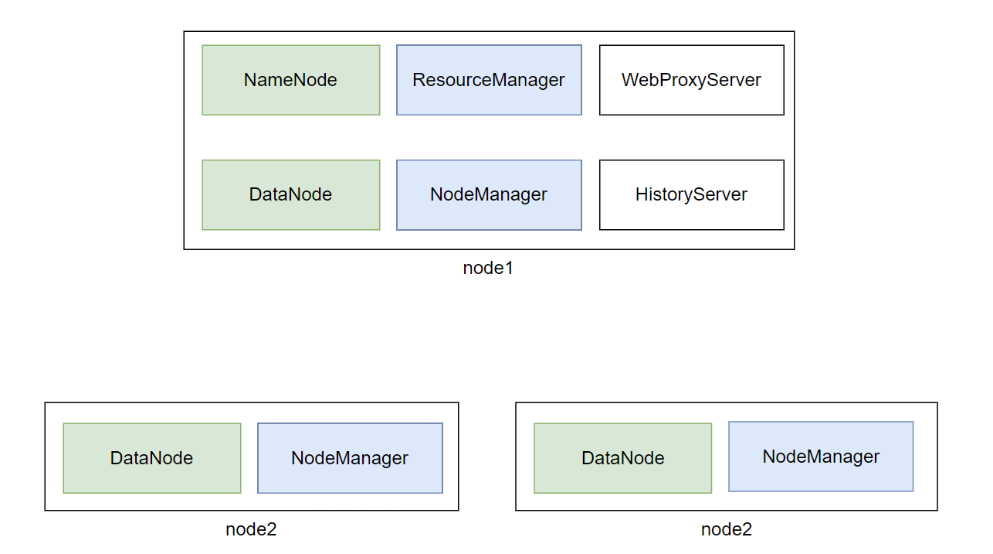

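5.10.1.2 Hadoop Cluster Roles

The following process roles appear in the Hadoop ecosystem:

1. NameNode, the management role of Hadoop HDFS: only one process is needed (one manager is enough)

2. DataNode, the worker role of Hadoop HDFS: several processes are needed (the more workers the better), one per machine

3. ResourceManager, the management role of Hadoop YARN: only one process is needed (one manager is enough)

4. NodeManager, the worker role of Hadoop YARN: several processes are needed (the more workers the better), one per machine

5. HistoryServer, the Hadoop history server role: only one process is needed (a utility process, so one is plenty)

6. WebProxyServer, the Hadoop web proxy server role: only one process is needed (a utility process, so one is plenty)

7. QuorumPeerMain, the ZooKeeper process: several processes are needed (the more ZooKeeper workers the better), one per machine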

5.10.1.3 Assigning Roles to Nodes

The roles are assigned as follows:

1. node1: NameNode, DataNode, ResourceManager, NodeManager, HistoryServer, WebProxyServer, QuorumPeerMain

2. node2: DataNode, NodeManager, QuorumPeerMain

3. node3: DataNode, NodeManager, QuorumPeerMain

5.10.2 Installation

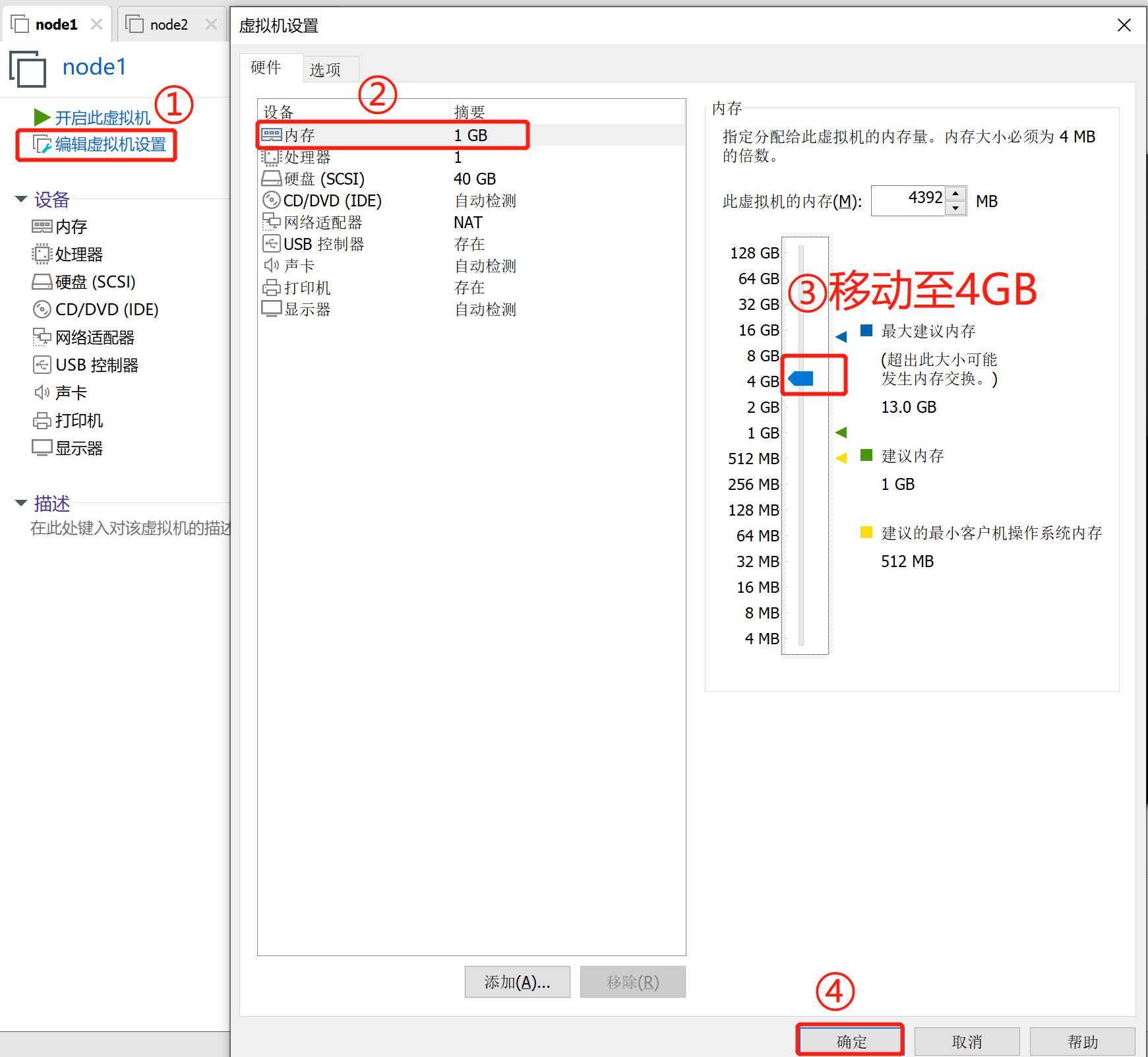

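5.10.2.1 Adjusting Virtual Machine Memory

As the figure above shows, node1 carries most of the load, and node2 and node3 also run quite a few processes.

To keep the cluster stable, adjust the memory allocated to each virtual machine.

In VMware, set:

- node1 to 4 GB of memory or more

- node2 and node3 to 2 GB of memory or more

Big data software is designed to run as a cluster, across many servers at once.

Simulating that cluster with several virtual machines on a single computer does put real pressure on memory.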
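Whether a virtual machine actually received the intended memory can be checked from inside the guest. This is a minimal sketch, assuming a Linux guest that exposes /proc/meminfo; the helper names `mem_total_mib` and `has_min_memory` are hypothetical.

```shell
# Hypothetical helper: read MemTotal (reported in kB) from a
# /proc/meminfo-style file and print it in whole MiB.
mem_total_mib() {
  awk '/^MemTotal:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# Succeeds if the machine reports at least the required MiB of memory.
has_min_memory() {
  local required_mib="$1" meminfo="${2:-/proc/meminfo}"
  [ "$(mem_total_mib "$meminfo")" -ge "$required_mib" ]
}
```

MemTotal is usually a little below the size configured in VMware, because the kernel reserves some memory, so a threshold with some slack (for example `has_min_memory 3500` on node1) is more practical than an exact 4096.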

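5.10.2.2 ZooKeeper Cluster Deployment

5.10.2.3 Hadoop Cluster Deployment

1. [On node1] Download the Hadoop package, extract it, and create a symbolic link

# 1. Download

wget http://archive.apache.org/dist/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz

# 2. Extract

# Make sure the directory /export/server exists

tar -zxvf hadoop-3.3.0.tar.gz -C /export/server/

# 3. Create the symbolic link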

ln -s /export/server/hadoop-3.3.0 /export/server/hadoop
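If the link step is rerun while /export/server/hadoop already exists, a plain `ln -s` does not update the link. The sketch below shows one way to make the step repeatable with `ln -sfn`; `link_install` is a hypothetical helper name, and the behavior of `-n` is assumed to be that of GNU or BSD ln.

```shell
# Hypothetical helper: point a stable link at a versioned install
# directory, replacing any earlier link instead of failing.
link_install() {
  local versioned_dir="$1" link_path="$2"
  # -s symbolic, -f replace an existing link, -n treat an existing
  # link to a directory as the link itself rather than descending into it.
  ln -sfn "$versioned_dir" "$link_path"
}
```

For this guide the call would be `link_install /export/server/hadoop-3.3.0 /export/server/hadoop`.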

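2. [On node1] Edit the configuration file hadoop-env.sh

Hadoop has many configuration files to edit, so work carefully.

Change into /export/server/hadoop/etc/hadoop; all the configuration files are here.

cd /export/server/hadoop/etc/hadoop

Edit hadoop-env.sh:

vim hadoop-env.sh

This file sets the environment variables that Hadoop uses.

These variables are temporary: they apply only while Hadoop is running.

To make them take effect permanently, write them to /etc/profile.

# Add the following at the top of the file

# Java installation path

export JAVA_HOME=/export/server/jdk

# Hadoop installation path

export HADOOP_HOME=/export/server/hadoop

# Hadoop HDFS configuration directory

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

# Hadoop YARN configuration directory

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

# Hadoop YARN log directory

export YARN_LOG_DIR=$HADOOP_HOME/logs/yarn

# Hadoop HDFS log directory

export HADOOP_LOG_DIR=$HADOOP_HOME/logs/hdfs

# Users that start the Hadoop daemons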

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export YARN_PROXYSERVER_USER=root
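Because a missing export in hadoop-env.sh tends to surface only later as a startup error, it can help to check the file right after editing. This is a minimal sketch; `missing_exports` is a hypothetical helper, and it only recognizes lines of the form `export NAME=...`.

```shell
# Hypothetical helper: print each variable name that the given
# env file does not export (commented-out lines do not count).
missing_exports() {
  local env_file="$1"; shift
  local var
  for var in "$@"; do
    grep -Eq "^[[:space:]]*export[[:space:]]+${var}=" "$env_file" || echo "$var"
  done
}
```

For example, `missing_exports hadoop-env.sh JAVA_HOME HADOOP_HOME HDFS_NAMENODE_USER YARN_RESOURCEMANAGER_USER` prints nothing when all four are set.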

3. [On node1] Edit the configuration file core-site.xml

vim core-site.xml

Clear the file and fill in the following content:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://node1:8020</value>

<description></description>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

<description></description>

</property>

</configuration>

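To make sure all of the content above is pasted into the file, paste only while the file is in a writable state (for example, insert mode in vim).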
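After pasting, it is worth reading a value back to confirm that the file contains what was intended. The sketch below is a plain-text lookup, assuming each `<name>` and `<value>` element sits on one line as in the files in this guide; `get_prop` is a hypothetical helper and not a real XML parser.

```shell
# Hypothetical helper: print the <value> that follows the given
# <name> in a Hadoop *-site.xml file.
get_prop() {
  awk -v key="$2" '
    # Remember when the requested property name has been seen.
    index($0, "<name>" key "</name>") { found = 1 }
    # The first <value> element after it is the one we want.
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-character opening tag and 8-character closing tag.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}
```

With the file above, `get_prop core-site.xml fs.defaultFS` should print hdfs://node1:8020. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.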

4. [On node1] Configure hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.datanode.data.dir.perm</name>

<value>700</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/nn</value>

<description>Path on the local filesystem where the NameNode stores the namespace and transactions logs persistently.</description>

</property>

<property>

<name>dfs.namenode.hosts</name>

<value>node1,node2,node3</value>

<description>List of permitted DataNodes.</description>

</property>

<property>

<name>dfs.blocksize</name>

<value>268435456</value>

<description></description>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>100</value>

<description></description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/dn</value>

</property>

</configuration>
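The dfs.blocksize value 268435456 is a byte count equal to 256 MiB (256 × 1024 × 1024). As a worked example of what that means for storage, the sketch below computes how many blocks a file of a given size occupies; `blocks_needed` is a hypothetical helper using ceiling division.

```shell
# Number of HDFS blocks a file of the given size occupies:
# ceil(file_bytes / block_bytes), computed with integer arithmetic.
# The default block size is the dfs.blocksize configured above.
blocks_needed() {
  local file_bytes="$1" block_bytes="${2:-268435456}"
  echo $(( (file_bytes + block_bytes - 1) / block_bytes ))
}
```

A 1 GiB file (1073741824 bytes) therefore needs 4 blocks, while a file one byte larger than a single block needs 2.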

5. [On node1] Configure mapred-env.sh

# Add the following environment variable settings at the top of the file

export JAVA_HOME=/export/server/jdk

export HADOOP_JOB_HISTORYSERVER_HEAPSIZE=1000

export HADOOP_MAPRED_ROOT_LOGGER=INFO,RFA

6. [On node1] Configure mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<description></description>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>node1:10020</value>

<description></description>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>node1:19888</value>

<description></description>

</property>

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>/data/mr-history/tmp</value>

<description></description>

</property>

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>/data/mr-history/done</value>

<description></description>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

</configuration>

7. [On node1] Configure yarn-env.sh

# Add the following environment variable settings at the top of the file

export JAVA_HOME=/export/server/jdk

export HADOOP_HOME=/export/server/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_LOG_DIR=$HADOOP_HOME/logs/yarn

export HADOOP_LOG_DIR=$HADOOP_HOME/logs/hdfs

8. [On node1] Configure yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.log.server.url</name>

<value>http://node1:19888/jobhistory/logs</value>

<description></description>

</property>

<property>

<name>yarn.web-proxy.address</name>

<value>node1:8089</value>

<description>proxy server hostname and port</description>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

<description>Configuration to enable or disable log aggregation</description>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/tmp/logs</value>

<description>HDFS directory where aggregated application logs are stored.</description>

</property>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>node1</value>

<description></description>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

<description></description>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/data/nm-local</value>

<description>Comma-separated list of paths on the local filesystem where intermediate data is written.</description>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/data/nm-log</value>

<description>Comma-separated list of paths on the local filesystem where logs are written.</description>

</property>

<property>

<name>yarn.nodemanager.log.retain-seconds</name>

<value>10800</value>

<description>Default time (in seconds) to retain log files on the NodeManager. Only applicable if log-aggregation is disabled.</description>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<description>Shuffle service that needs to be set for Map Reduce applications.</description>

</property>

</configuration>

9. [On node1] Edit the workers file

# The full content is as follows

node1

node2

node3
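The workers file is a plain list of host names, one per line, which makes it convenient to reuse in your own scripts. The sketch below reads such a file while dropping blank lines and `#` comments; `list_workers` is a hypothetical helper, and the comment handling is this sketch's own convention.

```shell
# Hypothetical helper: print the host names in a workers file,
# dropping '#' comments, trailing whitespace, and blank lines.
list_workers() {
  sed -e 's/#.*$//' -e 's/[[:space:]]*$//' -e '/^$/d' "$1"
}
```

A loop such as `for host in $(list_workers workers); do ssh "$host" hostname; done` then confirms that every listed worker is reachable over passwordless SSH.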

10. [On node1] Distribute Hadoop to the other machines

# Run on node1

cd /export/server

# Copy hadoop-3.3.0 to node2 and node3

scp -r hadoop-3.3.0 node2:`pwd`/

scp -r hadoop-3.3.0 node3:`pwd`/
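With more than a couple of nodes, typing one scp line per host becomes error-prone. The sketch below only prints the commands so they can be reviewed first; `plan_distribution` is a hypothetical helper that assumes the target path on every host matches the source path.

```shell
# Hypothetical helper: print (not run) one scp command per target host,
# copying src_dir into the same parent directory on each host.
plan_distribution() {
  local src_dir="$1"; shift
  local parent host
  parent=$(dirname "$src_dir")
  for host in "$@"; do
    echo "scp -r $src_dir $host:$parent/"
  done
}
```

`plan_distribution /export/server/hadoop-3.3.0 node2 node3` prints the same two scp commands used in this step with absolute paths; piping the output to `sh` would execute them.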

11. [On node2 and node3]

# Create the symbolic link

ln -s /export/server/hadoop-3.3.0 /export/server/hadoop

12. Create the required directories

- On node1:

mkdir -p /data/nn

mkdir -p /data/dn

mkdir -p /data/nm-log

mkdir -p /data/nm-local

- On node2:

mkdir -p /data/dn

mkdir -p /data/nm-log

mkdir -p /data/nm-local

- On node3:

mkdir -p /data/dn

mkdir -p /data/nm-log

mkdir -p /data/nm-local
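The directory lists differ only in that the NameNode host also needs /data/nn. That rule can be captured in one small function, shown here as a sketch; `make_data_dirs` and its `master`/`worker` role labels are hypothetical, and the `root` argument exists so the function can be tried against a scratch directory before running it against `/`.

```shell
# Hypothetical helper: create the data directories for one node.
# root is "/" on a real machine; a scratch directory works for a dry run.
make_data_dirs() {
  local root="${1%/}" role="$2"
  # Every node in this guide runs a DataNode and a NodeManager.
  mkdir -p "$root/data/dn" "$root/data/nm-log" "$root/data/nm-local"
  # Only the NameNode host needs the metadata directory.
  if [ "$role" = "master" ]; then
    mkdir -p "$root/data/nn"
  fi
}
```

On node1 this would be `make_data_dirs / master`, and on node2 and node3 `make_data_dirs / worker`.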

13. Configure environment variables

On node1, node2, and node3, edit /etc/profile

# Edit /etc/profile

vim /etc/profile

# Append the following at the end of the file

export HADOOP_HOME=/export/server/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Run source /etc/profile to apply the changes:

source /etc/profile
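If this step is scripted rather than done in vim, appending blindly adds duplicate lines on every rerun. The sketch below appends a line only when it is not already present; `append_once` is a hypothetical helper.

```shell
# Hypothetical helper: append a line to a file only if that exact
# line is not already present, so reruns do not duplicate it.
append_once() {
  local file="$1" line="$2"
  # -x whole-line match, -F literal string (no regex), -q quiet.
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

The two lines of this step would be added with `append_once /etc/profile 'export HADOOP_HOME=/export/server/hadoop'` and `append_once /etc/profile 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'`; the single quotes keep the variables unexpanded until the profile is sourced.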

14. [On node1] Format the NameNode

hadoop namenode -format

The hadoop command is a program in $HADOOP_HOME/bin.

Because that directory was added to the PATH environment variable, hadoop can be run from any location.
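Formatting is meant to be done once. Formatting a NameNode that already holds metadata generates a new cluster ID, after which DataNodes that still carry the old ID fail to register. The sketch below guards against that by looking for the current/VERSION file that a formatted NameNode directory contains; `nn_is_formatted` and `format_hint` are hypothetical helpers, and the default path assumes the /data/nn directory configured earlier.

```shell
# Hypothetical guard: succeed if the NameNode directory already holds
# formatted metadata (a current/VERSION file).
nn_is_formatted() {
  [ -f "${1:-/data/nn}/current/VERSION" ]
}

# Print what to do next instead of formatting unconditionally.
format_hint() {
  if nn_is_formatted "$1"; then
    echo "skip: $1 is already formatted"
  else
    echo "run: hadoop namenode -format"
  fi
}
```

Calling `format_hint /data/nn` before the format step shows whether it is still needed.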

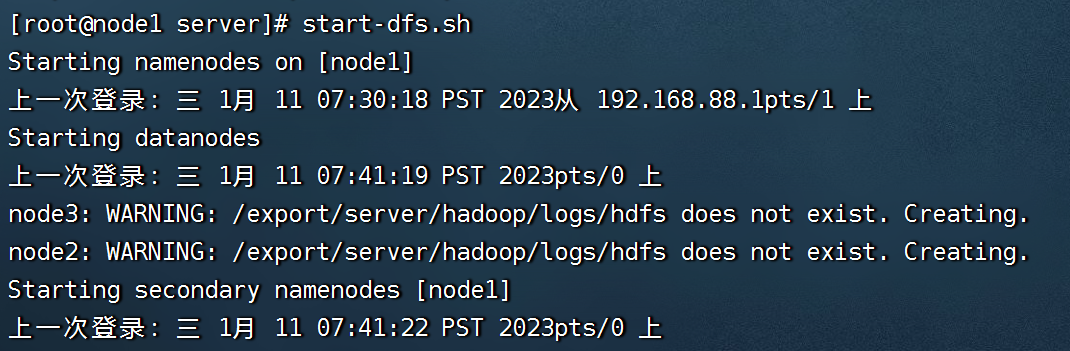

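15. [On node1] Start the Hadoop HDFS cluster (running it on node1 alone is enough)

start-dfs.sh

# To stop it, run

stop-dfs.sh

The start-dfs.sh command is a program in $HADOOP_HOME/sbin.

Because that directory was added to the PATH environment variable, start-dfs.sh can be run from any location.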

16. [On node1] Start the Hadoop YARN cluster (running it on node1 alone is enough)

start-yarn.sh

# To stop it, run

stop-yarn.sh

17. Start the history server

mapred --daemon start historyserver

# To stop it, replace start with stop

18. Start the web proxy server

yarn-daemon.sh start proxyserver

# To stop it, replace start with stop

yarn-daemon.sh stop proxyserver
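The start scripts return before the daemons are necessarily ready to serve requests, so a follow-up command issued immediately can fail. A small retry loop is one way to wait; the sketch below is generic shell, and `retry` is a hypothetical helper rather than anything shipped with Hadoop.

```shell
# Hypothetical helper: run a command up to max_tries times, sleeping
# delay seconds between attempts; succeed as soon as the command does.
retry() {
  local max_tries="$1" delay="$2"; shift 2
  local attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max_tries" ]; then
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}
```

For instance, `retry 30 2 hadoop fs -ls /` keeps trying for up to about a minute until the NameNode answers.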

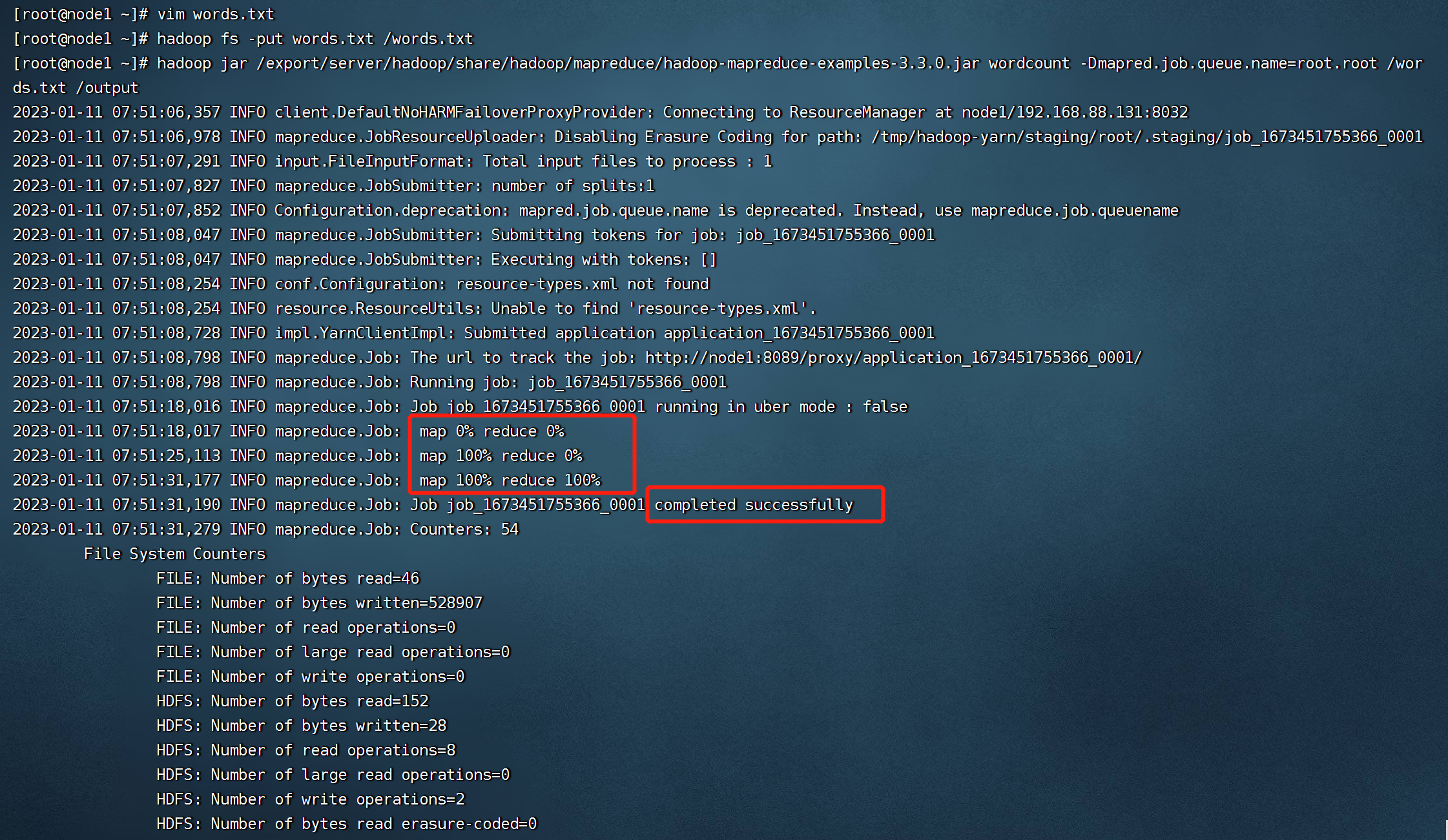

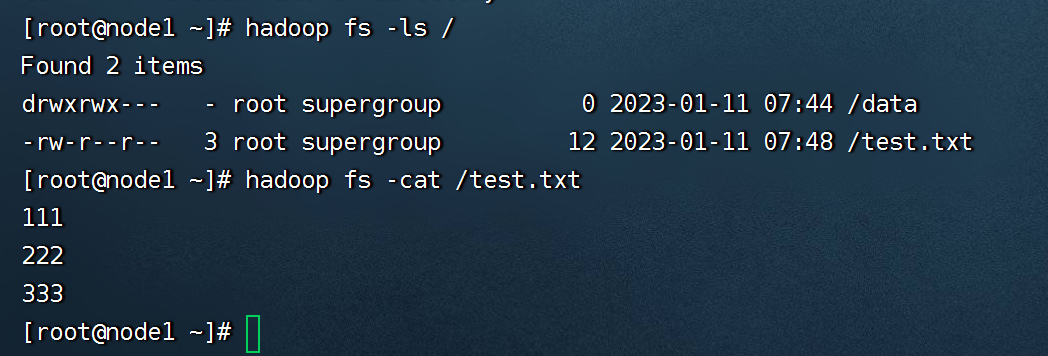

5.10.2.4 Verifying the Hadoop Cluster

1. On node1, node2, and node3, use jps to verify that all processes started successfully
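Comparing jps output against the role assignment by eye is easy to get wrong. The sketch below reports which expected processes are absent; `missing_roles` is a hypothetical helper that reads jps output from standard input. Note that jps prints main class names, which usually show the history server as JobHistoryServer and the web proxy as WebAppProxyServer; confirm the exact names on your own cluster.

```shell
# Hypothetical helper: given jps output on stdin and the expected
# process names as arguments, print the names that are not running.
missing_roles() {
  local jps_output role
  jps_output=$(cat)
  for role in "$@"; do
    # jps prints "<pid> <MainClassName>"; compare the name column exactly.
    printf '%s\n' "$jps_output" \
      | awk -v r="$role" '$2 == r { ok = 1 } END { exit (ok ? 0 : 1) }' \
      || echo "$role"
  done
}
```

On node2 or node3 the check would be `jps | missing_roles DataNode NodeManager QuorumPeerMain`, which prints nothing when everything is up.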

2. Verify HDFS: open http://node1:9870 in a browser

Create a file test.txt with any content, then run:

hadoop fs -put test.txt /test.txt

hadoop fs -cat /test.txt

3. Verify YARN: open http://node1:8088 in a browser

Run:

# Create a file words.txt with the following content

itheima itcast hadoop

itheima hadoop hadoop

itheima itcast

# Upload the file to HDFS

hadoop fs -put words.txt /words.txt

# Run the following command to verify that YARN works

hadoop jar /export/server/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.0.jar wordcount -Dmapred.job.queue.name=root.root /words.txt /output
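To know what the job should produce, the same count can be computed locally on words.txt with standard tools. This is a sketch for comparison only; `local_wordcount` is a hypothetical helper and has nothing to do with how MapReduce executes the job.

```shell
# Local word count: put one word per line, sort, count duplicates,
# then print "word<TAB>count" in the same layout the example job uses.
local_wordcount() {
  tr -s '[:space:]' '\n' < "$1" | grep -v '^$' | sort | uniq -c \
    | awk '{ print $2 "\t" $1 }'
}
```

For the words.txt above this gives hadoop 3, itcast 2, and itheima 3. The job's result can then be compared with `hadoop fs -cat /output/part-r-00000`, assuming the usual single-reducer output file name. The /output directory must not exist before the job runs, so remove it before repeating the test.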