CDH-5.14 and jindo-sdk-4.6.5: running SQL fails with an "insufficient permissions" error

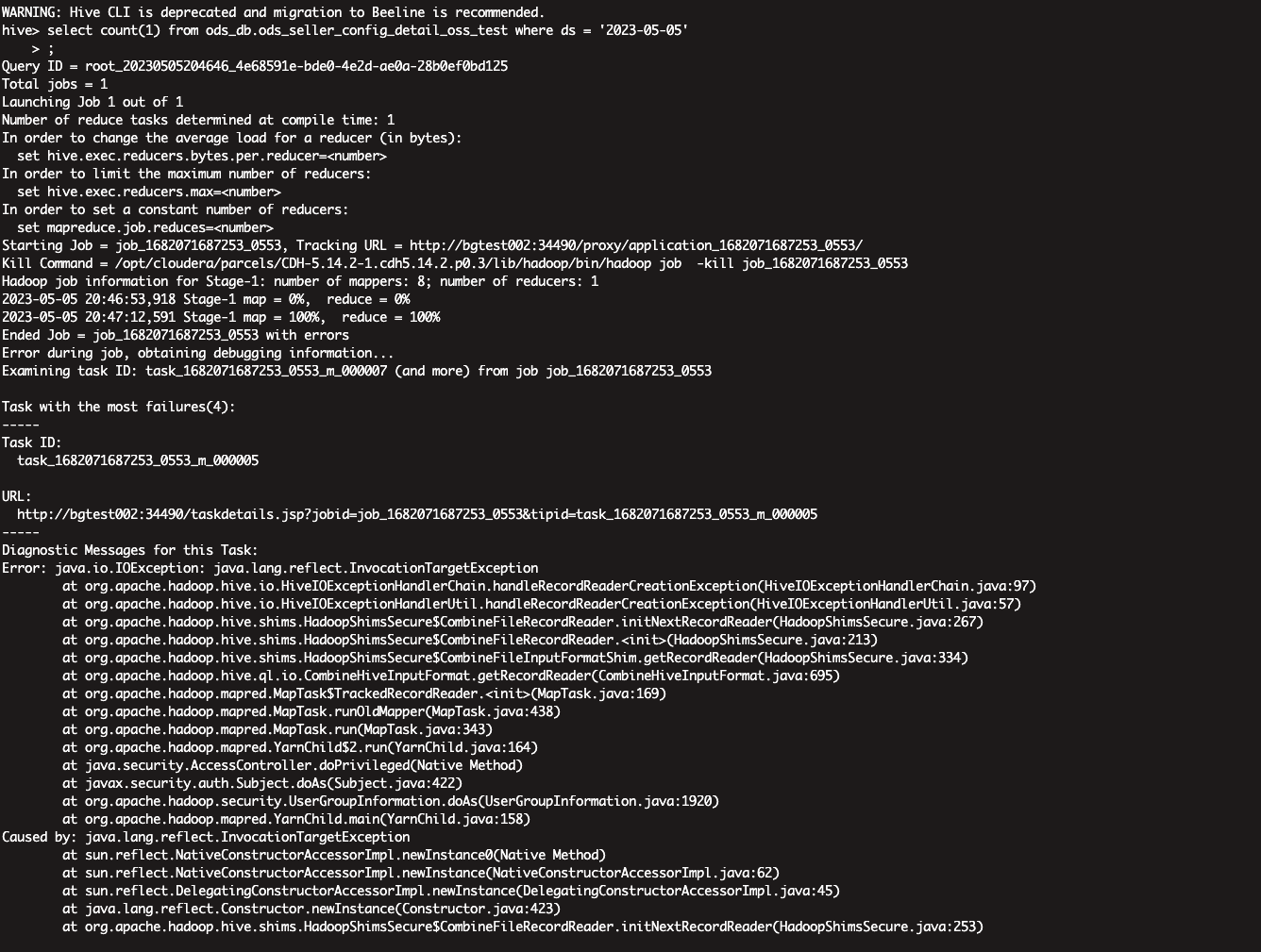

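Cause: the Hive query fails once it runs as a MapReduce job. The error is reported as insufficient permissions, but the underlying exception is a `ClassNotFoundException`: the MR tasks cannot load `com.aliyun.jindodata.oss.JindoOssFileSystem`.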

```text
Caused by: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class com.aliyun.jindodata.oss.JindoOssFileSystem not found
at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2349)
at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2790)
at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2810)
at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:98)
at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2853)
at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2835)
at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:387)
at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)
at org.apache.hadoop.mapred.LineRecordReader.<init>(LineRecordReader.java:108)
at org.apache.hadoop.mapred.TextInputFormat.getRecordReader(TextInputFormat.java:67)
at org.apache.hadoop.hive.ql.io.CombineHiveRecordReader.<init>(CombineHiveRecordReader.java:68)
... 16 more
Caused by: java.lang.ClassNotFoundException: Class com.aliyun.jindodata.oss.JindoOssFileSystem not found
at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2255)
at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2347)
... 26 more
```
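With a `ClassNotFoundException` like this, it helps to confirm which jar provides the class and whether that jar sits in a directory the failing process actually loads from. Below is a minimal sketch; `find_class_jar` is a hypothetical helper, not part of JindoSDK or CDH, and it assumes a `grep` that supports `-a` (GNU or BSD). It is a heuristic that relies on ZIP entry names being stored uncompressed inside a jar; `unzip -l JAR` or `jar tf JAR` give an exact listing.

```shell
#!/bin/sh
# find_class_jar DIR CLASS
# Hypothetical helper: print every jar in DIR whose raw bytes contain the
# entry name for CLASS. Returns 0 if at least one jar matched, 1 otherwise.
find_class_jar() {
  dir=$1
  class=$2
  # com.aliyun.jindodata.oss.JindoOssFileSystem
  #   -> com/aliyun/jindodata/oss/JindoOssFileSystem.class
  entry="$(printf '%s' "$class" | tr '.' '/').class"
  found=1
  for jar in "$dir"/*.jar; do
    [ -e "$jar" ] || continue   # glob matched nothing, or a dangling symlink
    # -a: treat the binary jar as text; -F: fixed string, no regex
    if grep -q -a -F "$entry" "$jar"; then
      printf '%s\n' "$jar"
      found=0
    fi
  done
  return "$found"
}
```

For example, `find_class_jar /opt/jindosdk-4.6.5-linux/lib com.aliyun.jindodata.oss.JindoOssFileSystem` would print the SDK jar that contains the class, and running the same command against Hive's `lib` directory shows whether Hive can see it there.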

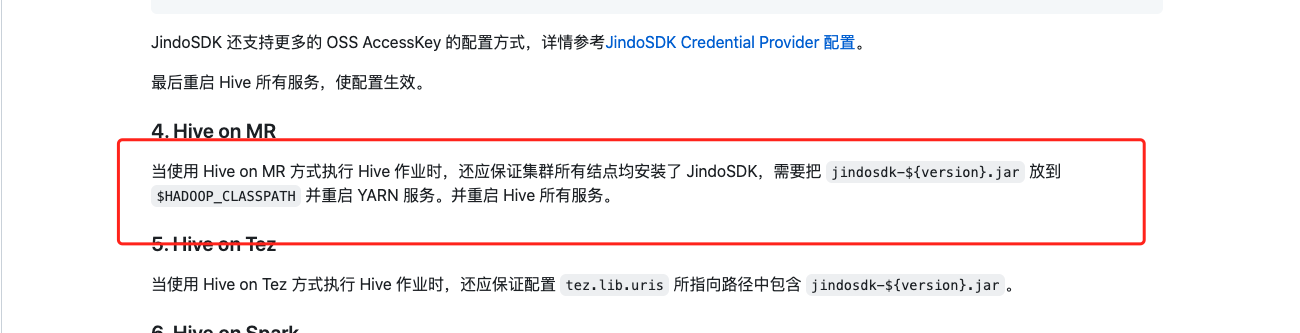

Solution:

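Approach: the first query, `select * from table_name`, ran without error. The second, `select count(1) from table_name`, failed with the insufficient-permissions error. Hive typically answers a plain `select *` with a local fetch task, while `count(1)` launches a MapReduce job, so the failure most likely lies in the MR execution path. I looked into it further from there.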

Create symbolic links:

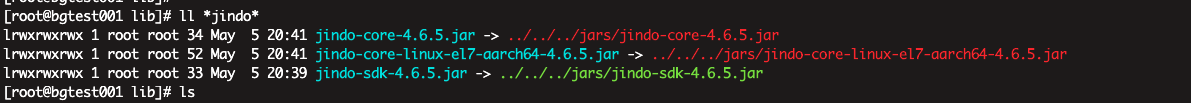

Go to Hive's `lib` directory:

```shell
[root@bgtest001 parcels]# cd CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hive/lib/
```

List the JindoSDK jars:

```shell
[root@bgtest001 lib]# ls /opt/jindosdk-4.6.5-linux/lib/
jindo-core-4.6.5.jar jindo-core-linux-el7-aarch64-4.6.5.jar jindo-sdk-4.6.5.jar native/
```

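Create the symlinks. The targets are relative to `lib/hive/lib`, so `../../../jars/` is the parcel's `jars` directory, and the jars listed above must also be present there:

```shell
[root@bgtest001 lib]# ln -s ../../../jars/jindo-core-linux-el7-aarch64-4.6.5.jar jindo-core-linux-el7-aarch64-4.6.5.jar
[root@bgtest001 lib]# ln -s ../../../jars/jindo-core-4.6.5.jar jindo-core-4.6.5.jar
```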
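The `ln -s` commands above can also be done in one pass. A minimal sketch under these assumptions: `link_jars` is a hypothetical helper, SRC_DIR is the directory that already holds the Jindo jars, and only files matching `jindo-*.jar` are linked so the rest of a large directory such as the parcel's `jars` is left alone. Unlike the commands above it writes the link target exactly as given, so pass an absolute SRC_DIR to get absolute links.

```shell
#!/bin/sh
# link_jars SRC_DIR DEST_DIR [PATTERN]
# Hypothetical helper: symlink jars matching PATTERN (default: jindo-*.jar)
# from SRC_DIR into DEST_DIR, e.g. Hive's lib directory.
link_jars() {
  src=$1
  dest=$2
  pattern=${3:-jindo-*.jar}
  for jar in "$src"/$pattern; do
    [ -e "$jar" ] || continue            # glob matched nothing
    name=$(basename "$jar")
    target="$dest/$name"
    # Never replace a real file that happens to have the same name.
    if [ -e "$target" ] && [ ! -L "$target" ]; then
      printf 'skip    %s (regular file exists)\n' "$target"
      continue
    fi
    # -f replaces an existing symlink, so reruns are safe.
    ln -sf "$jar" "$target"
    printf 'linked  %s -> %s\n' "$target" "$jar"
  done
}
```

With this cluster's parcel layout the call would be `link_jars /opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/jars /opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hive/lib`; adjust both paths to your own installation.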

Verify the links (the `jindo-sdk-4.6.5.jar` link had already been created earlier, as its 20:39 timestamp shows):

```shell
[root@bgtest001 lib]# ll *jindo*
lrwxrwxrwx 1 root root 34 May 5 20:41 jindo-core-4.6.5.jar -> ../../../jars/jindo-core-4.6.5.jar
lrwxrwxrwx 1 root root 52 May 5 20:41 jindo-core-linux-el7-aarch64-4.6.5.jar -> ../../../jars/jindo-core-linux-el7-aarch64-4.6.5.jar
lrwxrwxrwx 1 root root 33 May 5 20:39 jindo-sdk-4.6.5.jar -> ../../../jars/jindo-sdk-4.6.5.jar
```
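A long listing prints a dangling symlink much like a working one, so the output above does not by itself prove the targets exist. A minimal check, assuming it is run against Hive's `lib` directory; `check_links` is a hypothetical helper. `[ -e ]` follows the link, so it fails exactly when the target is missing.

```shell
#!/bin/sh
# check_links DIR [PATTERN]
# Hypothetical helper: report symlinks in DIR matching PATTERN (default:
# *jindo*) as OK or BROKEN. Returns 1 if any link is broken.
check_links() {
  dir=$1
  pattern=${2:-*jindo*}
  bad=0
  for link in "$dir"/$pattern; do
    [ -L "$link" ] || continue          # skip regular files and no-match globs
    if [ -e "$link" ]; then             # -e follows the symlink
      printf 'OK      %s\n' "$link"
    else
      printf 'BROKEN  %s -> %s\n' "$link" "$(readlink "$link")"
      bad=1
    fi
  done
  return "$bad"
}
```

Run from Hive's `lib` directory as `check_links .`; a nonzero exit status means at least one link points at a missing file.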

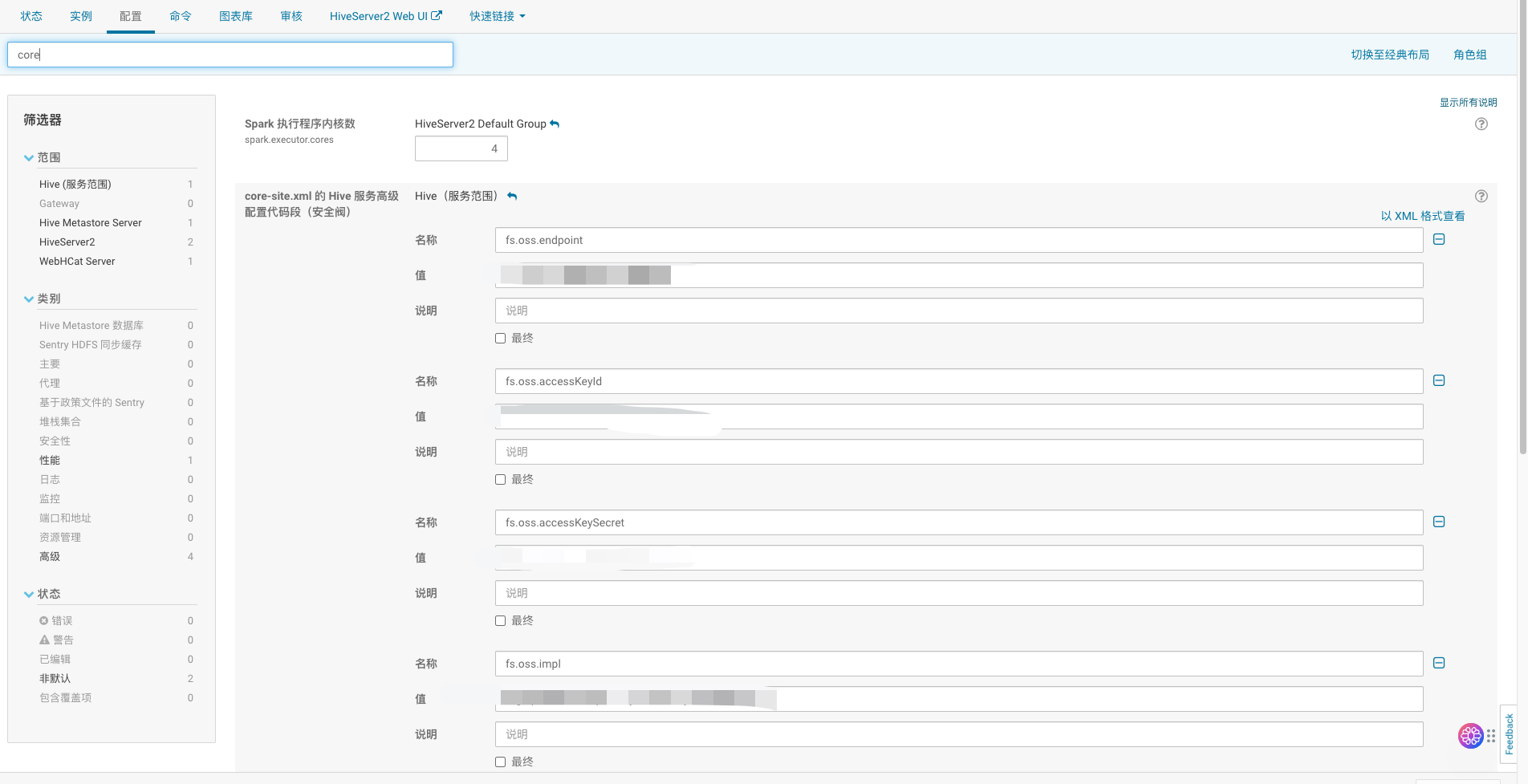

Because this is a CDH cluster, the following properties also have to be configured; restart the Hive service once they are in place:

```xml
<property>
  <name>fs.oss.endpoint</name>
  <value>cn-shanghai.oss-dls.aliyuncs.com</value>
</property>
<property>
  <name>fs.oss.accessKeyId</name>
  <value>YOUR_ACCESS_KEY_ID</value>
</property>
<property>
  <name>fs.oss.accessKeySecret</name>
  <value>YOUR_ACCESS_KEY_SECRET</value>
</property>
<property>
  <name>fs.oss.impl</name>
  <value>org.apache.hadoop.fs.aliyun.oss.AliyunOSSFileSystem</value>
</property>
```

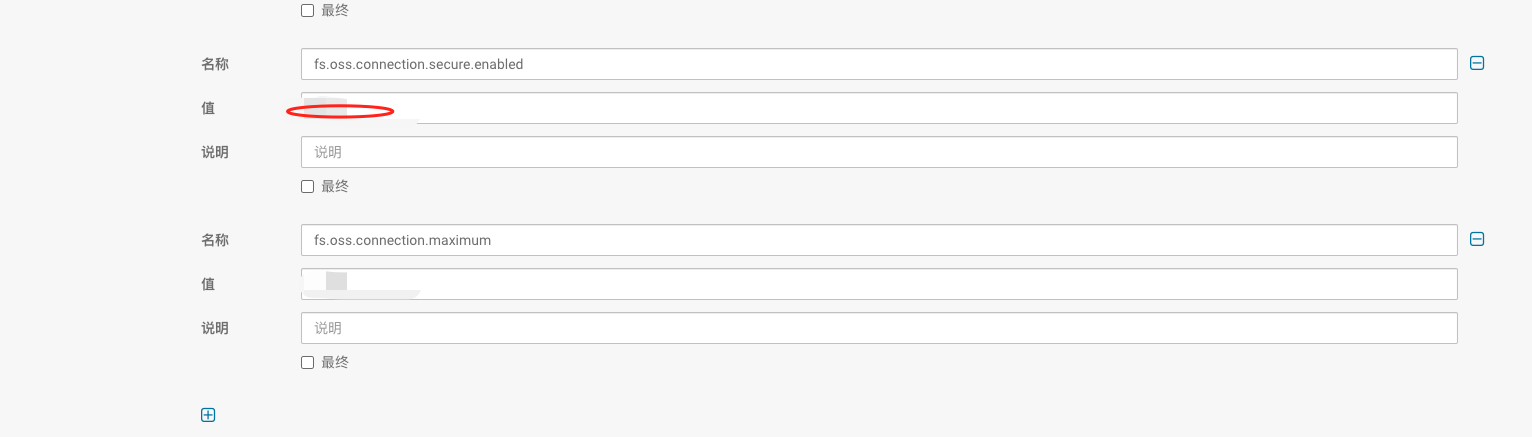

```xml
<property>
  <name>fs.oss.connection.secure.enabled</name>
  <value>false</value>
</property>
<property>
  <name>fs.oss.connection.maximum</name>
  <value>10000</value>
</property>
```
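Before restarting Hive, it can be worth confirming that all six properties actually made it into the configuration file that was edited. A minimal sketch: `missing_props` is a hypothetical helper, and the file path used below is an assumption, since a Cloudera Manager cluster may deploy client configuration elsewhere. It is a plain-text match on `<name>KEY</name>`, so it does not resolve XIncludes or extra whitespace inside the tag; `hdfs getconf -confKey fs.oss.endpoint` queries the effective client configuration instead.

```shell
#!/bin/sh
# missing_props FILE KEY...
# Hypothetical helper: print each KEY that has no <name>KEY</name> entry in
# FILE. Returns 1 if any key is missing, 0 if all are present.
missing_props() {
  file=$1
  shift
  missing=0
  for key in "$@"; do
    # -F: match the tag literally, dots in the key are not regex wildcards
    if ! grep -q -F "<name>$key</name>" "$file"; then
      printf 'missing: %s\n' "$key"
      missing=1
    fi
  done
  return "$missing"
}
```

Example, with an assumed path: `missing_props /etc/hadoop/conf/core-site.xml fs.oss.endpoint fs.oss.accessKeyId fs.oss.accessKeySecret fs.oss.impl fs.oss.connection.secure.enabled fs.oss.connection.maximum`.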

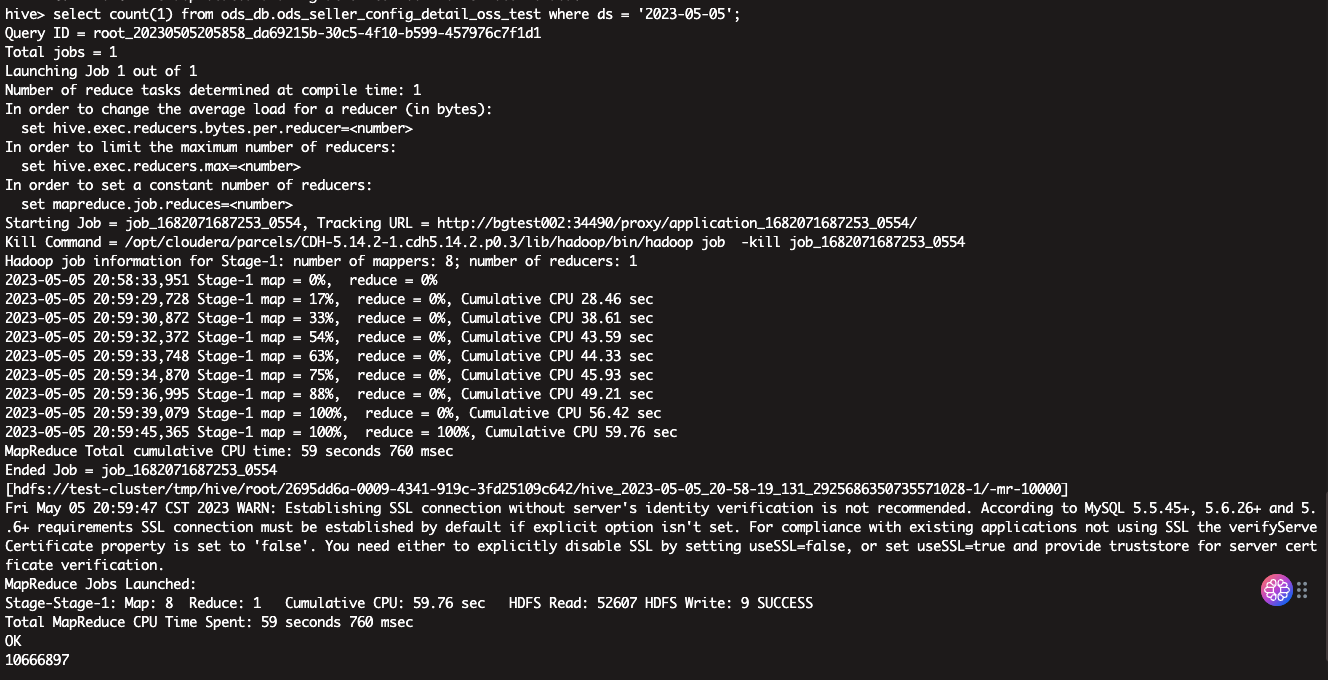

With the configuration above in place, start Hive and rerun the SQL; the query now succeeds:

```text
hive> select count(1) from ods_db.ods_seller_config_detail_oss_test where ds = '2023-05-05';
Query ID = root_20230505205858_da69215b-30c5-4f10-b599-457976c7f1d1
Total jobs = 1
Launching Job 1 out of 1
Number of reduce tasks determined at compile time: 1
In order to change the average load for a reducer (in bytes):
set hive.exec.reducers.bytes.per.reducer=<number>
In order to limit the maximum number of reducers:
set hive.exec.reducers.max=<number>
In order to set a constant number of reducers:
set mapreduce.job.reduces=<number>
Starting Job = job_1682071687253_0554, Tracking URL = http://bgtest002:34490/proxy/application_1682071687253_0554/
Kill Command = /opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop/bin/hadoop job -kill job_1682071687253_0554
Hadoop job information for Stage-1: number of mappers: 8; number of reducers: 1
2023-05-05 20:58:33,951 Stage-1 map = 0%, reduce = 0%
2023-05-05 20:59:29,728 Stage-1 map = 17%, reduce = 0%, Cumulative CPU 28.46 sec
2023-05-05 20:59:30,872 Stage-1 map = 33%, reduce = 0%, Cumulative CPU 38.61 sec
2023-05-05 20:59:32,372 Stage-1 map = 54%, reduce = 0%, Cumulative CPU 43.59 sec
2023-05-05 20:59:33,748 Stage-1 map = 63%, reduce = 0%, Cumulative CPU 44.33 sec
2023-05-05 20:59:34,870 Stage-1 map = 75%, reduce = 0%, Cumulative CPU 45.93 sec
2023-05-05 20:59:36,995 Stage-1 map = 88%, reduce = 0%, Cumulative CPU 49.21 sec
2023-05-05 20:59:39,079 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 56.42 sec
2023-05-05 20:59:45,365 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 59.76 sec
MapReduce Total cumulative CPU time: 59 seconds 760 msec
Ended Job = job_1682071687253_0554
[hdfs://test-cluster/tmp/hive/root/2695dd6a-0009-4341-919c-3fd25109c642/hive_2023-05-05_20-58-19_131_2925686350735571028-1/-mr-10000]
Fri May 05 20:59:47 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.
MapReduce Jobs Launched:
Stage-Stage-1: Map: 8 Reduce: 1 Cumulative CPU: 59.76 sec HDFS Read: 52607 HDFS Write: 9 SUCCESS
Total MapReduce CPU Time Spent: 59 seconds 760 msec
OK
10666897
Time taken: 88.877 seconds, Fetched: 1 row(s)
```
