MySQL Shell 逻辑备份恢复API

| 阿里云国内75折 回扣 微信号:monov8 |

| 阿里云国际,腾讯云国际,低至75折。AWS 93折 免费开户实名账号 代冲值 优惠多多 微信号:monov8 飞机:@monov6 |

MySQL8.0开始提供的MySQL Shell功能是DBA推向了另一个高度除SQL外正式踏入Cloud数据库服务 和 shell操作数据库领域MGR是一个代表点。

日常DBA工作可以通过MySQL Shell更容易实现。因为MySQL Shell处理用JavaScript、Python和SQL编写的代码提供了一种交互式代码执行模式。Shell是一个新的知识点可用于Microsoft Windows、Linux和64位平台的macOS。并且也支持任何GA版本的MySQL 5.7或8.0一起使用。

MySQL8.0 推出Clone功能之后8.0.21增加了一种新的逻辑备份恢复API接口

- 多线程

- 控制速率

- 支持zstd压缩

- 支持chunk并行导出

- load data并行导入

- 输出执行信息行数进度条 等

- 备份到第三方存储设备中 如OCIS3等 ;

MySQL shell脚本里逻辑备份7个API接口

1. util.dumpInstance() 用于备份整个实例 2. util.dumpSchemas() 用于备份指定schema 3. util.dumpTables() 用于备份表 4. util.loadDump() 用于恢复备份 5. util.importTable() 用于导入表 6. util.importJson() 用户JSON导入 7. util.export_table() 用于导出表

在原有的逻辑备份恢复工具中mysqldumpmysqlpumpmydumper又多了一种并行备份恢复方式。

导出

不同级别的逻辑dump实例库表。

dump-schemas指定schema级别进行备份

输出信息中打印了 线程DDL rowsschematables Compressed 等信息。这些记录信息是可观判断进度的很好依据。

shell> mysqlsh shadmin@172.17.27.48:3380 -- util dump-schemas employees --outputUrl=/tmp/dump

Please provide the password for 'shadmin@172.17.27.48:3380': ******

Save password for 'shadmin@172.17.27.48:3380'? [Y]es/[N]o/Ne[v]er (default No): N

Acquiring global read lock

Global read lock acquired

Initializing - done

1 schemas will be dumped and within them 11 tables, 2 views.

Gathering information - done

All transactions have been started

Locking instance for backup

Global read lock has been released

Writing global DDL files

NOTE: Could not select columns to be used as an index for table `employees`.`employees01`. Chunking has been disabled for this table, data will be dumped to a single file.

Running data dump using 4 threads.

NOTE: Progress information uses estimated values and may not be accurate.

Writing schema metadata - done

Writing DDL - done

Writing table metadata - done

Starting data dump

100% (2.52M rows / ~2.51M rows), 744.64K rows/s, 28.66 MB/s uncompressed, 7.84 MB/s compressed

Dump duration: 00:00:03s

Total duration: 00:00:03s

Schemas dumped: 1

Tables dumped: 11

Uncompressed data size: 96.44 MB

Compressed data size: 27.11 MB

Compression ratio: 3.6

Rows written: 2518358

Bytes written: 27.11 MB

Average uncompressed throughput: 27.77 MB/s

Average compressed throughput: 7.81 MB/s MySQL服务器general日志记录里 RR隔离级别 多线程处理机制FTWRL等操作都包含。

2022-12-16T11:43:58.787526+08:00 24 Query FLUSH NO_WRITE_TO_BINLOG TABLES

2022-12-16T11:43:58.789720+08:00 24 Query FLUSH TABLES WITH READ LOCK

2022-12-16T11:43:58.789994+08:00 24 Query SET SESSION TRANSACTION ISOLATION LEVEL REPEATABLE READ

2022-12-16T11:43:58.790235+08:00 24 Query START TRANSACTION WITH CONSISTENT SNAPSHOT

2022-12-16T11:43:58.790951+08:00 24 Query SELECT @@GLOBAL.VERSION

2022-12-16T11:43:58.791192+08:00 24 Query EXPLAIN SELECT 1

2022-12-16T11:43:58.791488+08:00 24 Query SELECT SCHEMA_NAME,DEFAULT_COLLATION_NAME FROM information_schema.schemata WHERE (STRCMP(SCHEMA_NAME COLLATE utf8_bin,'employees'))=0

2022-12-16T11:43:58.792735+08:00 24 Query SELECT COUNT(*) FROM information_schema.schemata

2022-12-16T11:43:58.794541+08:00 24 Query SELECT TABLE_SCHEMA,TABLE_NAME,TABLE_TYPE,TABLE_ROWS,AVG_ROW_LENGTH,ENGINE,CREATE_OPTIONS,TABLE_COMMENT FROM information_schema.tables WHERE (STRCMP(TABLE_SCHEMA COLLATE utf8_bin,'employees'))=0

2022-12-16T11:43:58.797286+08:00 28 Connect shadmin@iZuf6178v14ipc59jbbpfnZ on using TCP/IP

2022-12-16T11:43:58.797528+08:00 26 Connect shadmin@iZuf6178v14ipc59jbbpfnZ on using TCP/IP

2022-12-16T11:43:58.797749+08:00 27 Connect shadmin@iZuf6178v14ipc59jbbpfnZ on using TCP/IP

2022-12-16T11:43:58.797988+08:00 25 Connect shadmin@iZuf6178v14ipc59jbbpfnZ on using TCP/IP导出表文件结构如下

[root@schouse dump]# tree

。。。

├── employees@titles@@0.tsv.zst #数据信息

├── employees@titles@@0.tsv.zst.idx #索引对应信息

├── employees@titles.json #表基础信息

├── employees@titles.sql #表创建语句

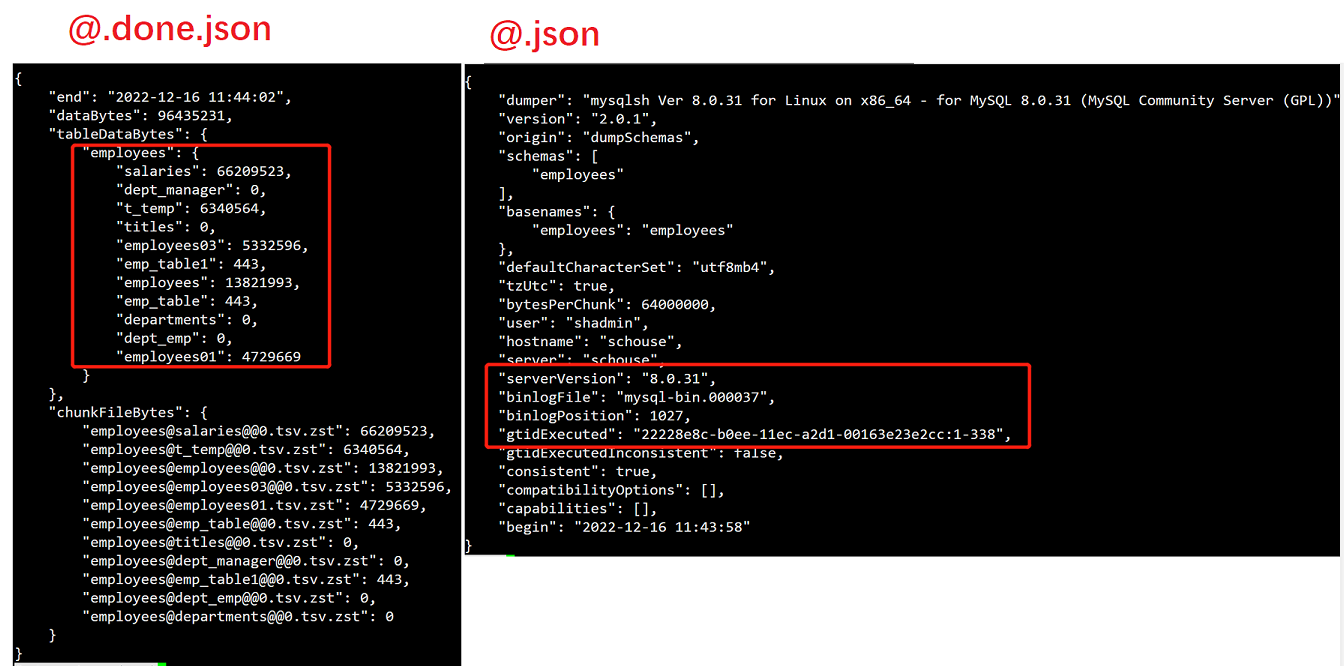

├── @.done.json #导出表名 表行数等信息

├── @.json #gtid binlog postion信息

├── @.post.sql #版本信息

└── @.sql

核心控制参数

| dump参数 | 说明 |

|---|---|

| dryRun | dump的内容以及MySQL数据库服务兼容性检查结果的信息 |

| showProgress | 显示true或隐藏false执行的进度信息。 |

| maxRate | dump期间每个线程每秒用于数据读取吞吐量的最大字节数。 |

| defaultCharacterSet | 导出时使用的字符集。 |

| consistent | 备份一致数据使用FLUSH TABLES WITH read lock语句设置全局读锁或者使用lock TABLES语句 |

| threads | 并行线程数。每个线程都有自己的MySQL实例连接。默认值为4。 |

| compression | 写入数据转储文件时使用的压缩算法“none”“gzip”、“zstd”。默认值“zstd”。 |

除此之外dump-instancedump-tables使用也比较简单

#util dump-instance <outputUrl> [<options>]

shell> mysqlsh root@localhost -- util dump-instance /tmp/dump --excludeSchemas=employees

#util dump-tables <schema> <tables> --outputUrl=<str> [<options>]

shell> mysqlsh shadmin@172.17.27.48:3380 -- util dump-tables employees employees03 --outputUrl=/tmp/dump导入

数据导入的接口loadDump

指定之前备份的文件路径整个过程执行ddl语句 load数据。

MySQL 127.0.0.1:3380 JS > util.loadDump("/tmp/dump/")

Loading DDL and Data from '/tmp/dump/' using 4 threads.

Opening dump...

Target is MySQL 8.0.31. Dump was produced from MySQL 8.0.31

NOTE: Load progress file detected. Load will be resumed from where it was left, assuming no external updates were made.

You may enable the 'resetProgress' option to discard progress for this MySQL instance and force it to be completely reloaded.

Scanning metadata - done

Executing common preamble SQL

Executing DDL - done

Executing view DDL - done

Starting data load

Executing common postamble SQL

100% (257 bytes / 257 bytes), 0.00 B/s, 2 / 2 tables done

Recreating indexes - done

2 chunks (11 rows, 257 bytes) for 2 tables in 1 schemas were loaded in 0 sec (avg throughput 257.00 B/s)

0 warnings were reported during the load. 单表结构创建数据导入操作

#单独导入表结构

util.loadDump("/tmp/dump", { includeTables: ["worldb.city"], loadDdl: true, loadData: false});

#单独导入数据

util.importTable ("/tmp/dump/worldb@city@@0.tsv.zst", { schema: "worldb", table: "city"});执行结果日志记录

shell# cat load-progress.22228e8c-b0ee-11ec-a2d1-00163e23e2cc.json

{"op":"SERVER-UUID","done":true,"timestamp":1672108993998,"uuid":"22228e8c-b0ee-11ec-a2d1-00163e23e2cc"}

{"op":"SCHEMA-DDL","done":false,"timestamp":1672109012555,"schema":"worldb"}

{"op":"TABLE-DDL","done":false,"timestamp":1672109012555,"schema":"worldb","table":"city"}

{"op":"TABLE-DDL","done":false,"timestamp":1672109012555,"schema":"worldb","table":"ct"}

{"op":"SCHEMA-DDL","done":true,"timestamp":1672109012578,"schema":"worldb"}

{"op":"TABLE-DATA","done":false,"timestamp":1672109012579,"schema":"worldb","table":"city","chunk":0}

{"op":"TABLE-DATA","done":false,"timestamp":1672109012579,"schema":"worldb","table":"ct","chunk":-1}

{"op":"TABLE-DATA","done":true,"timestamp":1672109012593,"schema":"worldb","table":"city","chunk":0,"bytes":210,"raw_bytes":148,"rows":9}util.loadDump 优点如下

- 多线程并行操作。

- 支持断点续传功能progressFile文件记录执行进度从上次完成的地方继续执行如上述load-progress…progress记录文件。如需从头开始resetProgress 设置为 true。

- 支持延迟创建二级索引。

- 通过LOAD DATA LOCAL INFILE 命令来导入数据一个事务。

- 如果单个文件过大自动进行切割以避免产生大事务。

其他

export_table

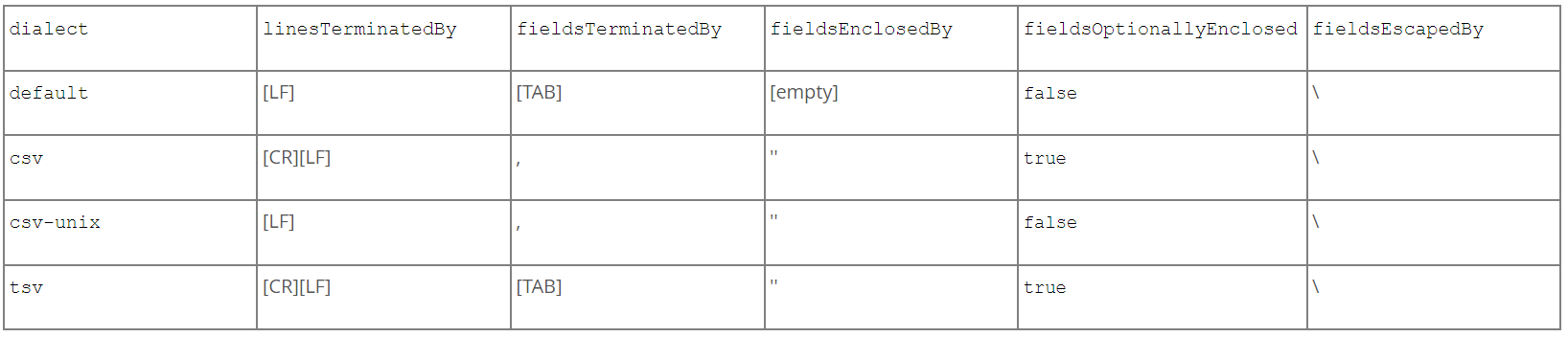

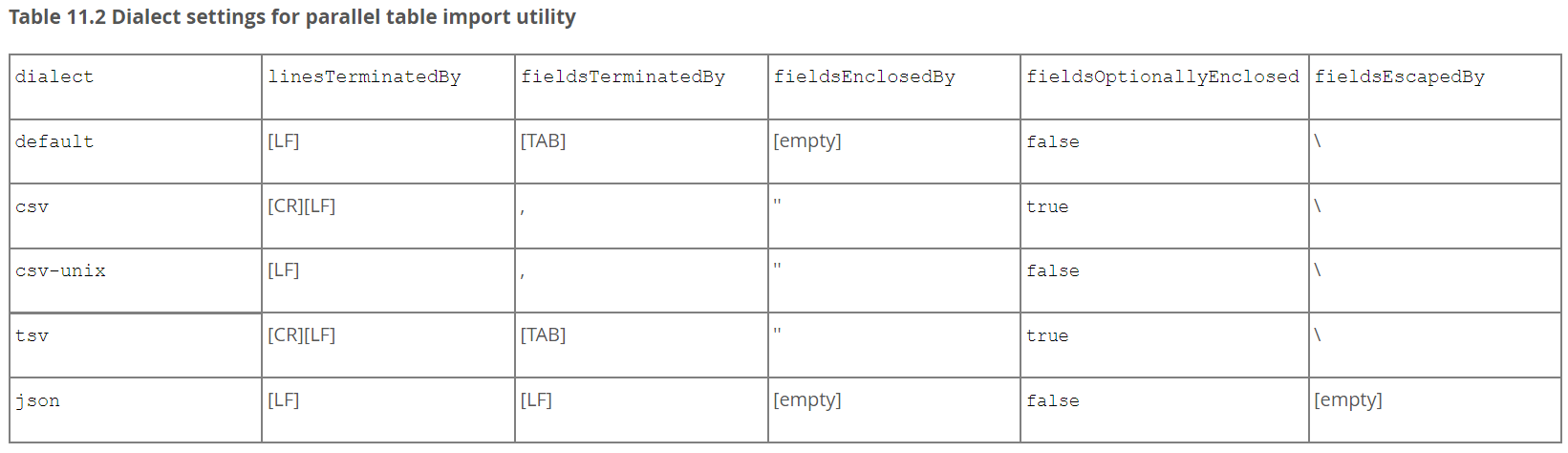

跟SQL【SELECT。。。INTO OUTFILE】语句中一样的功效支持多种文件格式[default|csv|csv unix|tsv]。

为导出数据文件的格式指定一组字段和行处理选项。还可以通过指定一个或多个行TerminatedBy、fieldsTerminatedByfieldsEnclosedBy、fieldsOptionallyEnclosed和fieldsScapedBy选项来更改设置。

MySQL 127.0.0.1:3380 JS > util.exportTable("test.employees", "/tmp/employees.txt")

Initializing - done

Gathering information - done

Running data dump using 1 thread.

NOTE: Progress information uses estimated values and may not be accurate.

Starting data dump

100% (300.02K rows / ~299.47K rows), 0.00 rows/s, 0.00 B/s

Dump duration: 00:00:00s

Total duration: 00:00:00s

Data size: 13.82 MB

Rows written: 300024

Bytes written: 13.82 MB

Average throughput: 13.82 MB/s

The dump can be loaded using:

util.importTable("/tmp/employees.txt", {

"characterSet": "utf8mb4",

"schema": "test",

"table": "employees"

})import-table

并行表导入实用程序使用LOAD DATA LOCAL INFILE语句上载数据因此目标服务器上的LOCAL_INFILE系统变量必须设置为ON。

MySQL 127.0.0.1:3380 JS > \sql SET GLOBAL local_infile = 1;

MySQL 127.0.0.1:3380 JS > util.importTable("/tmp/employees.txt",

{"characterSet": "utf8mb4","schema": "test","table": "employees" ,

"threads":4 , "showProgress": true})

Importing from file '/tmp/employees.txt' to table `test`.`employees` in MySQL Server at 127.0.0.1:3380 using 1 thread

[Worker000] employees.txt: Records: 300024 Deleted: 0 Skipped: 0 Warnings: 0

100% (13.82 MB / 13.82 MB), 2.46 MB/s

File '/tmp/employees.txt' (13.82 MB) was imported in 6.0851 sec at 2.27 MB/s

Total rows affected in test.employees: Records: 300024 Deleted: 0 Skipped: 0 Warnings: 0

总结

MySQL Shell备份恢复API按照官方Relase记录 8.0.27版本之后是一个比较稳定的版本。按照提供的速度和功能性方面可以说一些逻辑处理场景中MySQL shell应该是效率最高。目前API 支持5.7和8.0版本。

| mysqldump | mysqlpump | mydumper | mysql shell | |

|---|---|---|---|---|

| 线程 | 单线程 | 多线程 | 多线程 | 多线程 |

| 压缩 | 支持 | 支持 | 支持 | 支持 |

| 远程 | 支持 | 支持 | 支持 | 支持 |

| 备份速度 | 慢 | 快 | 快 | 快 |

| 恢复速度 | 慢 | 慢 | 快 | 快 |

| 分割 | 不支持 | 不支持 | 支持 | 支持 |

| 第三方存储 | 不支持 | 不支持 | 不支持 | 支持 |

参考

https://dev.mysql.com/doc/mysql-shell/8.0/en/mysql-shell-utilities.html

帮助指南

mysqlsh -- util dump-instance --help

mysqlsh -- util dump-schemas --help

mysqlsh -- util dump-tables --help

mysqlsh -- util import-table --help

mysqlsh -- util exportTable --help

mysqlsh -- util loadDump --help