Deploying a highly available k8s 1.27.1 cluster on CentOS 7.9 with kubeasz


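kubeasz aims to be a tool for quickly deploying highly available k8s clusters, and also strives to be a reference for k8s practice and usage.

It deploys from binaries and automates the work with ansible-playbook. It provides a one-click install script, and the components can also be installed step by step, as this post does.

kubeasz assembles a complete cluster from individual components and offers very flexible configuration: nearly any parameter of any component can be set.

It can even automate the creation of a BGP Route Reflector network mode suited to large clusters.

Project page: GitHub - easzlab/kubeasz: installs K8S clusters with Ansible scripts, explains how the components interact, is convenient and direct, and is not affected by network conditions in mainland China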

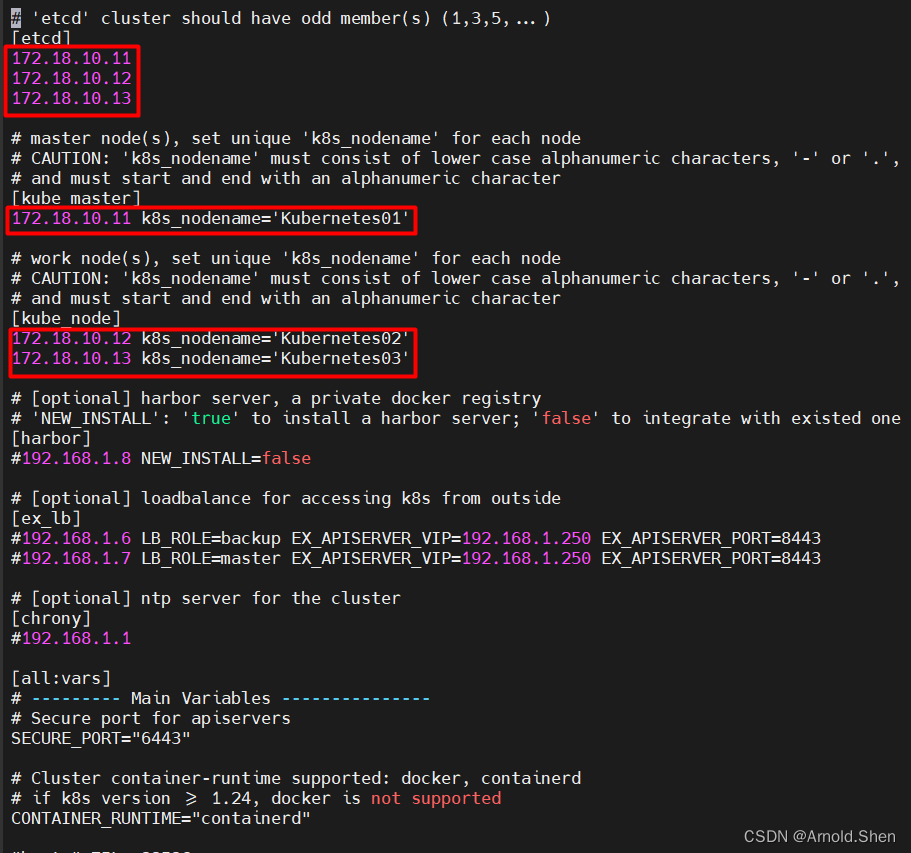

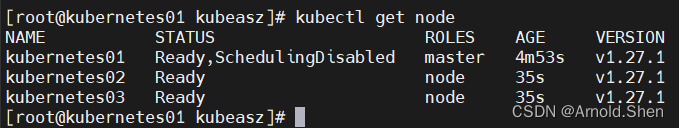

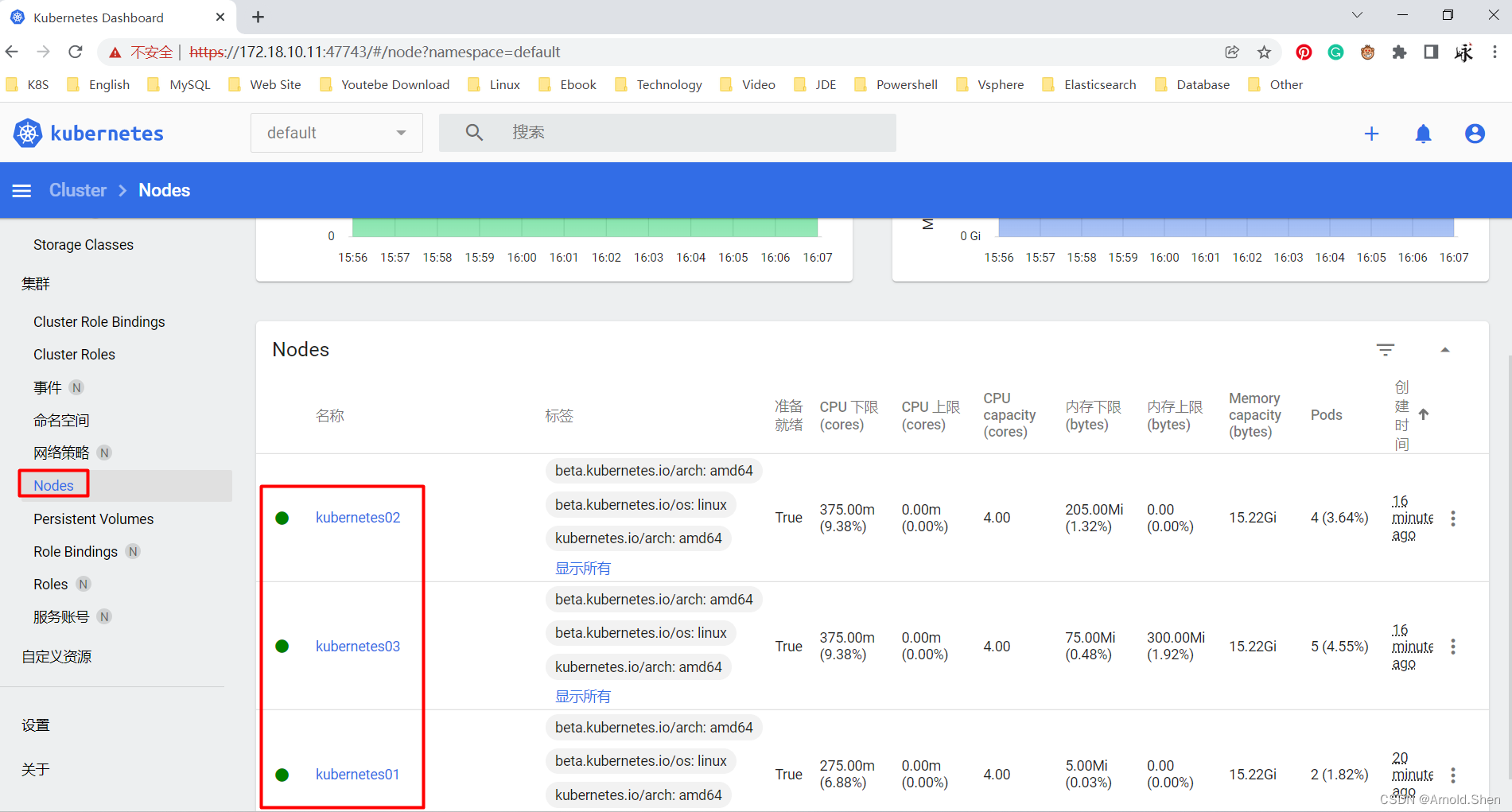

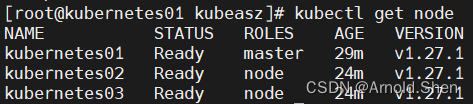

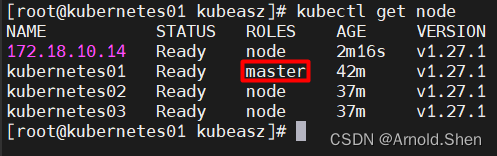

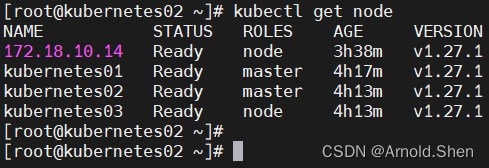

Kubernetes01 is the master node; Kubernetes02 and Kubernetes03 are the k8s worker nodes.

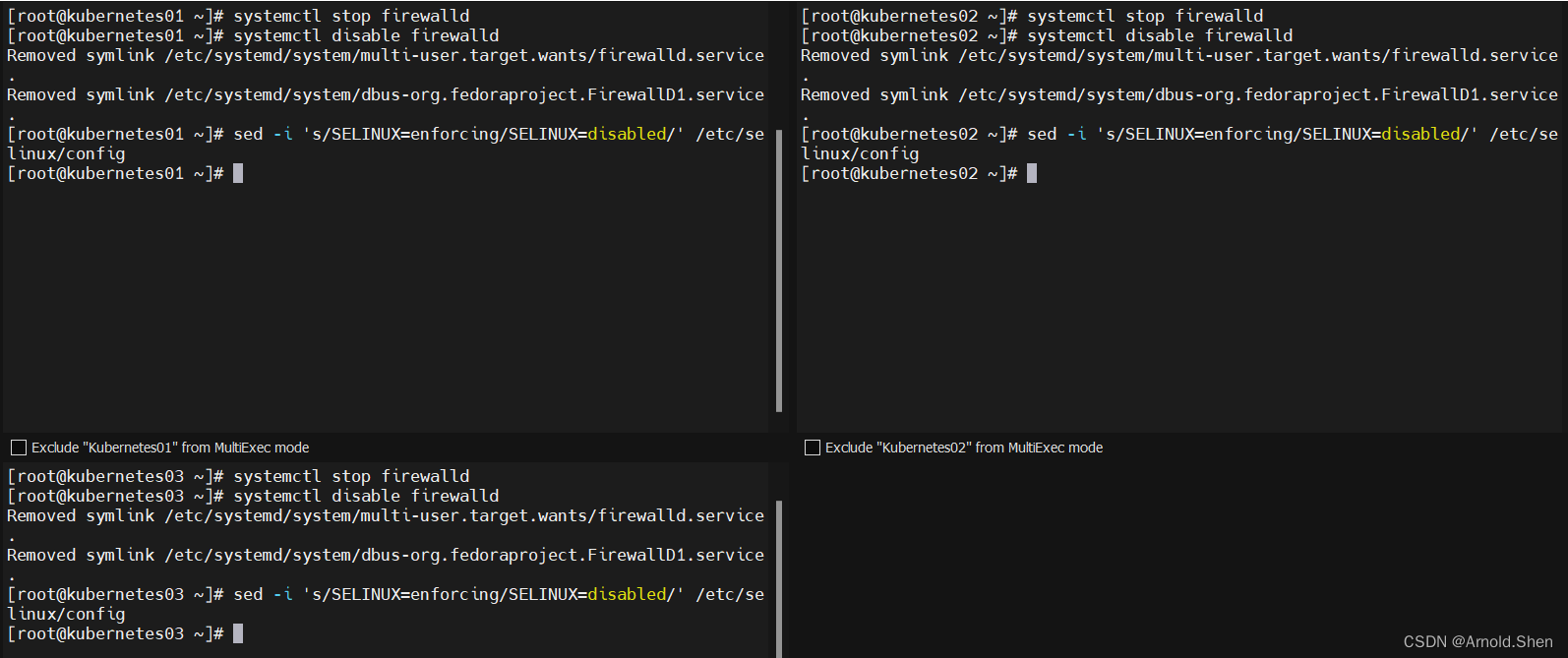

On every node, disable SELinux and firewalld, and flush the iptables rules:

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
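The preparation step above could be scripted roughly as follows. This is a minimal sketch, not the author's script: the function name `disable_selinux_in` is made up for illustration, and the commented commands are the usual CentOS 7 ones, assumed to be run as root on each node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch SELinux to "disabled" in the given config file.
disable_selinux_in() {
  sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' "$1"
}

# Typical usage on a node, as root (commented out so sourcing this file is harmless):
# disable_selinux_in /etc/selinux/config
# setenforce 0                         # stop enforcing until the next reboot
# systemctl disable --now firewalld    # stop firewalld and keep it off
# iptables -F && iptables -X           # flush rules, delete user-defined chains
# iptables -t nat -F && iptables -t nat -X
```

The change in `/etc/selinux/config` only takes effect after a reboot, which is why `setenforce 0` is listed alongside it.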

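Set up passwordless SSH key authentication between the nodes. Reference: "Configuring passwordless SSH login on CentOS 7.9 (no need to merge authorized_keys)", Arnold.Shen's blog on CSDN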

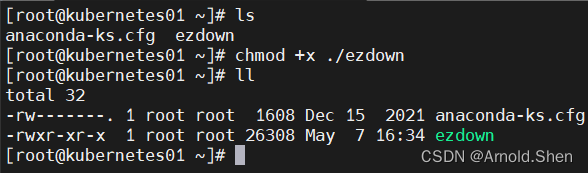

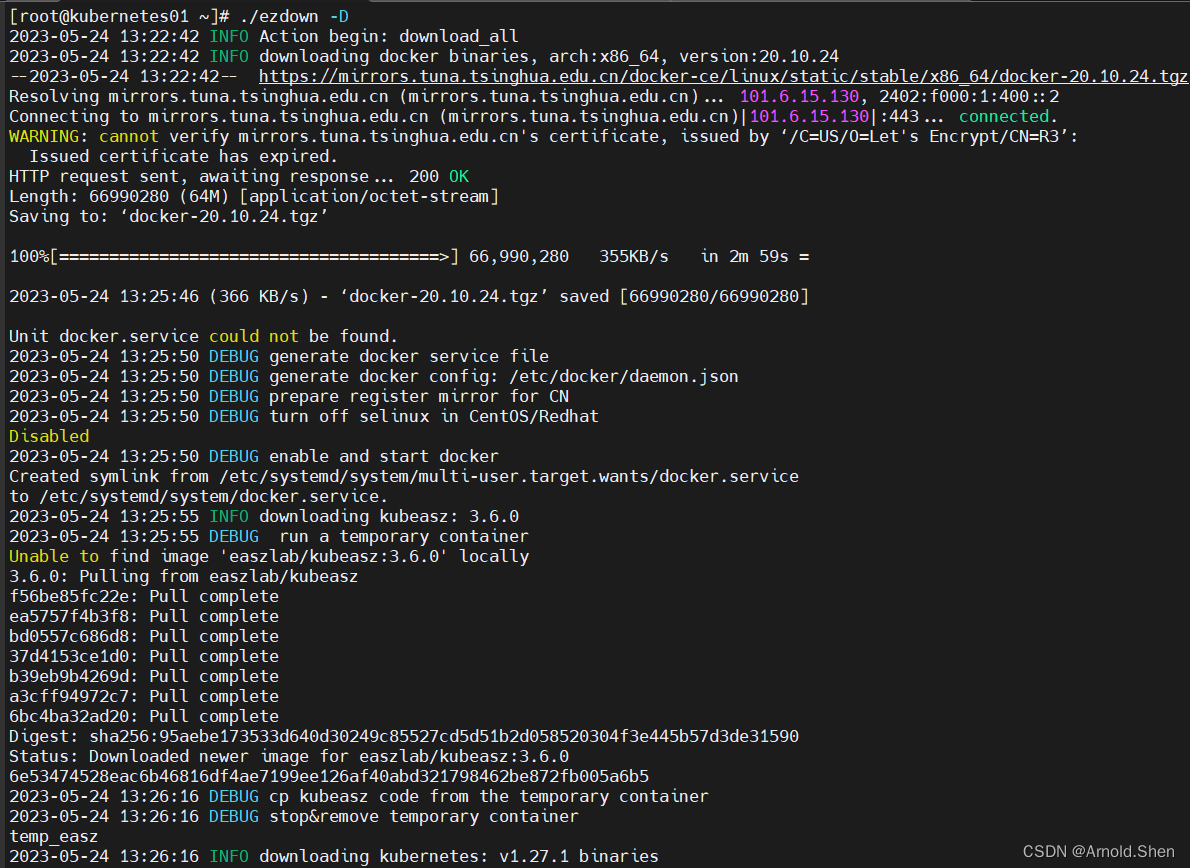

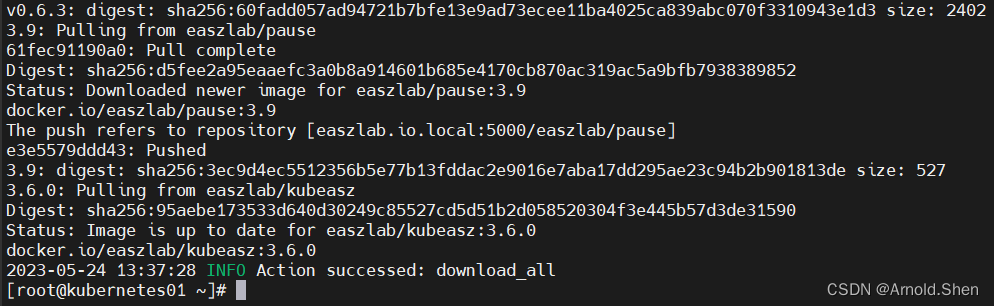

Example: wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

wget https://github.com/easzlab/kubeasz/releases/download/3.6.0/ezdown
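The URL pattern above can be parameterized by release tag. A small sketch, assuming only the pattern shown in the post; the helper name `ezdown_url` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the ezdown download URL for a kubeasz release tag.
ezdown_url() {
  printf 'https://github.com/easzlab/kubeasz/releases/download/%s/ezdown\n' "$1"
}

release=3.6.0
echo "would fetch: $(ezdown_url "$release")"

# On the deploy node:
# wget "$(ezdown_url "$release")" && chmod +x ./ezdown
```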

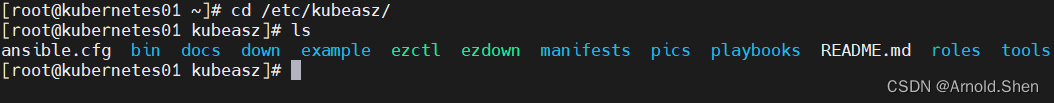

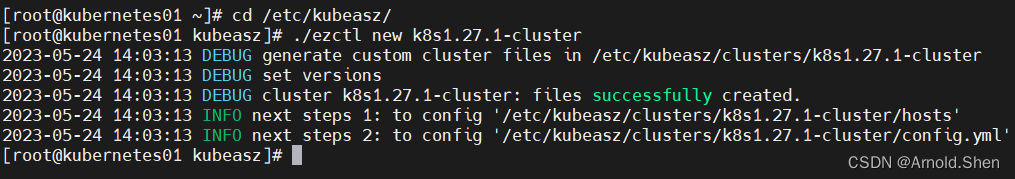

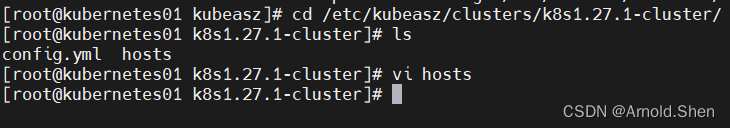

cd /etc/kubeasz/clusters/k8s1.27.1-cluster/

# master node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

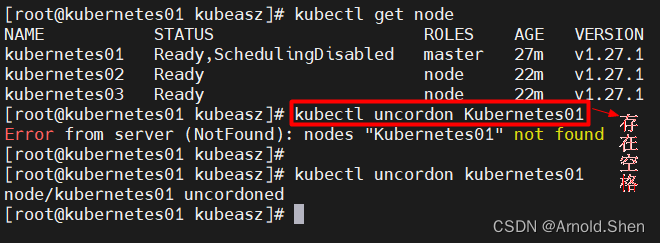

172.18.10.11 k8s_nodename='Kubernetes01'

# work node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

172.18.10.12 k8s_nodename='Kubernetes02'

172.18.10.13 k8s_nodename='Kubernetes03'

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

# --------- Main Variables ---------------

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

# K8S Service CIDR, not overlap with node(host) networking

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---

# Deploy Directory (kubeasz workspace)

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s1.27.1-cluster"

# CA and other components cert/key Directory

# Default 'k8s_nodename' is empty
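The CAUTION comments in the hosts file require lower-case node names, while the entries above use names such as 'Kubernetes01'. The sketch below checks a name against the validation regex quoted in the kubeasz comments and applies the same normalization as the `K8S_NODENAME` template (replace '_' with '-', then lower-case). The function names are hypothetical and this is not kubeasz's own validation code.

```shell
#!/usr/bin/env bash
# Regex quoted in the kubeasz comments, anchored to match the whole name.
NODENAME_RE='^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'

# Hypothetical helper: succeed if $1 is acceptable as a k8s_nodename.
# LC_ALL=C keeps [a-z] from matching upper-case letters in some locales.
valid_nodename() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq "$NODENAME_RE"
}

# Hypothetical helper: replace '_' with '-' and lower-case the name.
normalize_nodename() {
  printf '%s\n' "$1" | tr '_' '-' | tr '[:upper:]' '[:lower:]'
}

for name in Kubernetes01 "$(normalize_nodename Kubernetes01)"; do
  if valid_nodename "$name"; then
    echo "$name: ok"
  else
    echo "$name: invalid"
  fi
done
```

With this normalization the nodes would be expected to register under lower-case names such as kubernetes01.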

# optional: install system packages offline (offline|online)

# optional: apply OS security hardening, see github.com/dev-sec/ansible-collection-hardening

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

# force to recreate CA and other certs, not suggested to set 'true'

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# set unique 'k8s_nodename' for each node, if not set(default:'') ip add will be used

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character (e.g. 'example.com'),

# regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'

K8S_NODENAME: "{%- if k8s_nodename != '' -%} \

{{ k8s_nodename|replace('_', '-')|lower }} \

ETCD_DATA_DIR: "/var/lib/etcd"

# role:runtime [containerd,docker]

# ------------------------------------------- containerd

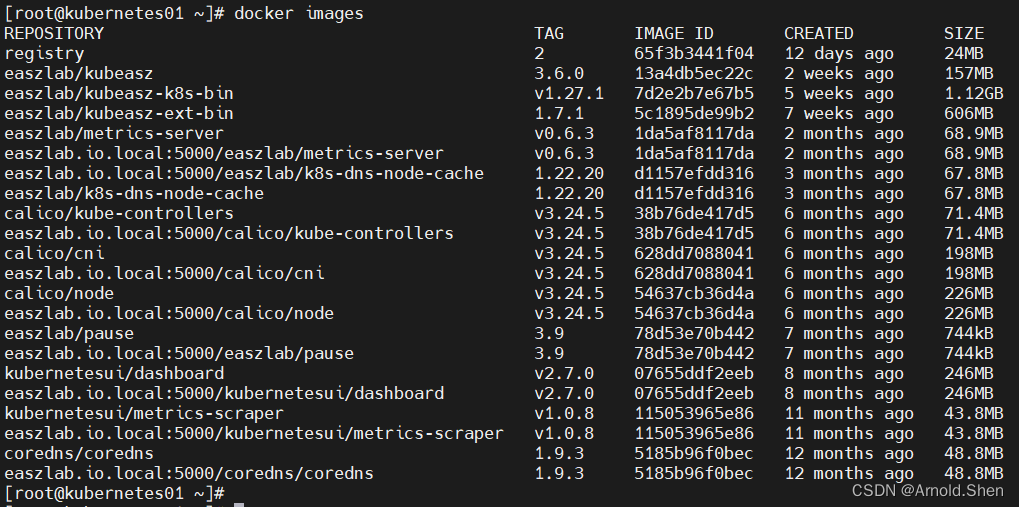

SANDBOX_IMAGE: "easzlab.io.local:5000/easzlab/pause:3.9"

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

DOCKER_STORAGE_DIR: "/var/lib/docker"

- "http://easzlab.io.local:5000"

- "https://{{ HARBOR_REGISTRY }}"

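# certificate config for the k8s master nodes; extra IPs and domain names can be added (for example a public IP and domain)

# pod subnet mask length on each node (determines the maximum number of pod IPs a node can allocate)

# if flannel runs with --kube-subnet-mgr, it reads this setting to assign a pod subnet to each node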

# Flannel not using SubnetLen json key · Issue #847 · flannel-io/flannel · GitHub

KUBELET_ROOT_DIR: "/var/lib/kubelet"

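# resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)

# see templates/kubelet-config.yaml.j2 for the values

# upstream k8s advises against enabling system-reserved casually, unless long-term monitoring has shown you the system's resource usage

# the reservation also needs to grow as system uptime increases; see templates/kubelet-config.yaml.j2 for the values

# the system reservation assumes a 4c/8g VM with a minimal install of system services; on high-performance physical machines it can be increased

# also, apiserver and other components briefly use more resources during cluster installation; reserving at least 1g of memory is recommended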

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

# ------------------------------------------- flannel

# [flannel] flannel backend: "host-gw", "vxlan", etc.

# ------------------------------------------- calico

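# [calico] IPIP tunnel mode, one of [Always, CrossSubnet, Never]. Across subnets use Always or CrossSubnet (on public clouds Always is the simplest; otherwise the cloud's network configuration has to be changed, see each provider's documentation)

# CrossSubnet is a hybrid of tunneling and BGP routing and can improve network performance; within a single subnet, Never is sufficient.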

CALICO_IPV4POOL_IPIP: "Always"

# [calico] host IP used by calico-node; BGP neighbors peer over this address. It can be set manually or auto-detected

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico network backend: brid, vxlan, none ('brid' is the spelling used in this config; Calico itself names this backend 'bird')

CALICO_NETWORKING_BACKEND: "brid"

# [calico] whether calico uses route reflectors

# CALICO_RR_NODES: nodes that act as route reflectors; defaults to the cluster master nodes if unset

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

# [calico] supported calico versions: ["3.19", "3.23"]

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium

cilium_connectivity_check: true

cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn

# [kube-ovn] node for the OVN DB and OVN Control Plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# ------------------------------------------- kube-router

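# [kube-router] public clouds impose restrictions and generally need ipinip always on; in your own environment this can be set to "subnet"

# [kube-router] NetworkPolicy support switch

# [kube-router] kube-router image version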

LOCAL_DNS_CACHE: "169.254.20.10"

dashboardMetricsScraperVer: "v1.0.8"

nfs_provisioner_namespace: "kube-system"

nfs_storage_class: "managed-nfs-storage"

network_check_schedule: "*/5 * * * *"

HARBOR_DOMAIN: "harbor.easzlab.io.local"

HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'
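One comment in config.yml above notes that the pod subnet mask length on each node determines how many pod IPs the node can allocate. The arithmetic is 2^(32 - mask length) for IPv4. A quick sketch; the helper name is hypothetical, and the result is an upper bound on addresses rather than a guaranteed pod count.

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of IPv4 addresses in a per-node pod subnet
# with the given mask length, i.e. 2^(32 - mask_len).
pod_ips_for_mask() {
  echo $(( 1 << (32 - $1) ))
}

for len in 24 25 26; do
  echo "/$len -> $(pod_ips_for_mask "$len") addresses"
done
```

A /24 per node gives 256 addresses, so lengthening the mask trades pods per node for more nodes within the same cluster CIDR.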

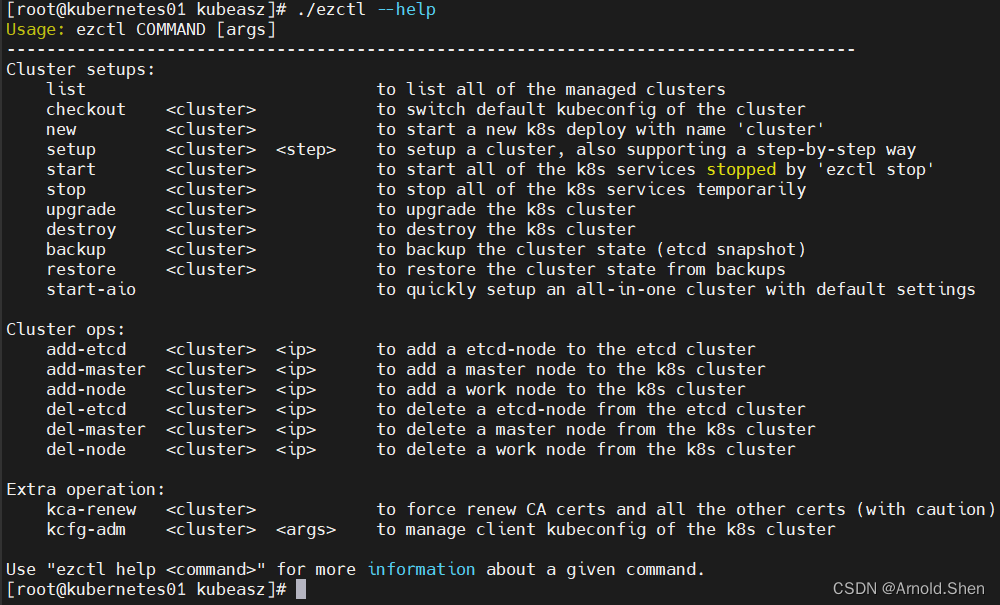

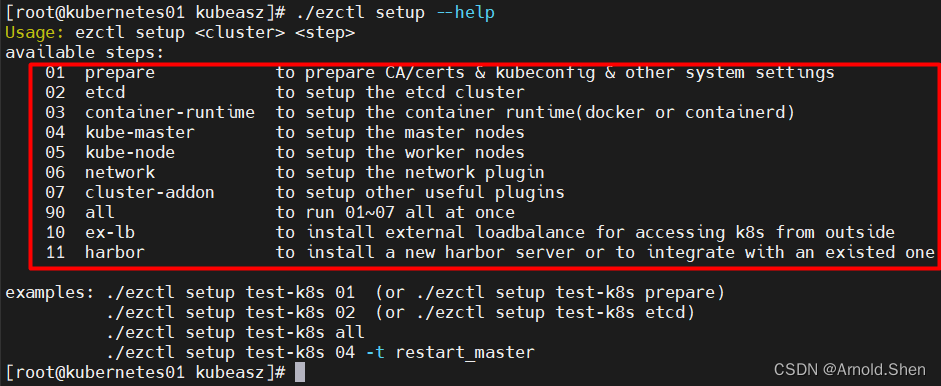

./ezctl setup --help    # shows what each step installs

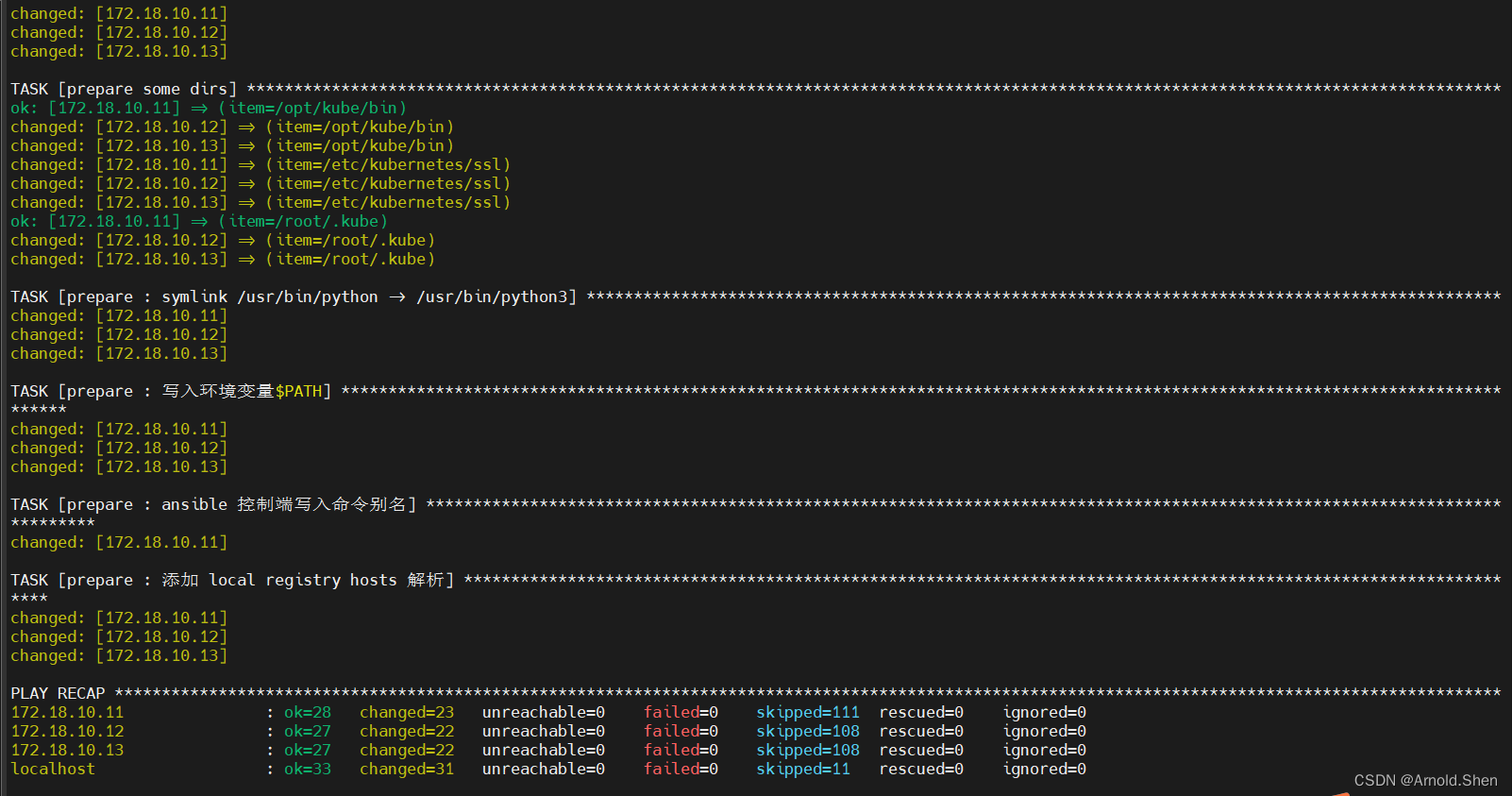

./ezctl setup k8s1.27.1-cluster 01    # step 01: initialize the system environment

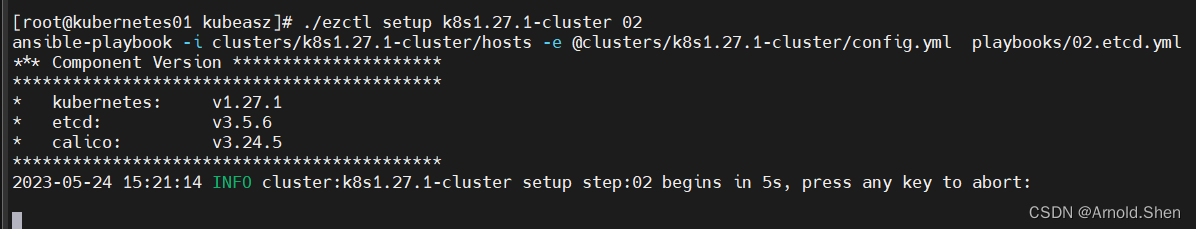

./ezctl setup k8s1.27.1-cluster 02    # step 02: install the etcd cluster

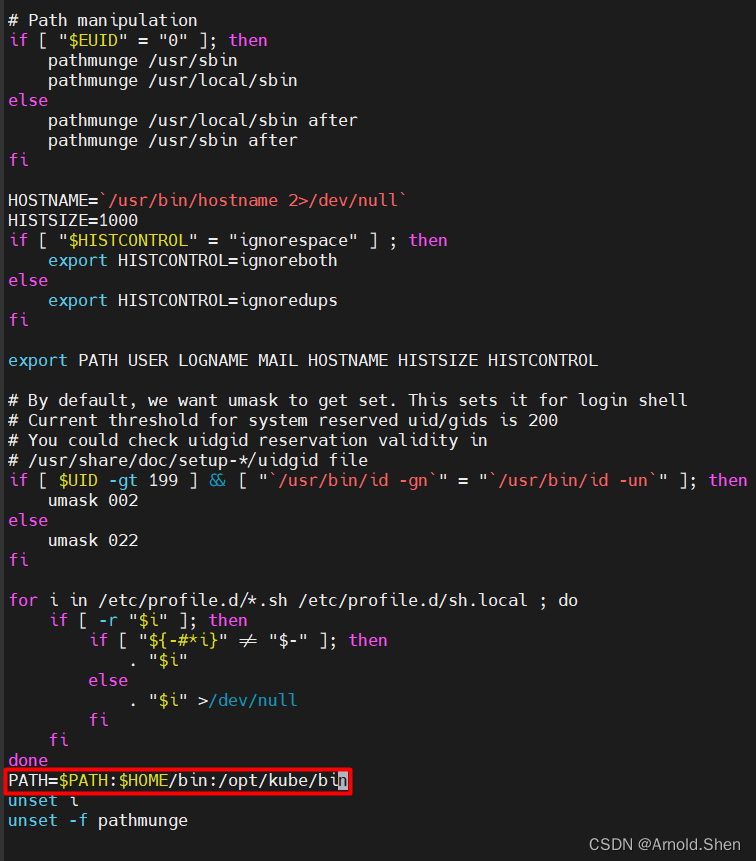

PATH=$PATH:$HOME/bin:/opt/kube/bin

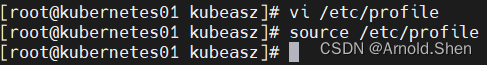

export NODE_IPS="172.18.10.11 172.18.10.12 172.18.10.13"

for ip in ${NODE_IPS}; do ETCDCTL_API=3 etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done
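As an alternative to looping, etcdctl's `--endpoints` flag accepts a comma-separated list, so all members can be checked in one call. The sketch below only builds that list; the helper name `etcd_endpoints` is hypothetical, and the certificate paths in the commented command are the ones used in the loop above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a space-separated IP list into the comma-separated
# https endpoint list that etcdctl's --endpoints flag accepts.
etcd_endpoints() {
  local ip out=""
  for ip in $1; do
    out="${out:+$out,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

NODE_IPS="172.18.10.11 172.18.10.12 172.18.10.13"
echo "endpoints: $(etcd_endpoints "$NODE_IPS")"

# On a node that holds the etcd certificates:
# ETCDCTL_API=3 etcdctl --endpoints="$(etcd_endpoints "$NODE_IPS")" \
#   --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem \
#   --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health
```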

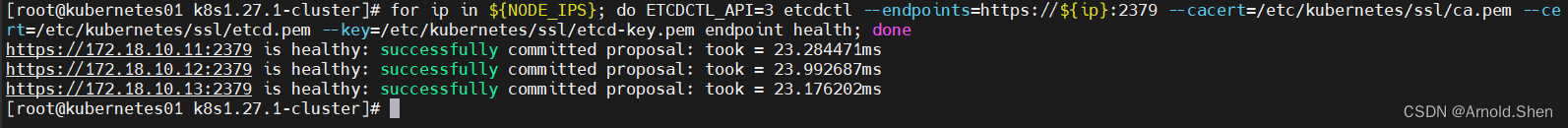

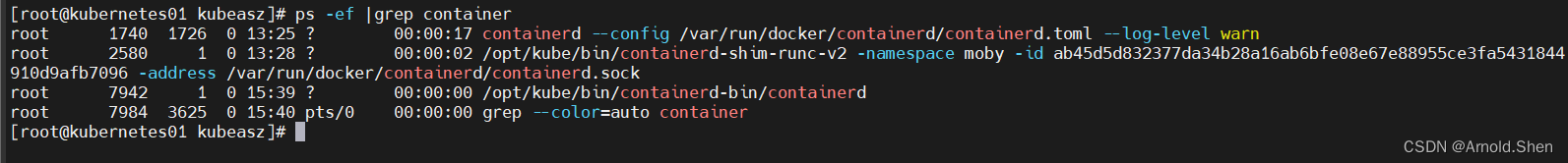

./ezctl setup k8s1.27.1-cluster 03    # step 03: install the container runtime

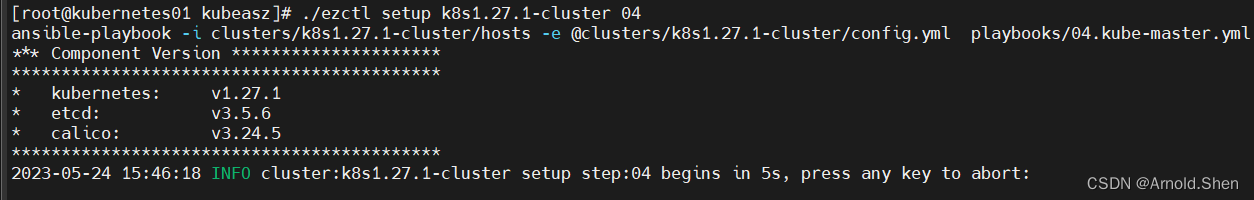

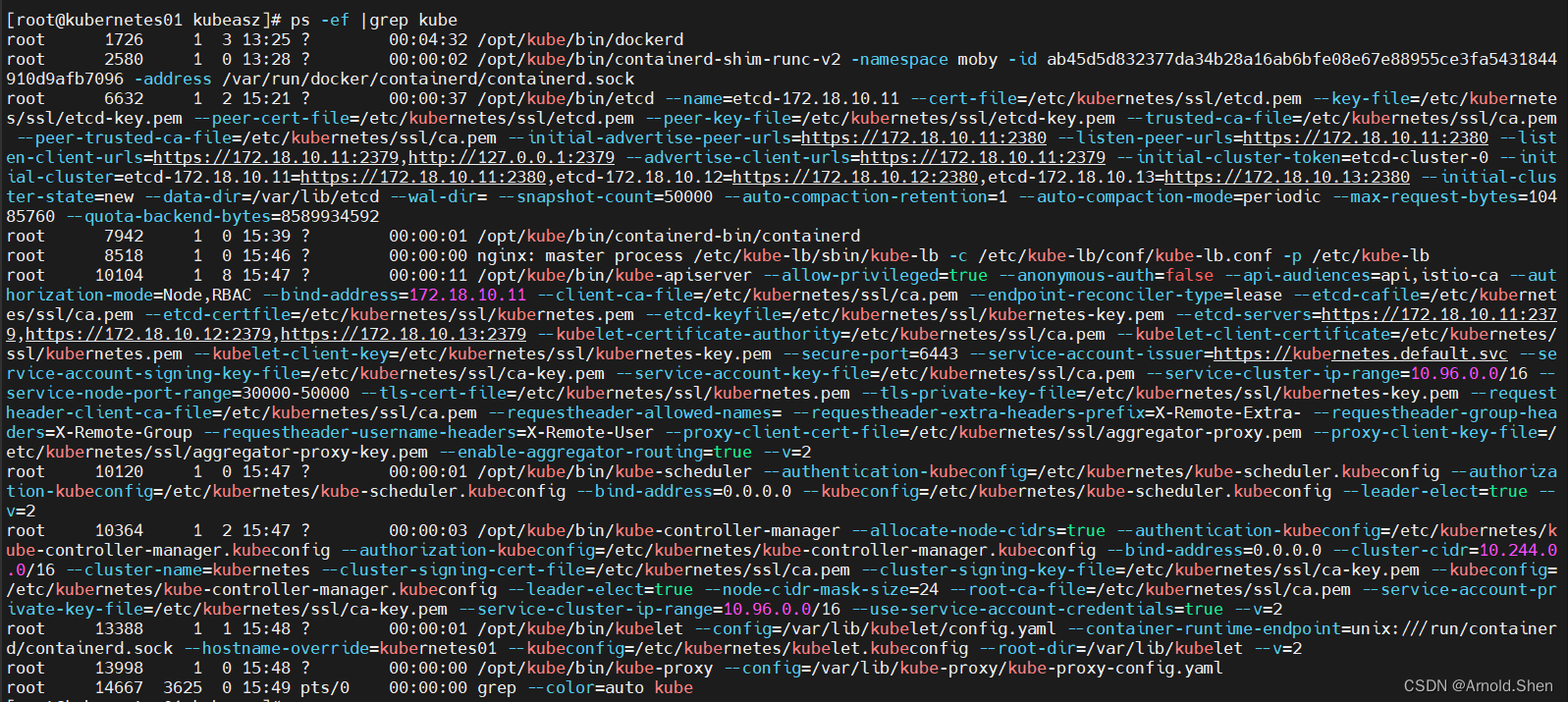

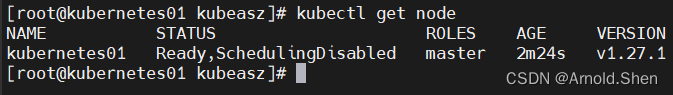

./ezctl setup k8s1.27.1-cluster 04    # step 04: install the master

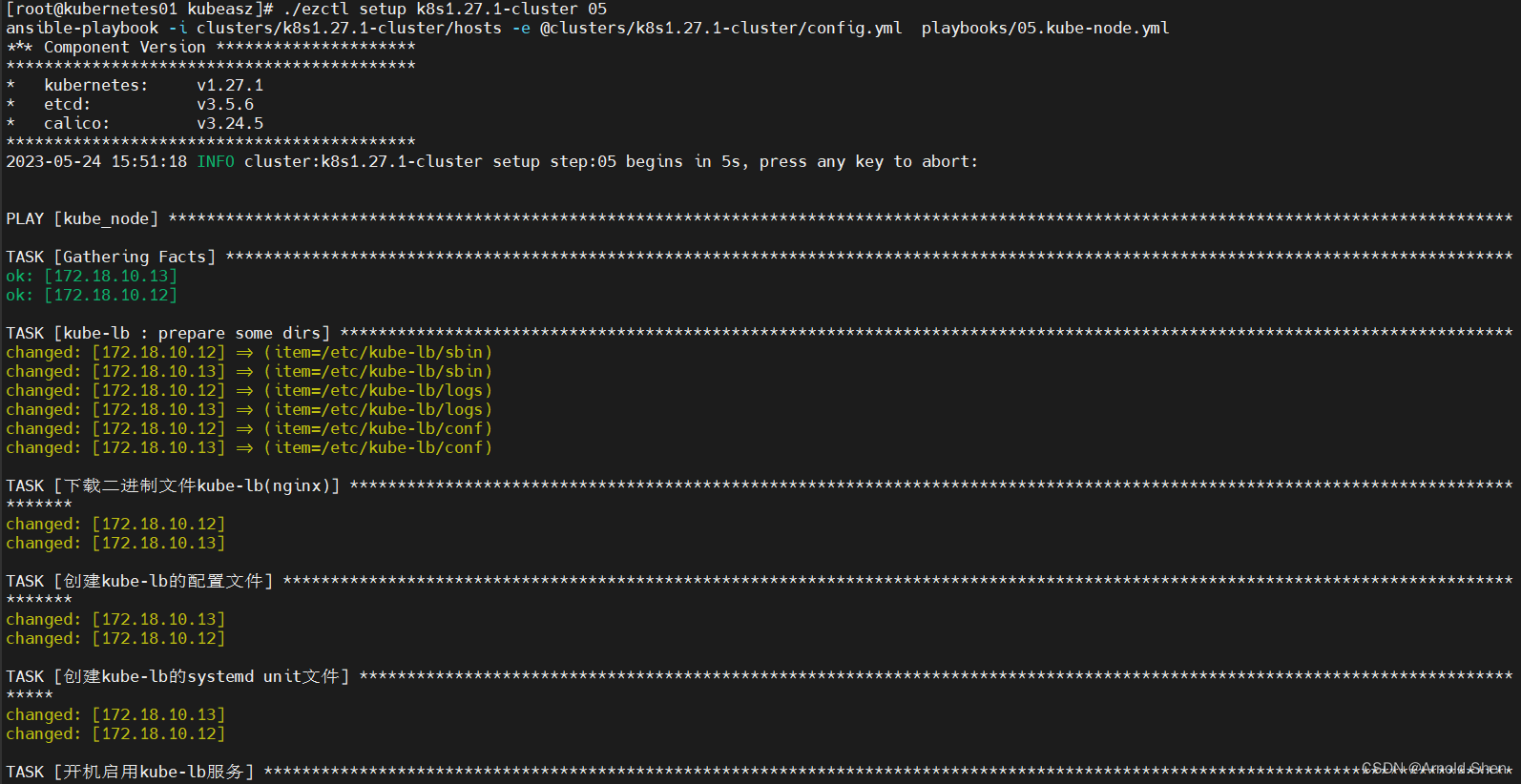

./ezctl setup k8s1.27.1-cluster 05    # step 05: install the nodes

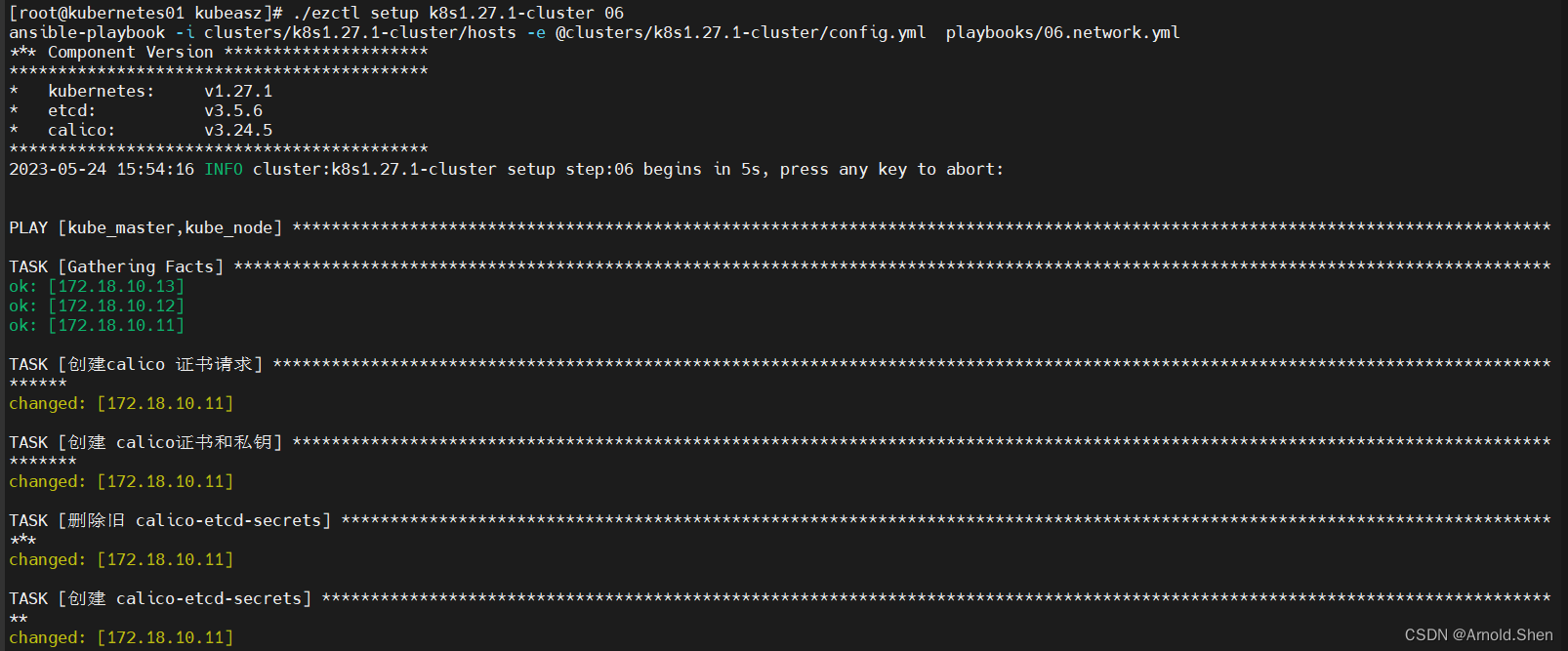

./ezctl setup k8s1.27.1-cluster 06    # step 06: install the network plugin

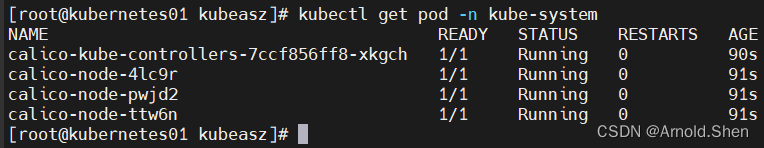

kubectl get pod -n kube-system

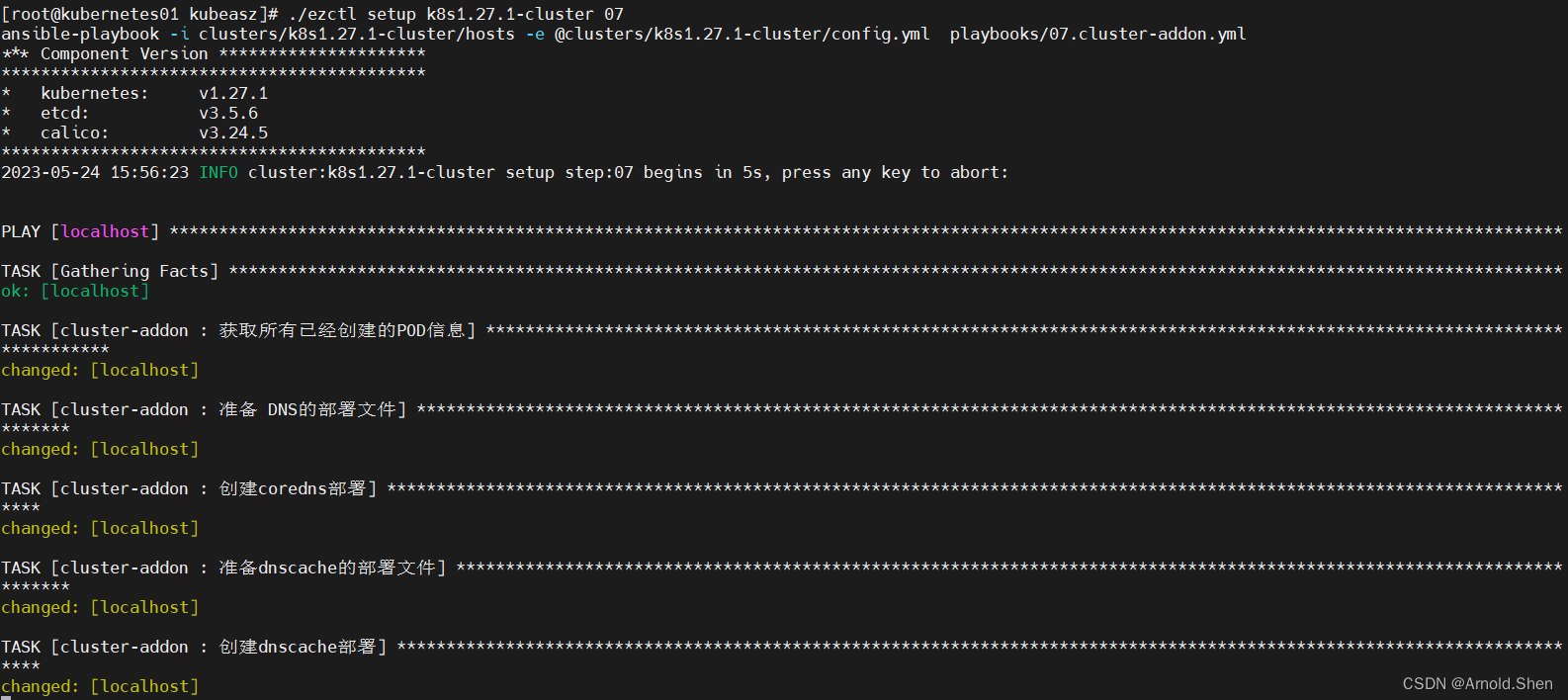

./ezctl setup k8s1.27.1-cluster 07    # step 07: install the other cluster add-ons
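The seven setup steps above are run one at a time so each can be verified. If you prefer to chain them, a wrapper along these lines stops at the first failure. It is a sketch only: `run_setup_steps` and the `EZCTL` override variable are hypothetical names, and the only ezctl usage assumed is the `setup <cluster> <step>` form shown in this post.

```shell
#!/usr/bin/env bash
# EZCTL is a hypothetical override so the loop can be dry-run; defaults to ./ezctl.
EZCTL="${EZCTL:-./ezctl}"

# Hypothetical helper: run the given setup steps in order, stop at the first failure.
run_setup_steps() {
  local cluster="$1" step
  shift
  for step in "$@"; do
    echo ">>> setup step ${step}"
    "$EZCTL" setup "$cluster" "$step" || {
      echo "step ${step} failed, stopping" >&2
      return 1
    }
  done
}

# From /etc/kubeasz on the deploy node:
# run_setup_steps k8s1.27.1-cluster 01 02 03 04 05 06 07
```

Setting `EZCTL=echo` before calling the function prints the commands instead of running them, which is a cheap way to check the step list.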

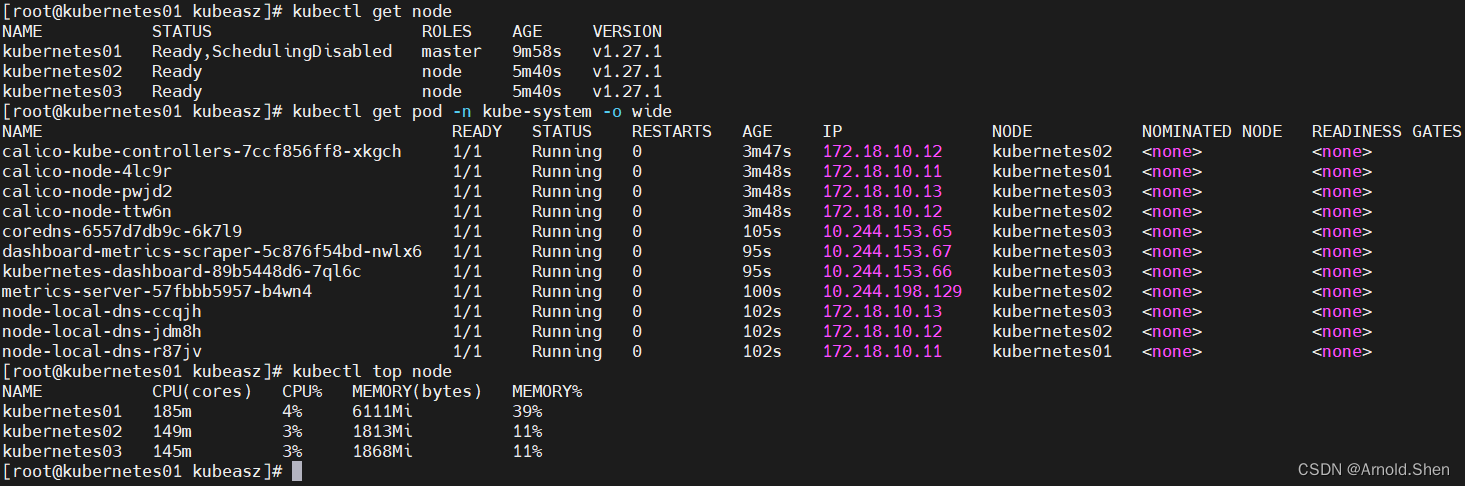

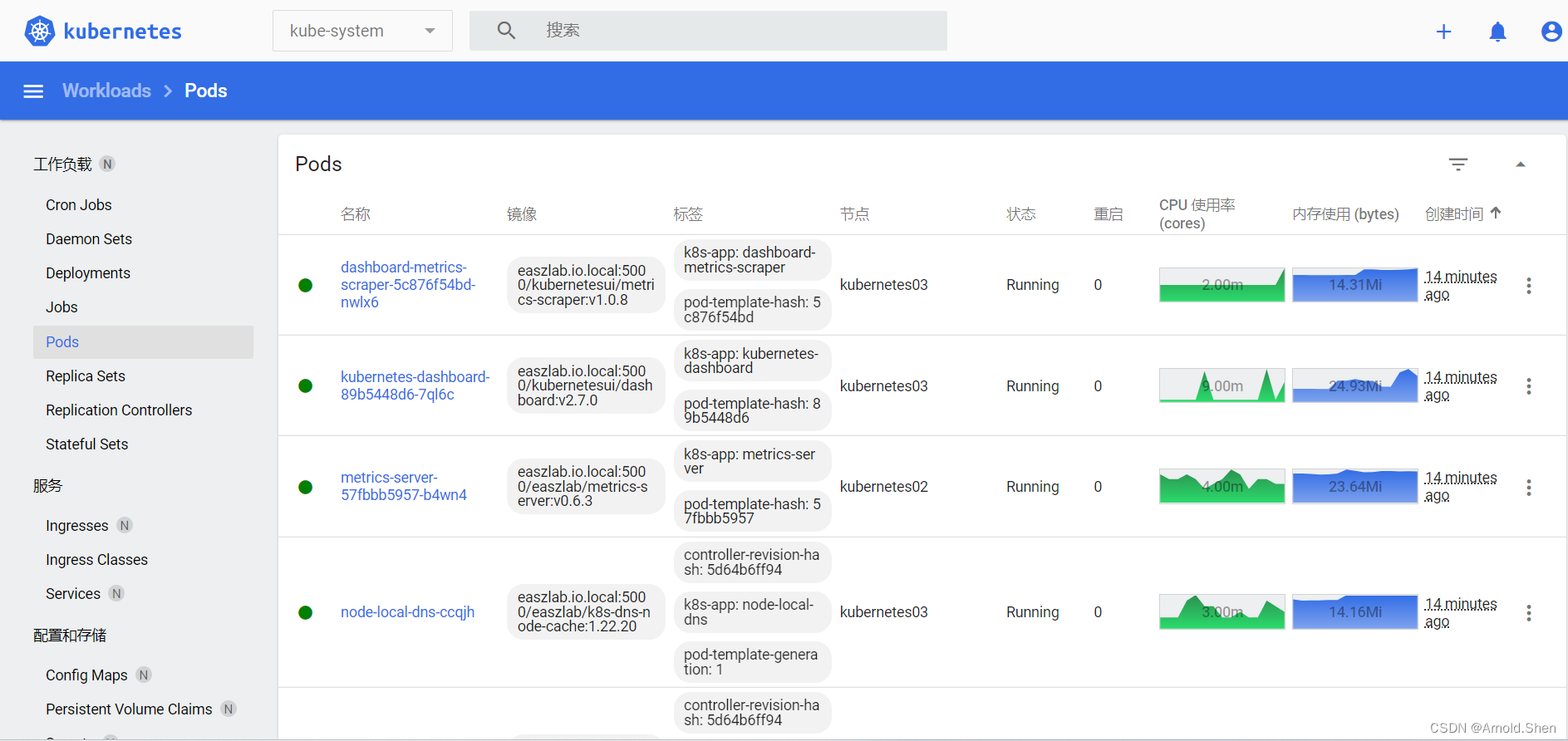

kubectl get pod -n kube-system

kubectl get pod -n kube-system -o wide

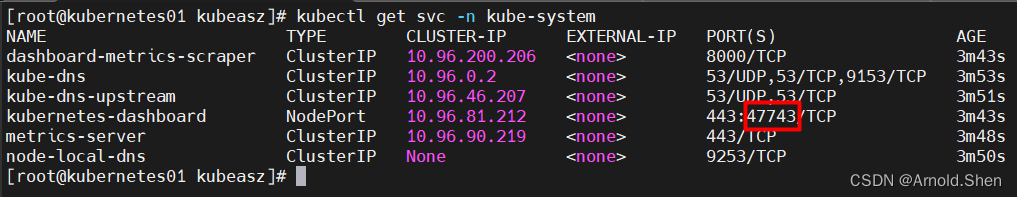

kubectl get svc -n kube-system

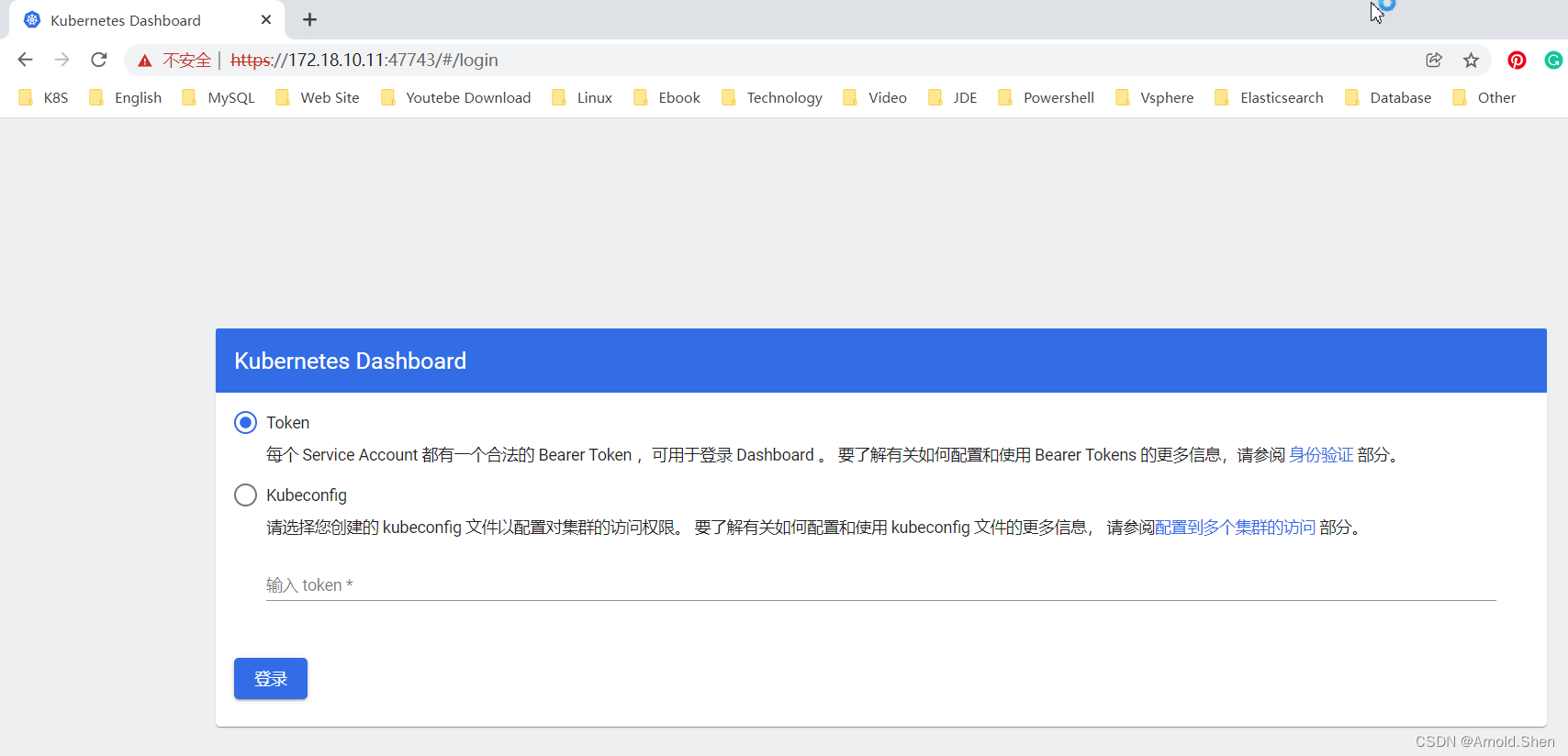

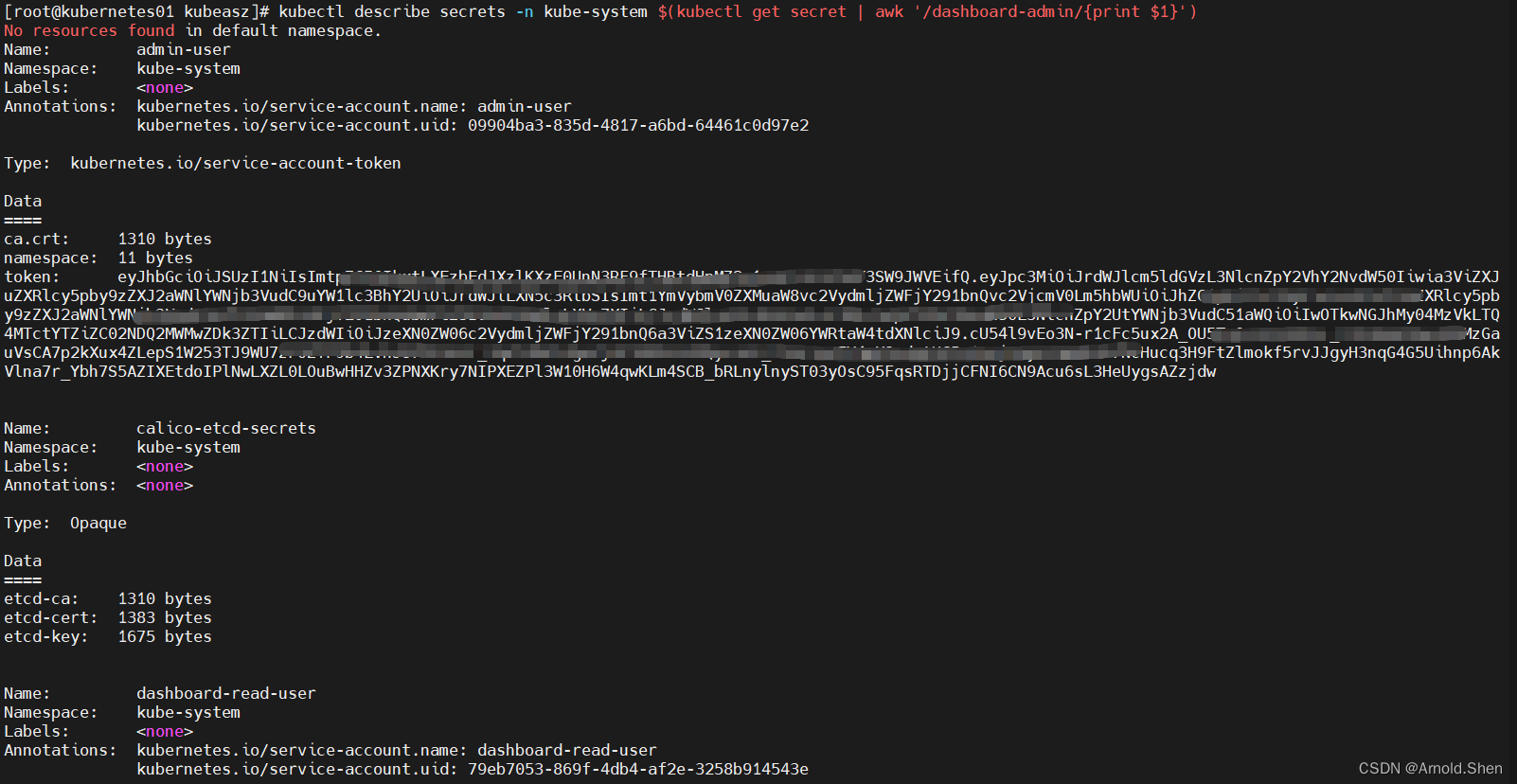

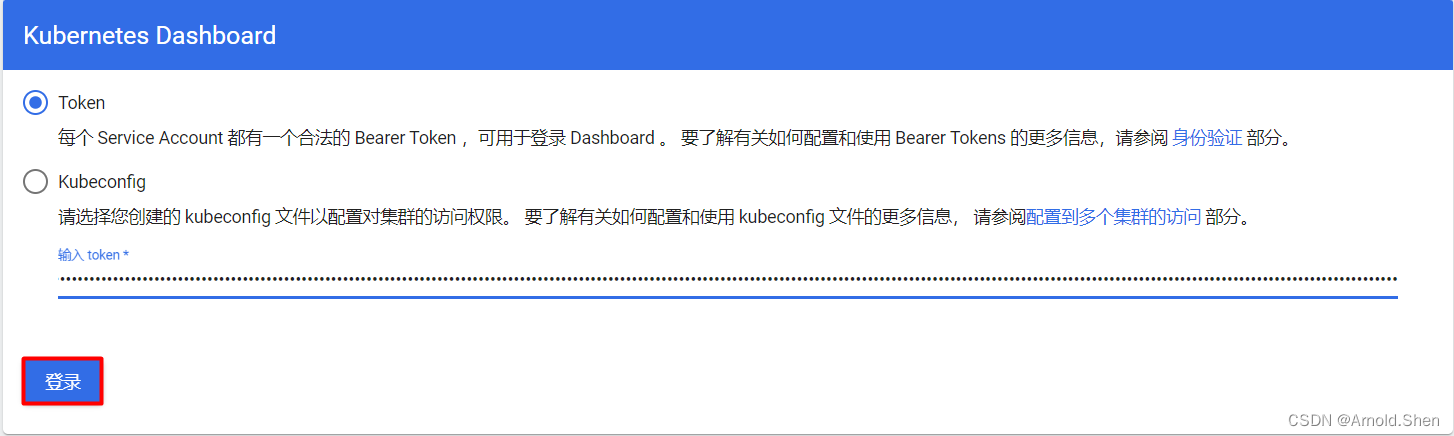

kubectl describe secrets -n kube-system $(kubectl get secret -n kube-system | awk '/dashboard-admin/{print $1}')

eyJhbGciOiJSUzI1NiIsImtpZCI6IkxtLXEzbEdJXzlKXzE0UnN3RF9fTHBtdHpMZ2o1eHFTeDJrb0Y3SW9JWVEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwOTkwNGJhMy04MzVkLTQ4MTctYTZiZC02NDQ2MWMwZDk3ZTIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.cU54l9vEo3N-r1cFc5ux2A_OU5Te0-PZzR9lN-_TYK4QnRJGwJOSMzGauVsCA7p2kXux4ZLepS1W253TJ9WU7zFOZfP9b4LvhJU7M7dv6T_9qZ93kK14ga7jHY38M2d-KMQycaU4_f8nF3oZFY4mX3sdgtHCPu1AojtWyLF8mMA367NcHucq3H9FtZlmokf5rvJJgyH3nqG4G5Uihnp6AkVlna7r_Ybh7S5AZIXEtdoIPlNwLXZL0LOuBwHHZv3ZPNXKry7NIPXEZPl3W10H6W4qwKLm4SCB_bRLnylnyST03yOsC95FqsRTDjjCFNI6CN9Acu6sL3HeUygsAZzjdw
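The token above is a JWT, and its middle segment is base64url-encoded JSON. Decoding it shows which service account it belongs to; for the token printed here that is `admin-user` in `kube-system`, so if the `dashboard-admin` pattern in the command above matches nothing, `admin-user` may be the name to search for. A minimal sketch with a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
jwt_payload() {
  local seg
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it to a multiple of 4 characters.
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}

# Usage, with TOKEN holding the string printed by kubectl:
# jwt_payload "$TOKEN"
```

This only decodes the claims; it does not verify the signature.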

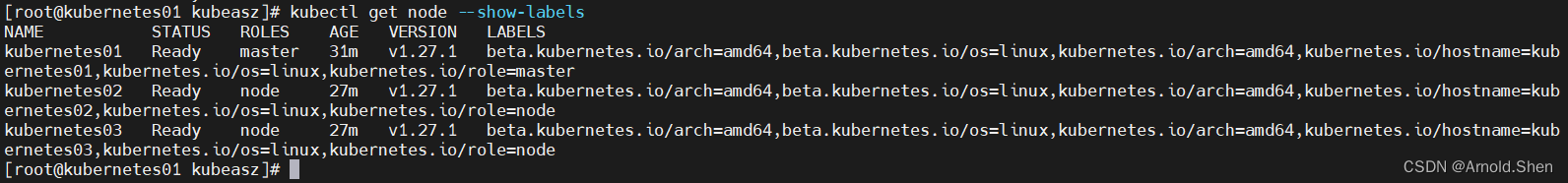

kubectl get node --show-labels

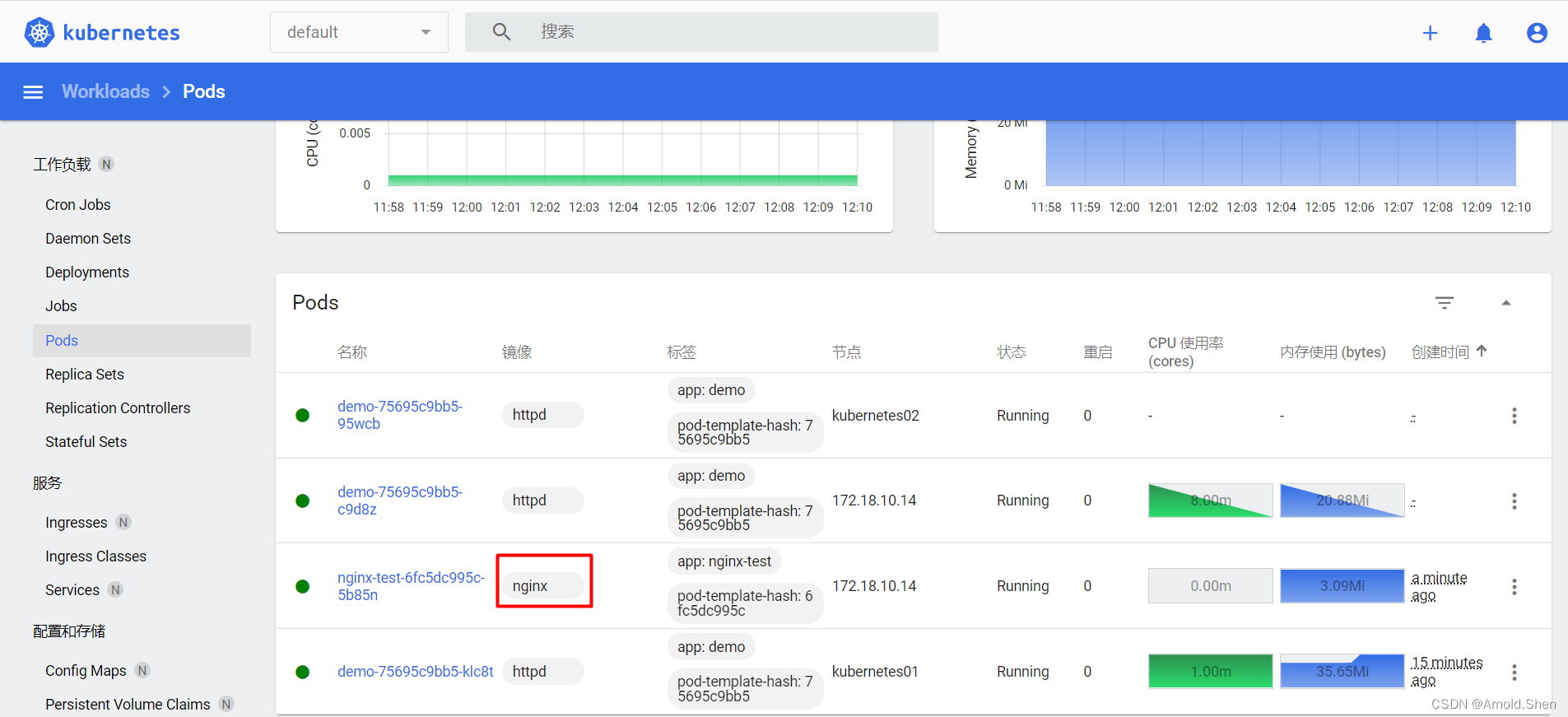

Add Kubernetes04 to the cluster as a worker node:

./ezctl add-node k8s1.27.1-cluster 172.18.10.14

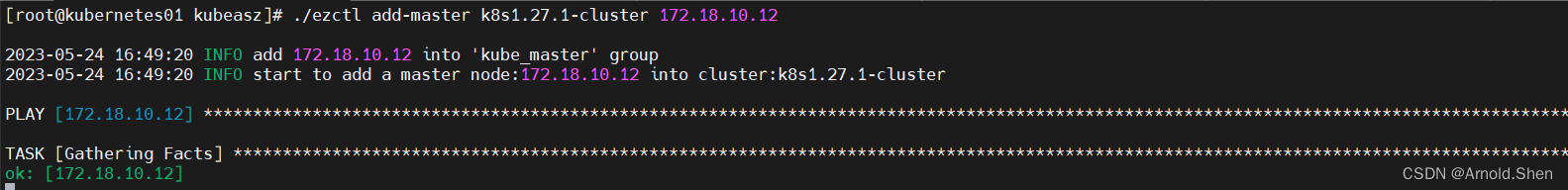

./ezctl add-master k8s1.27.1-cluster 172.18.10.12

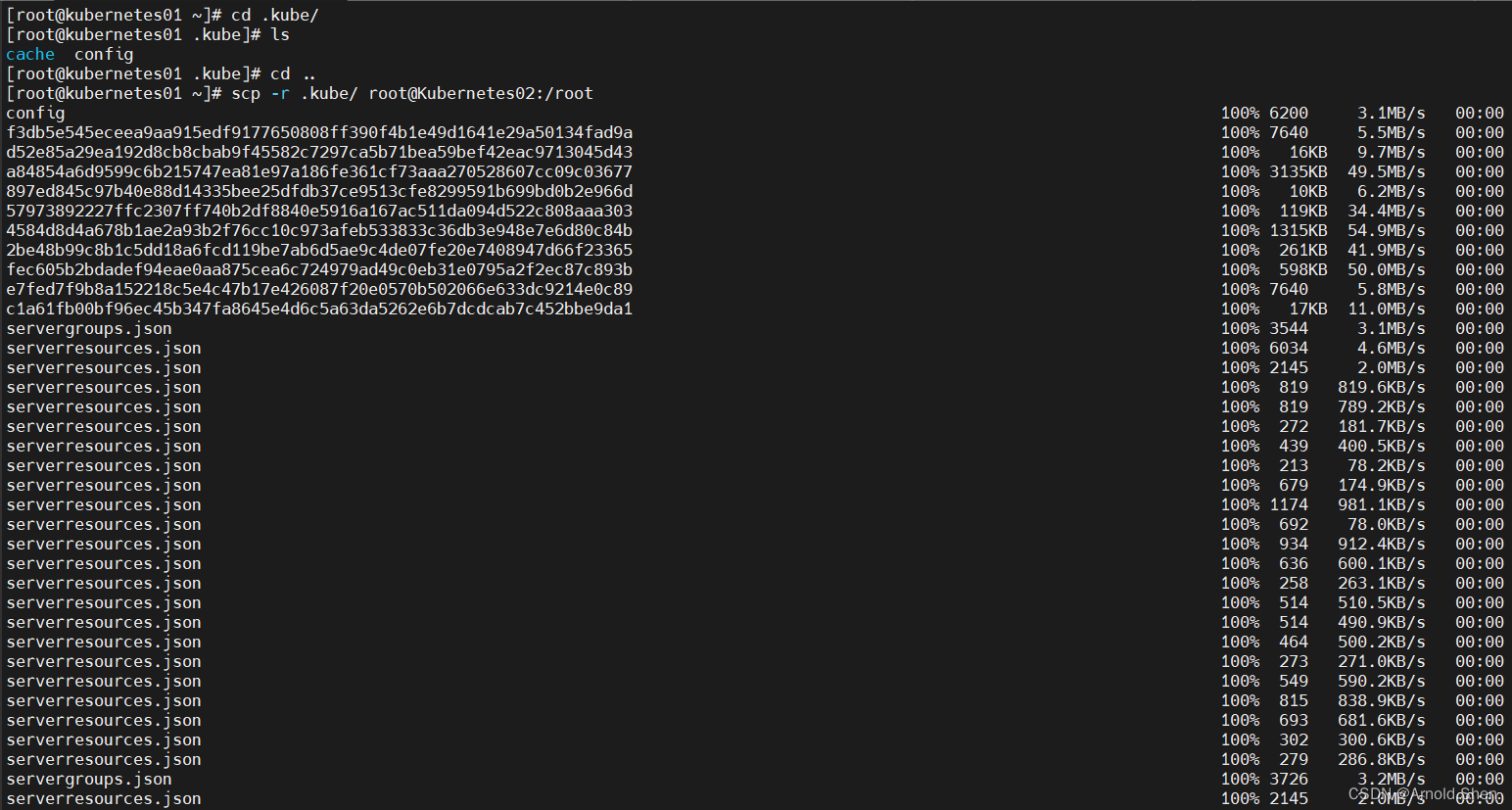

Copy the files from Kubernetes01 to Kubernetes02:

scp -r .kube/ root@Kubernetes02:/root

scp /etc/profile root@Kubernetes02:/root/

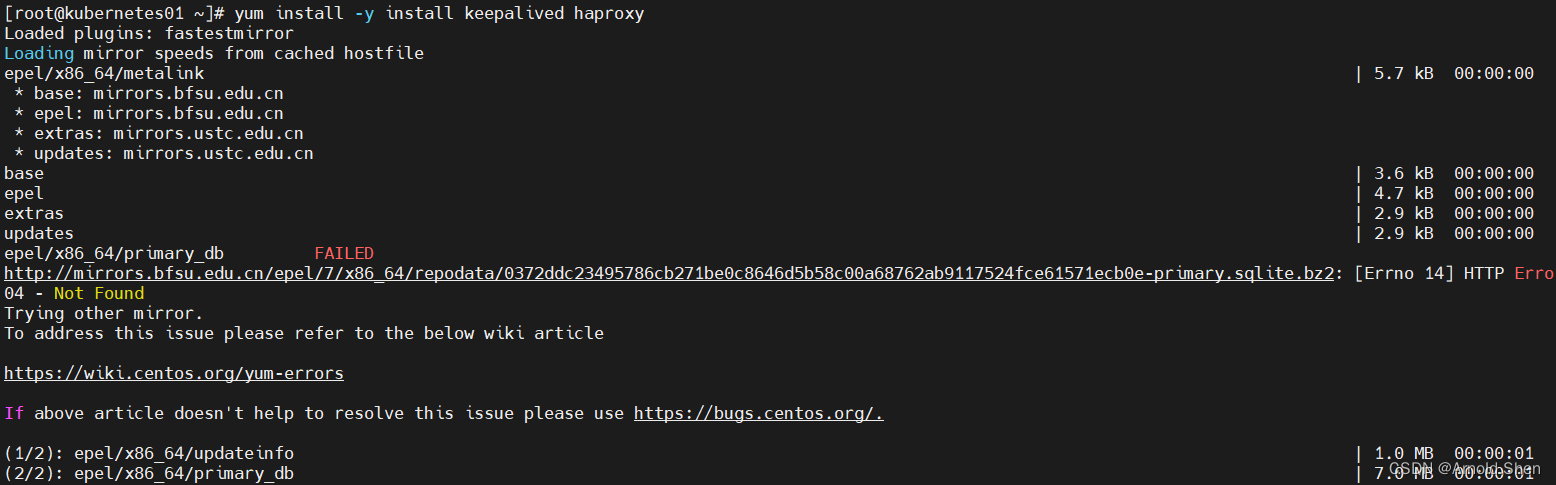

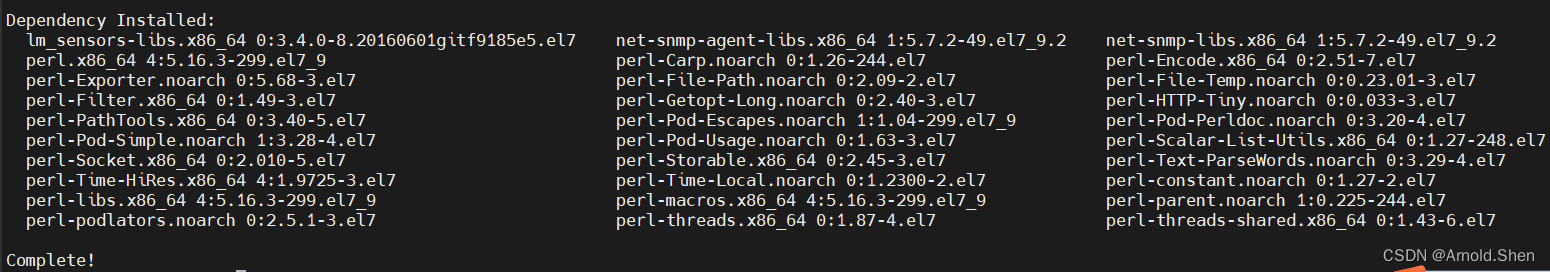

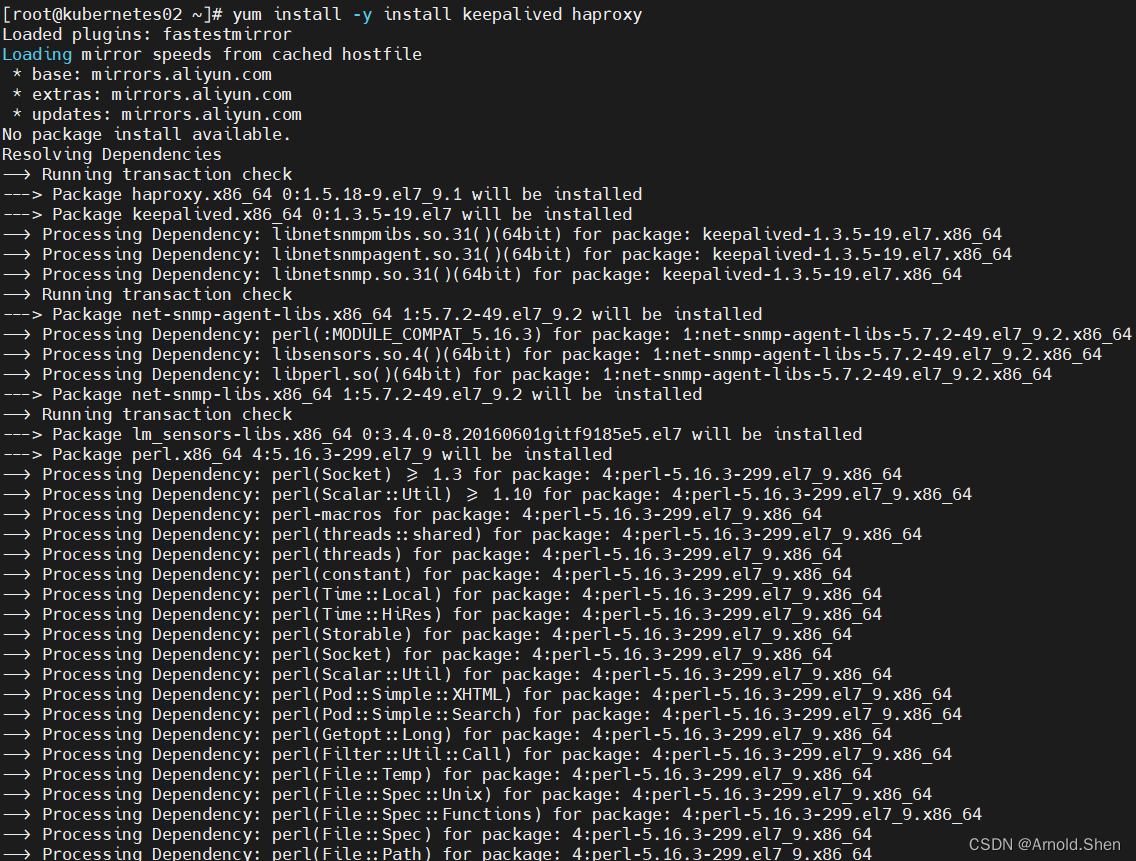

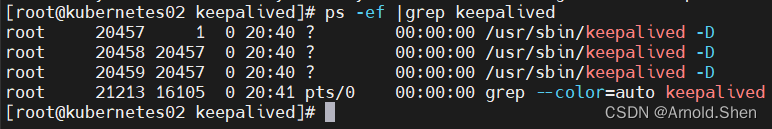

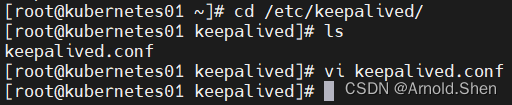

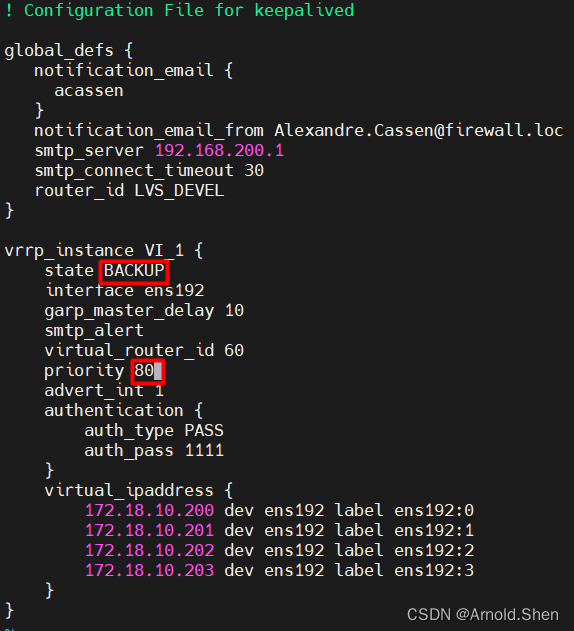

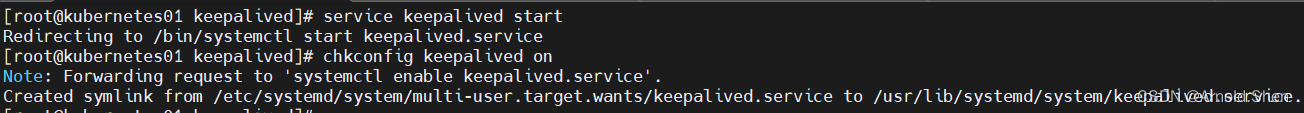

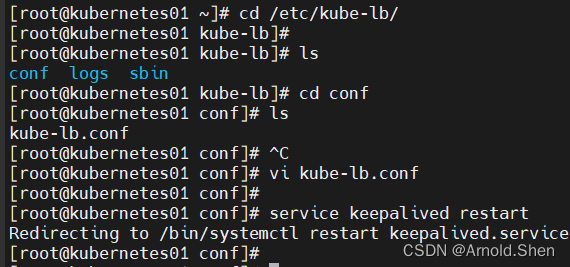

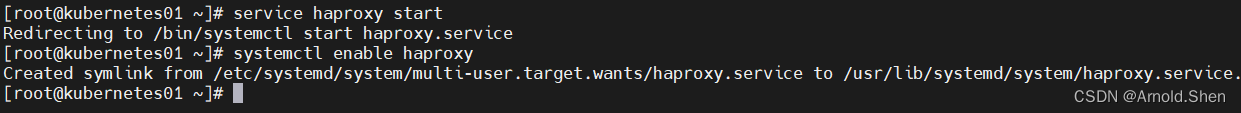

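Initialize the system environment (install on all master nodes). In this setup Kubernetes02 acts as the keepalived MASTER and Kubernetes01 as the BACKUP; adjust the parameters to your own environment.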

yum install -y keepalived haproxy

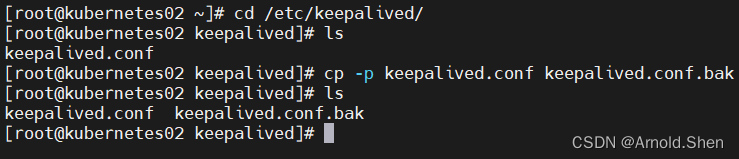

cp -p keepalived.conf keepalived.conf.bak

! Configuration File for keepalived

notification_email_from Alexandre.Cassen@firewall.loc

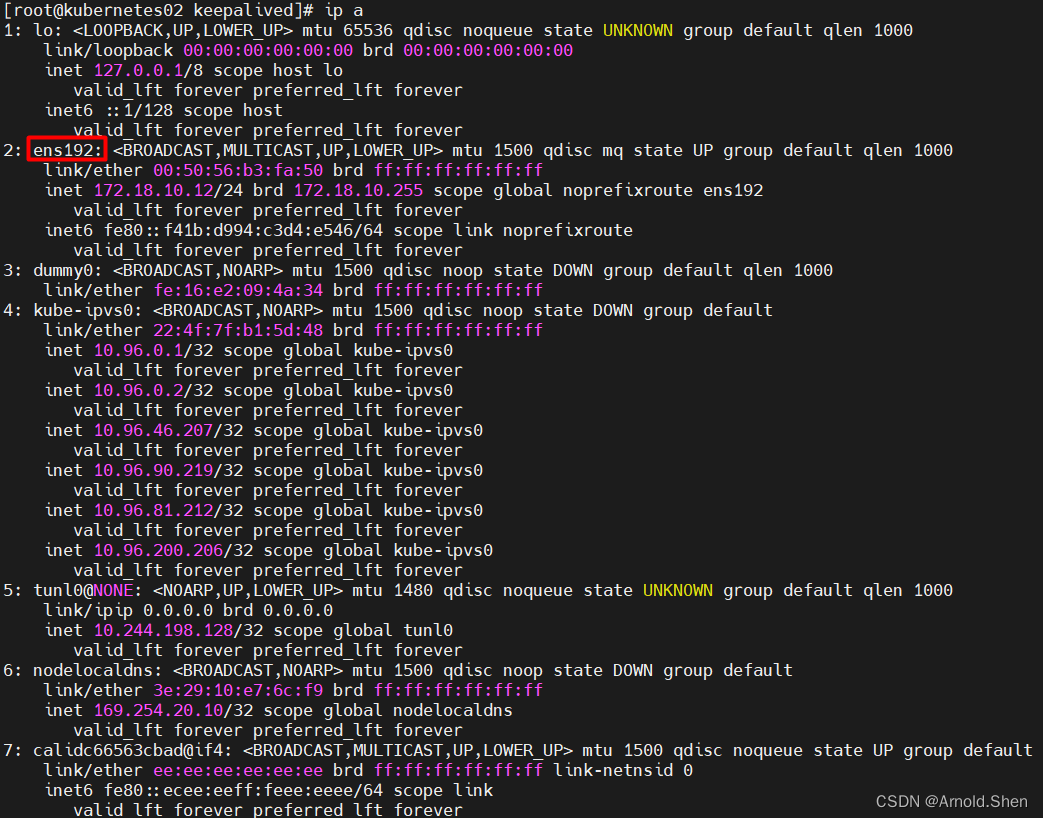

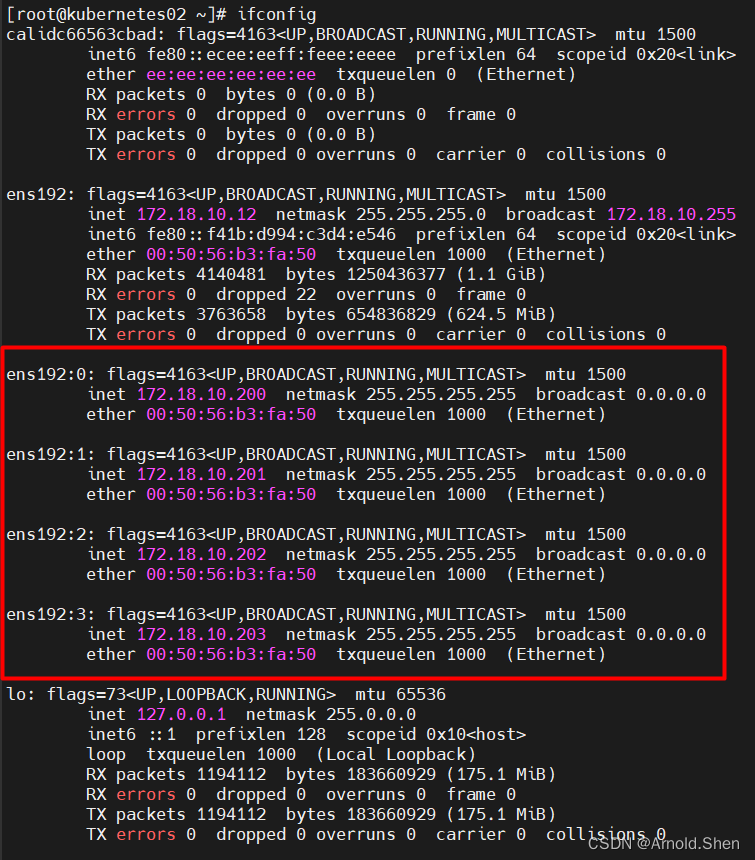

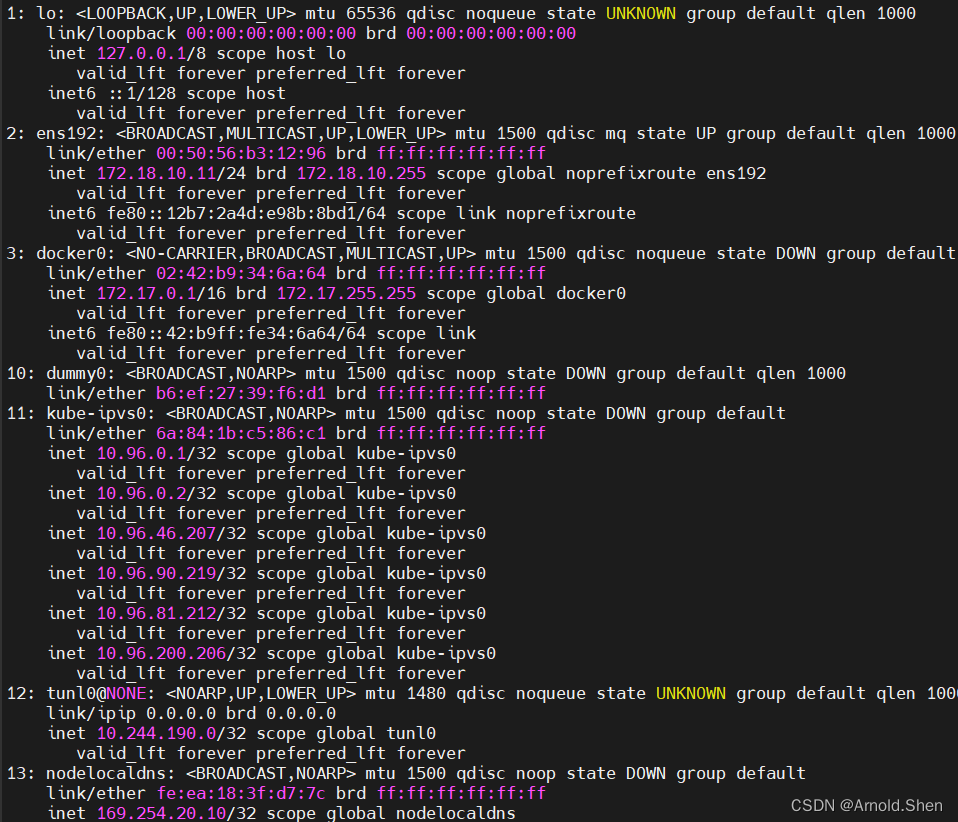

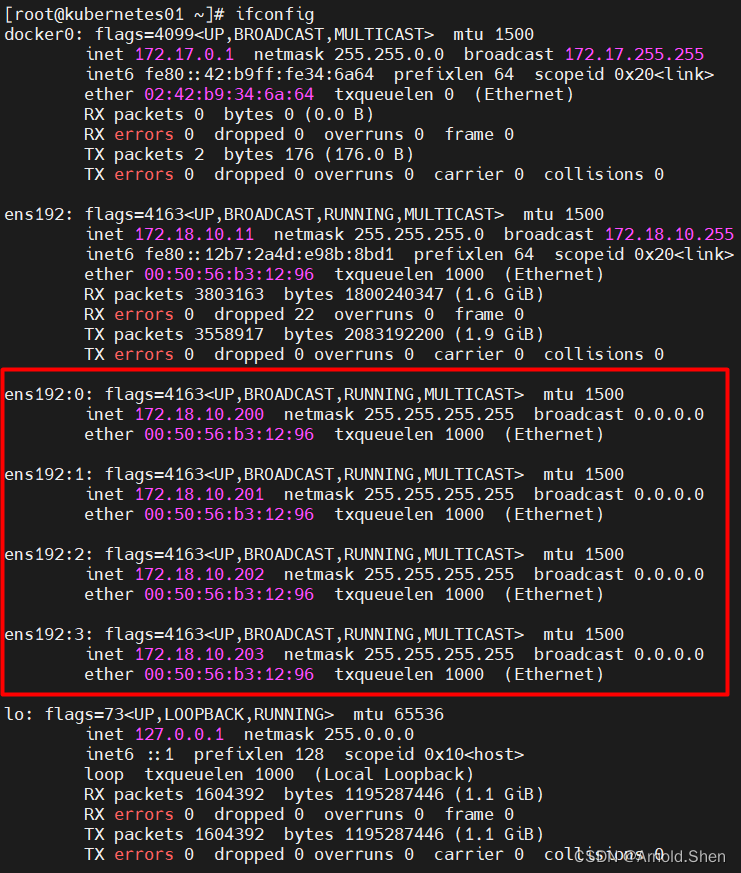

172.18.10.200 dev ens192 label ens192:0

172.18.10.201 dev ens192 label ens192:1

172.18.10.202 dev ens192 label ens192:2

172.18.10.203 dev ens192 label ens192:3

scp keepalived.conf root@Kubernetes01:/etc/keepalived/
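Only fragments of keepalived.conf survive in the text above. For orientation, the sketch below prints a `vrrp_instance` block in the usual keepalived layout, using the VIP line format from the post (`<vip> dev ens192 label ens192:N`). The instance name `VI_1`, `virtual_router_id 51`, `advert_int 1` and the priorities are assumptions, not the author's values, and the authentication block is left out. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a vrrp_instance block.
# Args: state (MASTER|BACKUP), priority, interface, then one or more VIPs.
# virtual_router_id 51 is an assumed value; it must be identical on both nodes.
render_vrrp_instance() {
  local state="$1" priority="$2" iface="$3" vip i=0
  shift 3
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    virtual_ipaddress {
EOF
  for vip in "$@"; do
    printf '        %s dev %s label %s:%d\n' "$vip" "$iface" "$iface" "$i"
    i=$((i + 1))
  done
  printf '    }\n}\n'
}

# MASTER side (Kubernetes02 in this post); the BACKUP would use a lower priority.
render_vrrp_instance MASTER 100 ens192 172.18.10.200 172.18.10.201
```

After copying the file to the BACKUP node, the `state` and `priority` values are the lines that normally differ between the two nodes.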

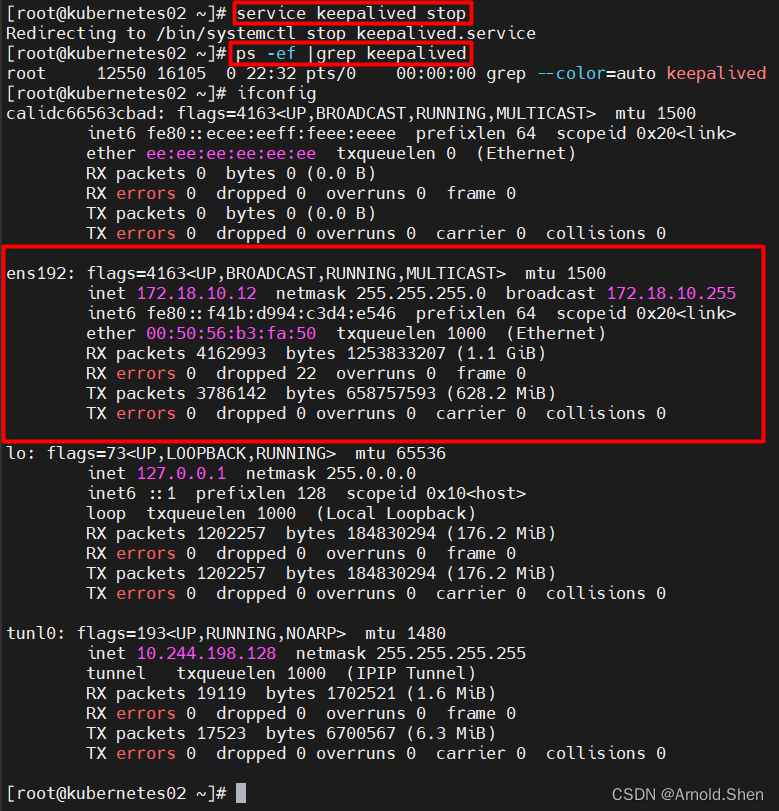

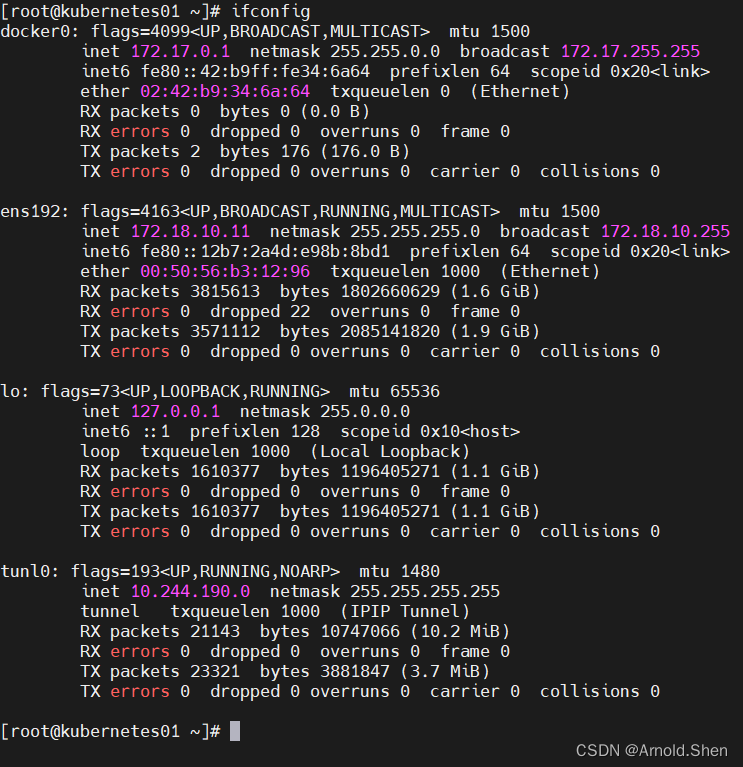

Stop keepalived on Kubernetes02 and the load-balancer VIP floats over to Kubernetes01.

Start keepalived on Kubernetes02 again and the VIP automatically moves back.

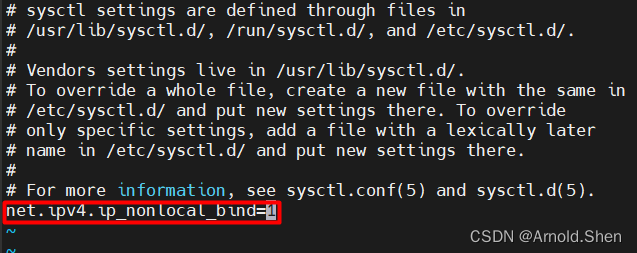

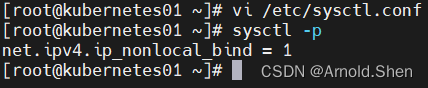

scp /etc/sysctl.conf root@Kubernetes02:/etc/

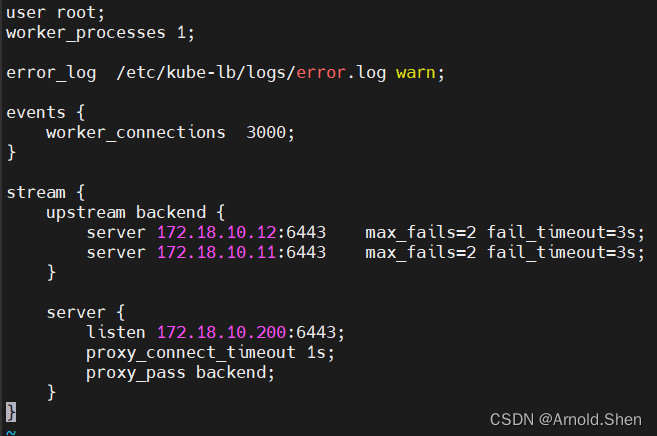

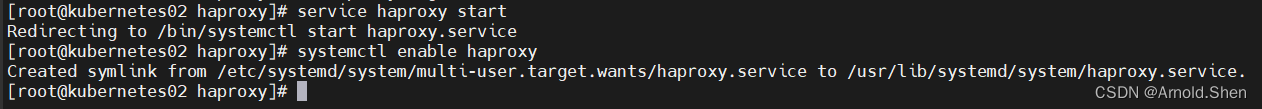

mv haproxy.cfg haproxy.cfg.bak

cat > /etc/haproxy/haproxy.cfg << EOF

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server Kubernetes01 172.18.10.11:6443 check

server Kubernetes02 172.18.10.12:6443 check

scp haproxy.cfg root@Kubernetes01:/etc/haproxy/
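The `server` lines in haproxy.cfg follow one pattern per master: name, IP, the apiserver port 6443, and `check`. When masters are added later (for example with `ezctl add-master`), the lines can be generated rather than typed. A sketch with a hypothetical helper name; the rest of haproxy.cfg is not reproduced here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one haproxy "server" line per name=ip pair,
# pointing at the kube-apiserver port 6443 used in this post.
apiserver_backends() {
  local pair
  for pair in "$@"; do
    printf '    server %s %s:6443 check\n' "${pair%%=*}" "${pair#*=}"
  done
}

apiserver_backends Kubernetes01=172.18.10.11 Kubernetes02=172.18.10.12

# After editing the real file, a syntax check before restarting haproxy:
# haproxy -c -f /etc/haproxy/haproxy.cfg
```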

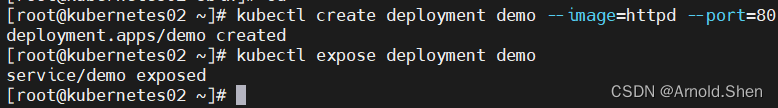

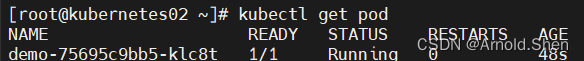

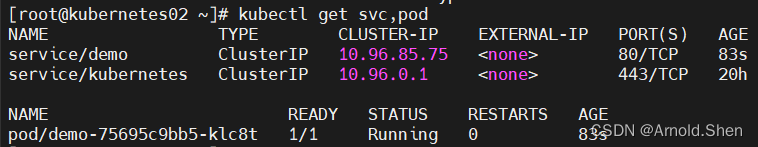

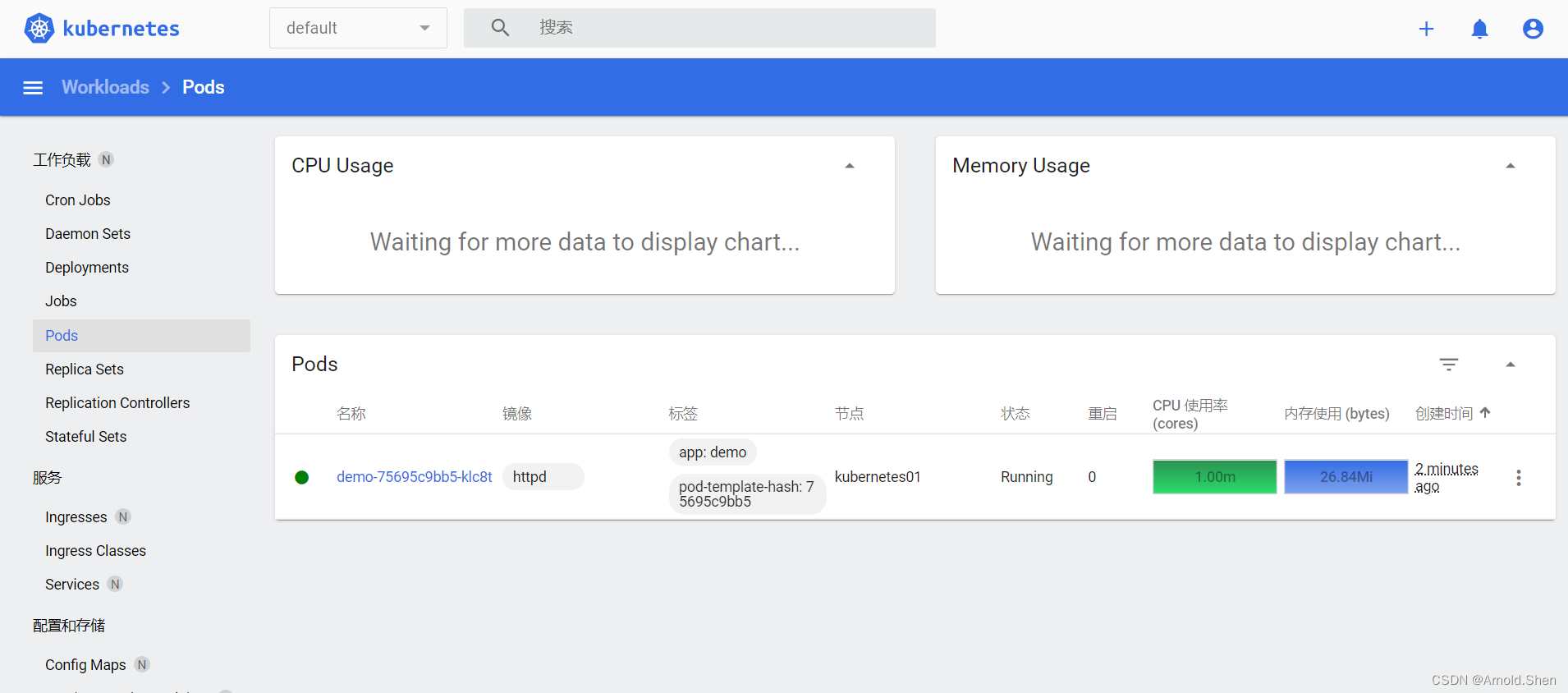

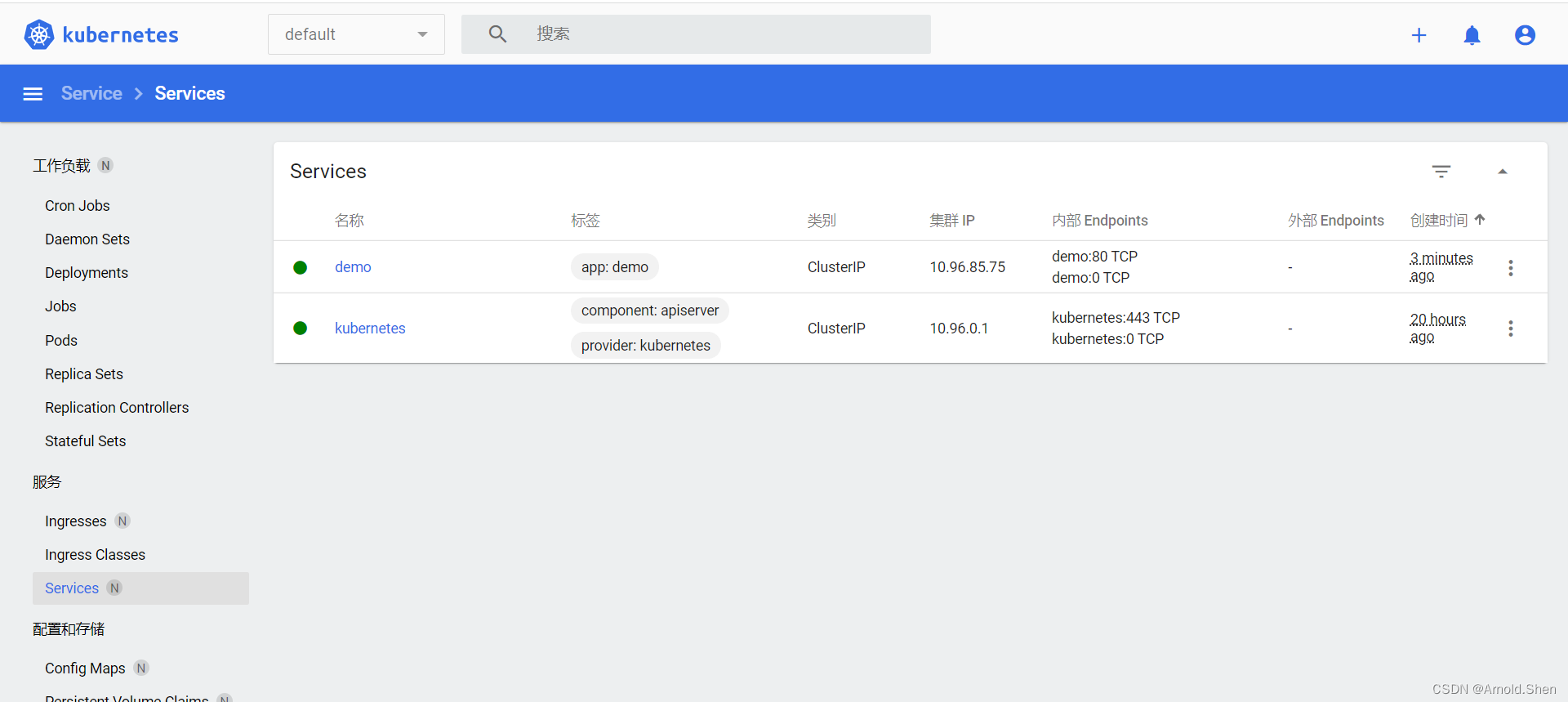

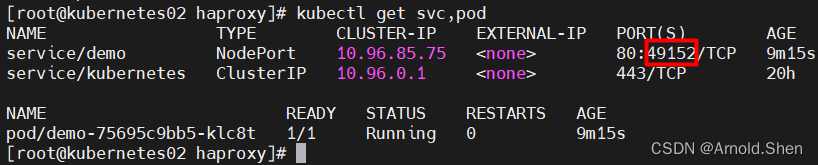

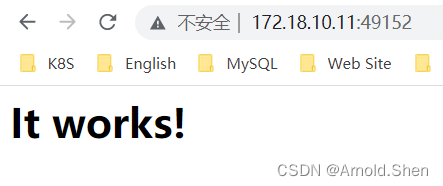

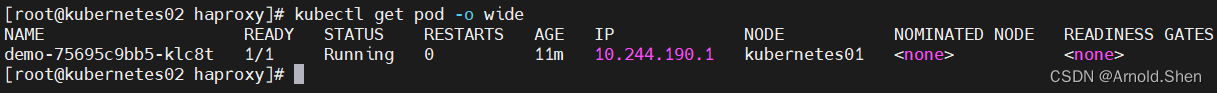

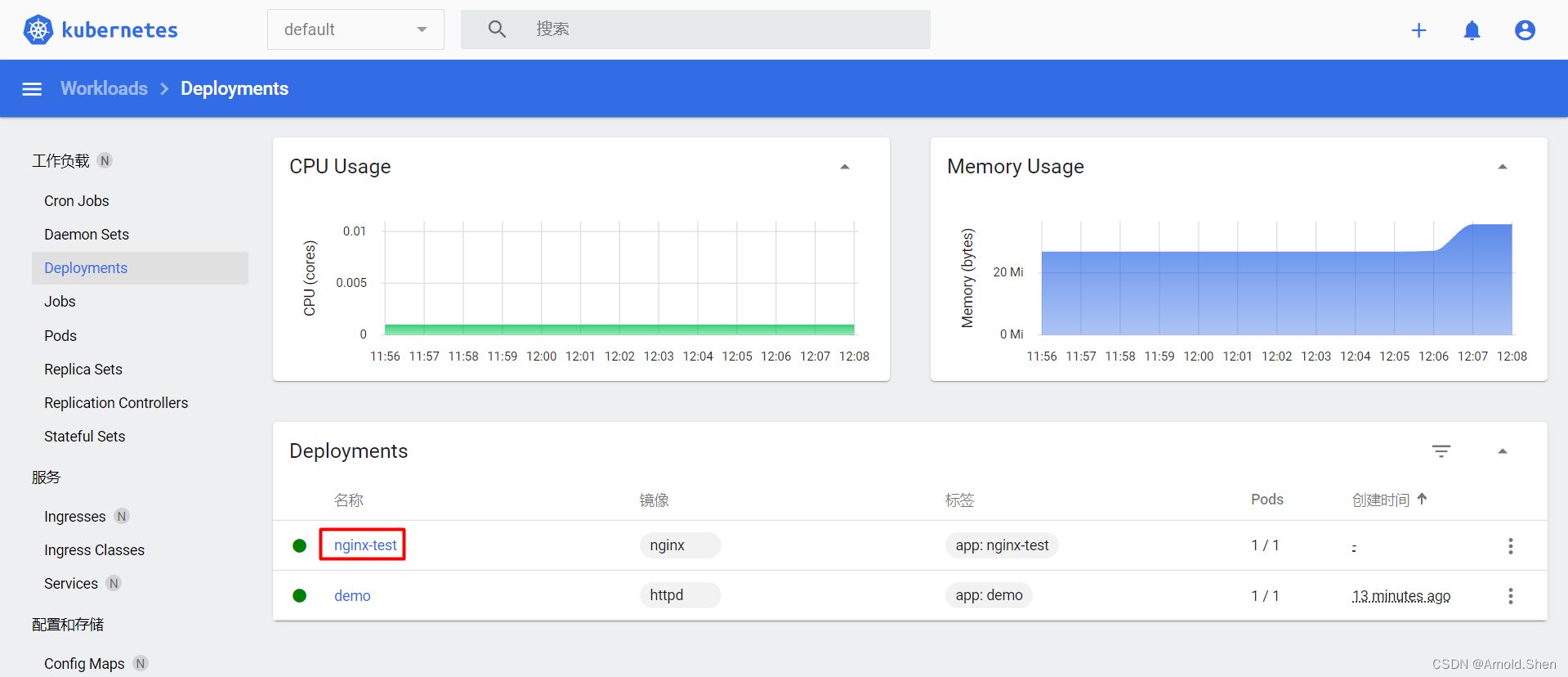

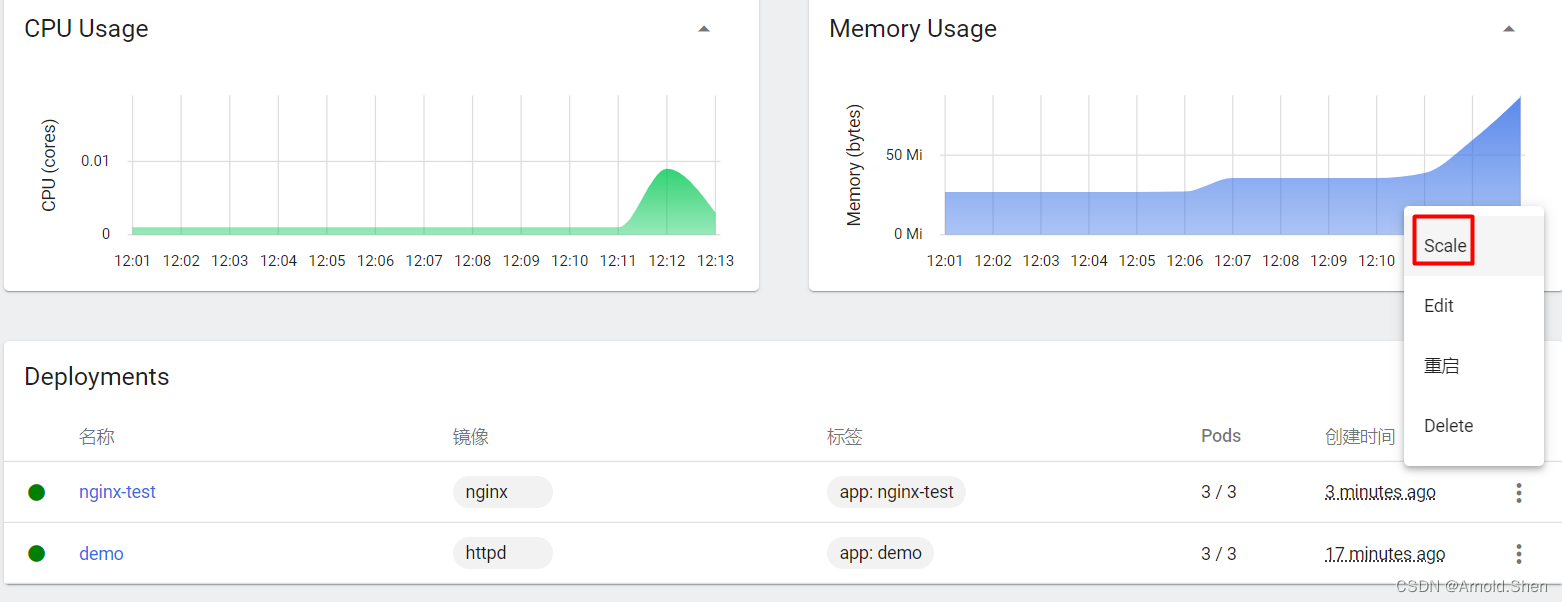

kubectl create deployment demo --image=httpd --port=80

kubectl expose deployment demo

kubectl get pod -n kube-system

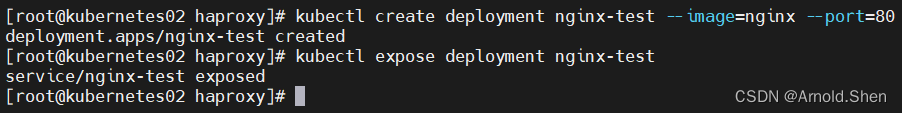

kubectl create deployment nginx-test --image=nginx --port=80