如何快速理解视频信息?通过Azure实现视频摘要生成_azure video analyzer

| 阿里云国内75折 回扣 微信号:monov8 |

| 阿里云国际,腾讯云国际,低至75折。AWS 93折 免费开户实名账号 代冲值 优惠多多 微信号:monov8 飞机:@monov6 |

前言

生活在一个信息大爆炸的时代每天都会接收到大量缤纷繁杂的信息包括各种图像、视频、语音等等的消息种类那么如何能够快速的筛选信息从中获得自己有用或者感兴趣的知识呢针对最复杂的视频这一种类我们可以通过使用Azure的认知服务通过视频提取音频、语音转文本、文本翻译、文本摘要生成等多项技术实现视频理解。

输入视频

“元宇宙”场景会是怎样扎克伯格演示“元宇宙”的社交场景

输出视频摘要

想象一下你戴上眼镜或耳机立刻就进入了你的家庭空间作为虚拟重建的实体家庭的一部分它有着只有虚拟才能实现的东西它有着令人难以置信的令人振奋的视角让你看到最美的东西。马克怎么了有一位艺术家在四处走动所以帮助人们找到隐藏AR作品和3D街头艺术。

一、Azure服务开通

最近CSDN开展了《0元试用微软 Azure人工智能认知服务精美礼品大放送》通过添加客服小姐姐申请企业试用的账号可以白嫖Azure 认知服务个人的话还得需要visa卡。

申请成功后可以免费体验Azure人工智能认知服务包括语音转文本、文本转语音、语音翻译、文本分析、文本翻译、语言理解等功能。

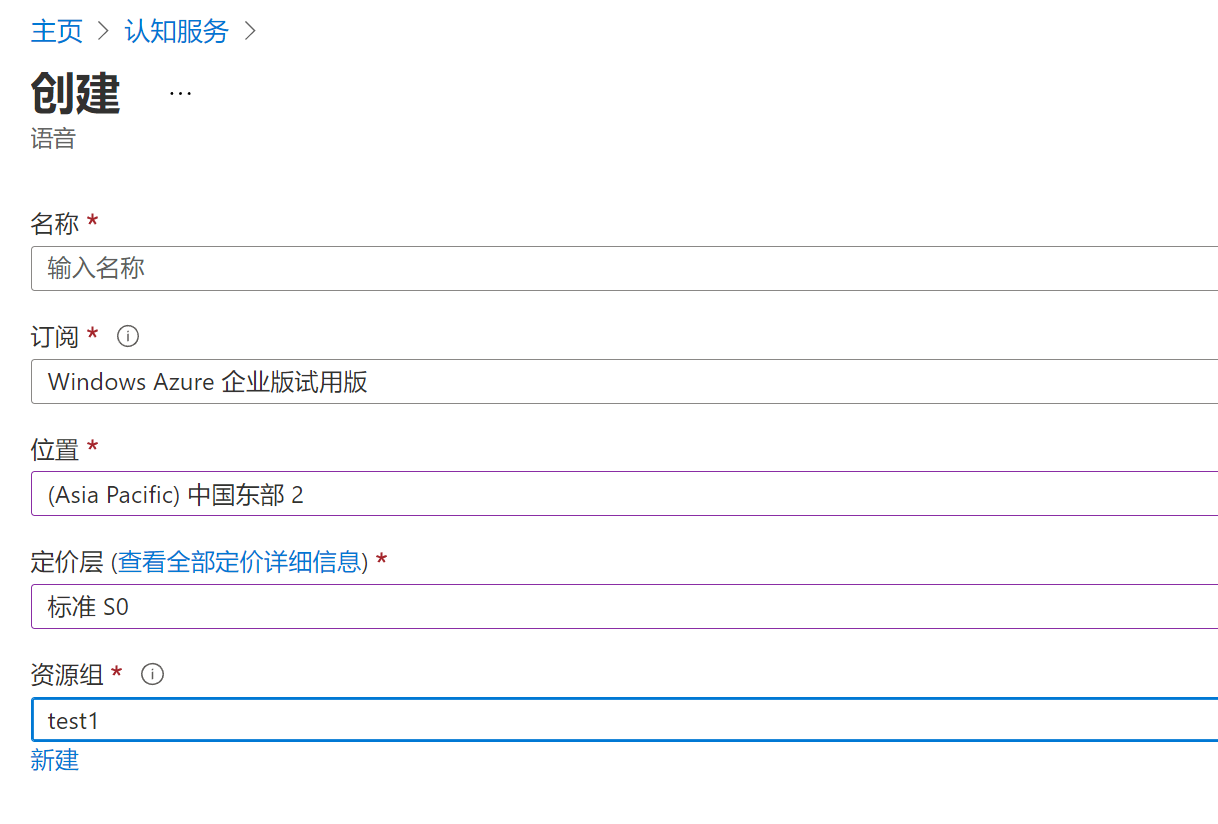

下面我们以语音转文本功能为例子看看如何试用Azure认知服务吧首先我们进入https://portal.azure.cn/并登录。

选择认知服务

选择对应的服务进行创建比如语音转文本则点击语音服务创建。

点击页面最下方的创建按钮就等待资源配置好。

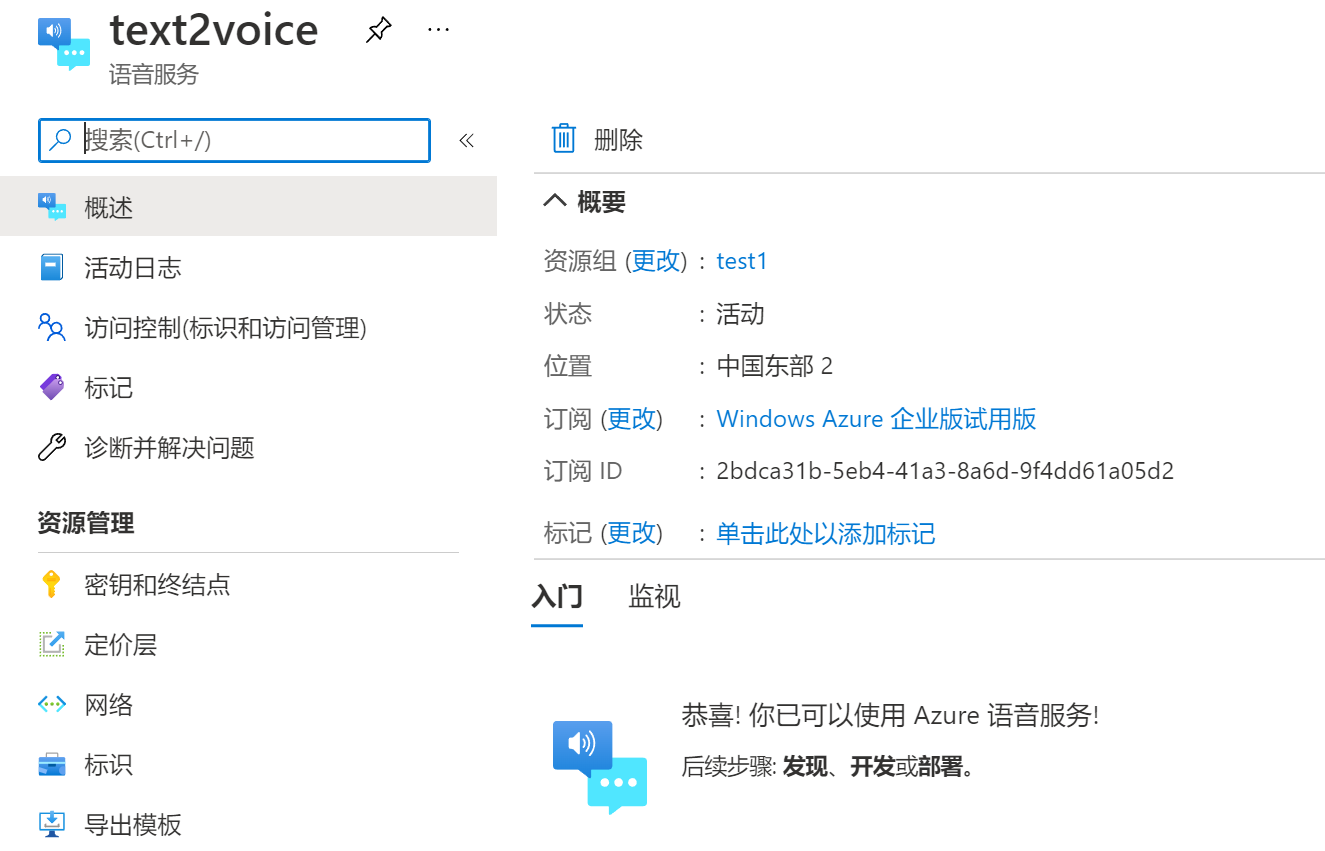

查看密钥和终结点。

查看密钥和终结点。

有两个密钥任选一个即可位置/区域也需要记录下来后面我们的程序就需要通过密钥和位置来调用。

之后下文涉及到的文本翻译、文本摘要生成等服务开通也按以上步骤进行获得密钥以及位置就可以了。

二、核心功能实现

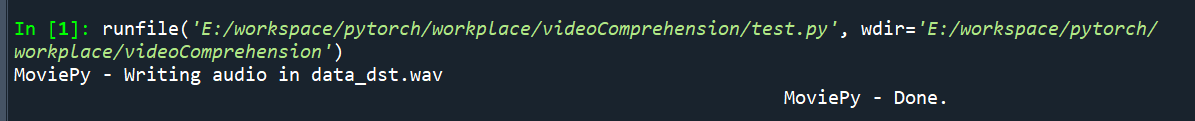

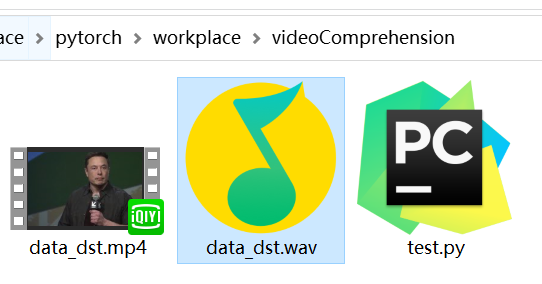

1.视频转音频

安装moviepy库

pip install moviepy提取音频

from moviepy.editor import AudioFileClip

my_audio_clip = AudioFileClip("data_dst.mp4")

my_audio_clip.write_audiofile("data_dst.wav")生成wav格式音频

2.语音转文本

参考Azure 认知服务文档语音转文本入门

首先安装和导入语音 SDK

pip install azure-cognitiveservices-speech

首先从文件识别语音转文本

import azure.cognitiveservices.speech as speechsdk

def from_file():

speech_config = speechsdk.SpeechConfig(subscription="<paste-your-speech-key-here>", region="<paste-your-speech-location/region-here>")

audio_input = speechsdk.AudioConfig(filename="your_file_name.wav")

speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_input)

result = speech_recognizer.recognize_once_async().get()

print(result.text)

from_file()

3.文本摘要生成

参考Azure 认知服务文档文本分析快速入门

安装 Python 后可使用以下命令安装客户端库

pip install azure-ai-textanalytics==5.2.0b1

可以使用文本分析来汇总大型文本区块。 创建一个名为 summarization_example() 的新函数该函数采用客户端作为参数然后调用 begin_analyze_actions() 函数。 结果将是一个循环操作将轮询该操作以获得结果。

from azure.ai.textanalytics import TextAnalyticsClient

from azure.core.credentials import AzureKeyCredential

key = ""

endpoint = ""

def authenticate_client():

ta_credential = AzureKeyCredential(key)

text_analytics_client = TextAnalyticsClient(

endpoint=endpoint,

credential=ta_credential)

return text_analytics_client

client = authenticate_client()

def sample_extractive_summarization(client):

from azure.core.credentials import AzureKeyCredential

from azure.ai.textanalytics import (

TextAnalyticsClient,

ExtractSummaryAction

)

document = [

"The extractive summarization feature in Text Analytics uses natural language processing techniques to locate key sentences in an unstructured text document. "

"These sentences collectively convey the main idea of the document. This feature is provided as an API for developers. "

"They can use it to build intelligent solutions based on the relevant information extracted to support various use cases. "

"In the public preview, extractive summarization supports several languages. It is based on pretrained multilingual transformer models, part of our quest for holistic representations. "

"It draws its strength from transfer learning across monolingual and harness the shared nature of languages to produce models of improved quality and efficiency. "

]

poller = client.begin_analyze_actions(

document,

actions=[

ExtractSummaryAction(MaxSentenceCount=4)

],

)

document_results = poller.result()

for result in document_results:

extract_summary_result = result[0] # first document, first result

if extract_summary_result.is_error:

print("...Is an error with code '{}' and message '{}'".format(

extract_summary_result.code, extract_summary_result.message

))

else:

print("Summary extracted: \n{}".format(

" ".join([sentence.text for sentence in extract_summary_result.sentences]))

)

sample_extractive_summarization(client)其中文档内容为

"The extractive summarization feature in Text Analytics uses natural language processing techniques to locate key sentences in an unstructured text document. "

"These sentences collectively convey the main idea of the document. This feature is provided as an API for developers. "

"They can use it to build intelligent solutions based on the relevant information extracted to support various use cases. "

"In the public preview, extractive summarization supports several languages. It is based on pretrained multilingual transformer models, part of our quest for holistic representations. "

"It draws its strength from transfer learning across monolingual and harness the shared nature of languages to produce models of improved quality and efficiency. "

“文本分析中的提取摘要功能使用自然语言处理技术定位非结构化文本文档中的关键句子。”

“这些句子共同传达了文档的主要思想。此功能作为API提供给开发人员。”

“他们可以使用它根据提取的相关信息构建智能解决方案以支持各种用例。”

“在公开预览中摘录摘要支持多种语言。它基于预训练的多语言转换器模型这是我们寻求整体表示的一部分。”

“它从跨单语的迁移学习中汲取力量并利用语言的共享性来制作质量和效率更高的模型。”

摘要结果为

The extractive summarization feature in Text Analytics uses natural language processing techniques to locate key sentences in an unstructured text document. This feature is provided as an API for developers. They can use it to build intelligent solutions based on the relevant information extracted to support various use cases.

文本分析中的提取摘要功能使用自然语言处理技术定位非结构化文本文档中的关键句子。此功能作为API提供给开发人员。他们可以使用它根据提取的相关信息构建智能解决方案以支持各种用例。

4.文本翻译

参考Azure 认知服务文档文本翻译快速入门

import requests, uuid, json

# Add your subscription key and endpoint

subscription_key = "YOUR_SUBSCRIPTION_KEY"

endpoint = "https://api.translator.azure.cn"

# Add your location, also known as region. The default is global.

# This is required if using a Cognitive Services resource.

location = "YOUR_RESOURCE_LOCATION"

path = '/translate'

constructed_url = endpoint + path

params = {

'api-version': '3.0',

'from': 'en',

'to': ['zh']

}

constructed_url = endpoint + path

headers = {

'Ocp-Apim-Subscription-Key': subscription_key,

'Ocp-Apim-Subscription-Region': location,

'Content-type': 'application/json',

'X-ClientTraceId': str(uuid.uuid4())

}

# You can pass more than one object in body.

body = [{

'text': ''The extractive summarization feature in Text Analytics uses natural language processing techniques to locate key sentences in an unstructured text document. This feature is provided as an API for developers. They can use it to build intelligent solutions based on the relevant information extracted to support various use cases.'

}]

request = requests.post(constructed_url, params=params, headers=headers, json=body)

response = request.json()

print(json.dumps(response, sort_keys=True, ensure_ascii=False, indent=4, separators=(',', ': ')))

原文

The extractive summarization feature in Text Analytics uses natural language processing techniques to locate key sentences in an unstructured text document. This feature is provided as an API for developers. They can use it to build intelligent solutions based on the relevant information extracted to support various use cases.

翻译结果

文本分析中的摘录摘要功能使用自然语言处理技术在非结构化文本文档中定位关键句子。此功能作为开发人员的 API 提供。他们可以使用它来构建基于提取的相关信息的智能解决方案以支持各种使用案例。

三、搭建框架

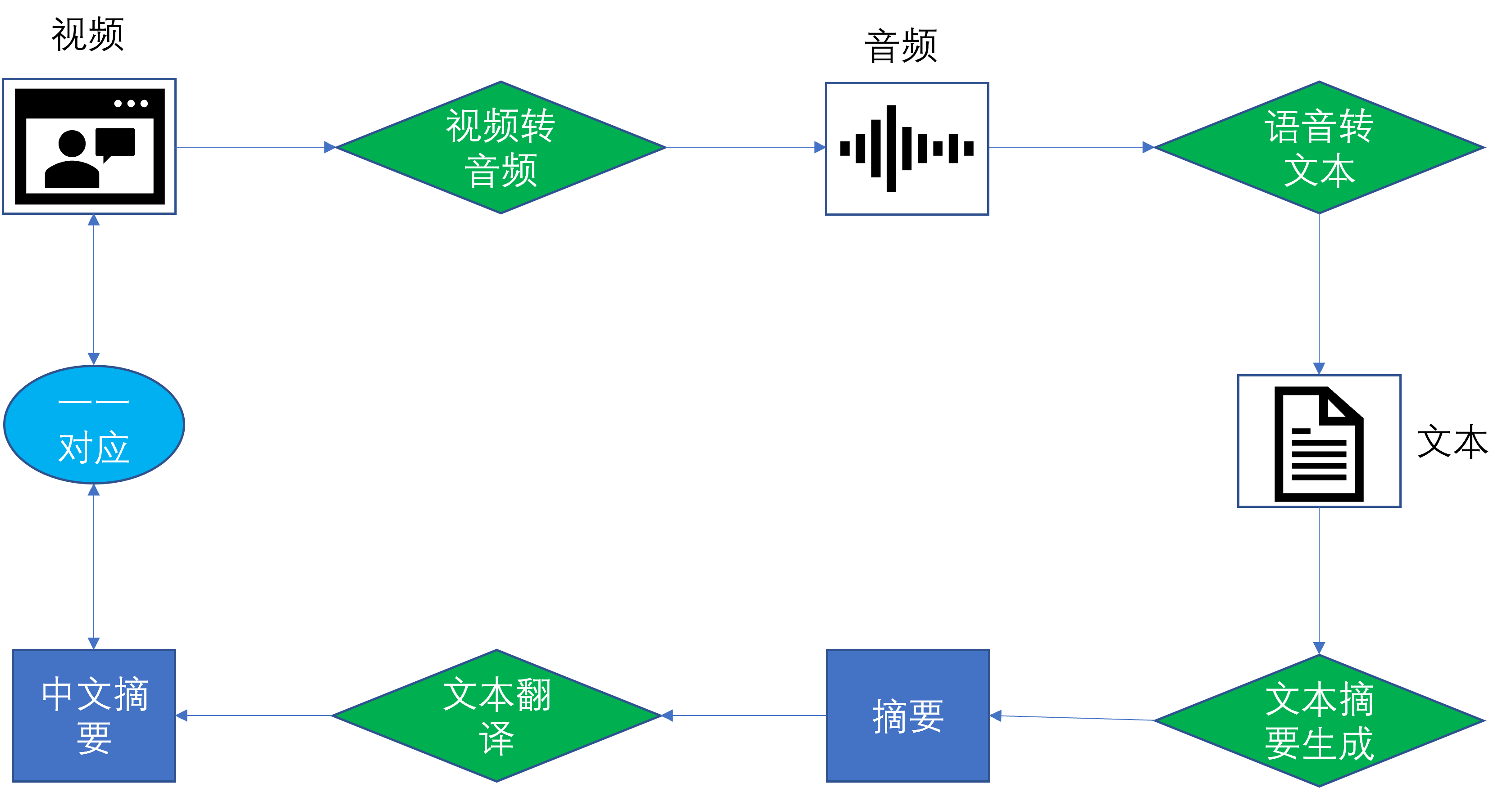

开发流程示意图

整体的流程如上图所示

将视频转成音频后输入到语音转文本服务中输出的文本信息输入到文本摘要生成服务中输入摘要信息再输入到文本翻译服务中翻译成中文最后就得到对应视频的中文摘要帮助快速理解视频信息。

代码实现

同样分成了四个模块

视频转音频 video2audio()

def video2audio(path):

my_audio_clip = AudioFileClip(path)

output_path=path[:-3]+"wav"

my_audio_clip.write_audiofile(output_path)

print("第一步视频转语音完成")

return output_path连续语音转文本 continuous_recognition()

def continuous_recognition(path):

audio_config = speechsdk.audio.AudioConfig(filename=path)

speech_config = speechsdk.SpeechConfig()

speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config, audio_config=audio_config)

done = False

def stop_cb(evt):

print('CLOSING on {}'.format(evt))

speech_recognizer.stop_continuous_recognition()

nonlocal done

done = True

text=[]

speech_recognizer.recognized.connect(lambda evt: text.append(evt.result.text))

speech_recognizer.session_started.connect(lambda evt: print('SESSION STARTED: {}'.format(evt['text'])))

speech_recognizer.session_stopped.connect(lambda evt: print('SESSION STOPPED {}'.format(evt)))

speech_recognizer.canceled.connect(lambda evt: print(summary))

speech_recognizer.session_stopped.connect(stop_cb)

speech_recognizer.canceled.connect(stop_cb)

speech_recognizer.start_continuous_recognition()

while not done:

time.sleep(.5)

print("第二步语音转文本完成")

print(text)

return text文本摘要生成 sample_extractive_summarization()

def sample_extractive_summarization(client,text):

from azure.core.credentials import AzureKeyCredential

from azure.ai.textanalytics import (

TextAnalyticsClient,

ExtractSummaryAction

)

text="".join(text)

document = [text]

poller = client.begin_analyze_actions(

document,

actions=[

ExtractSummaryAction(MaxSentenceCount=4)

],

)

document_results = poller.result()

for result in document_results:

extract_summary_result = result[0] # first document, first result

if extract_summary_result.is_error:

summary=("...Is an error with code '{}' and message '{}'".format(

extract_summary_result.code, extract_summary_result.message

))

else:

print("第三步文本摘要生成完成")

summary=("Summary extracted: \n{}".format(

" ".join([sentence.text for sentence in extract_summary_result.sentences]))

)

print(summary)

return summary文本翻译 translate()

def translate(text):

import requests, uuid, json

subscription_key =

endpoint =

location =

path = '/translate'

constructed_url = endpoint + path

params = {

'api-version': '3.0',

'to': ['zh']

}

constructed_url = endpoint + path

headers = {

'Ocp-Apim-Subscription-Key': subscription_key,

'Ocp-Apim-Subscription-Region': location,

'Content-type': 'application/json',

'X-ClientTraceId': str(uuid.uuid4())

}

# You can pass more than one object in body.

body = [{

'text':text

}]

request = requests.post(constructed_url, params=params, headers=headers, json=body)

response = request.json()

translate_text=response[0]['translations'][0]['text']

# translate_text=(json.dumps(response, sort_keys=True, ensure_ascii=False, indent=4, separators=(',', ': ')))

print('第四步:文本翻译完成')

print(translate_text)

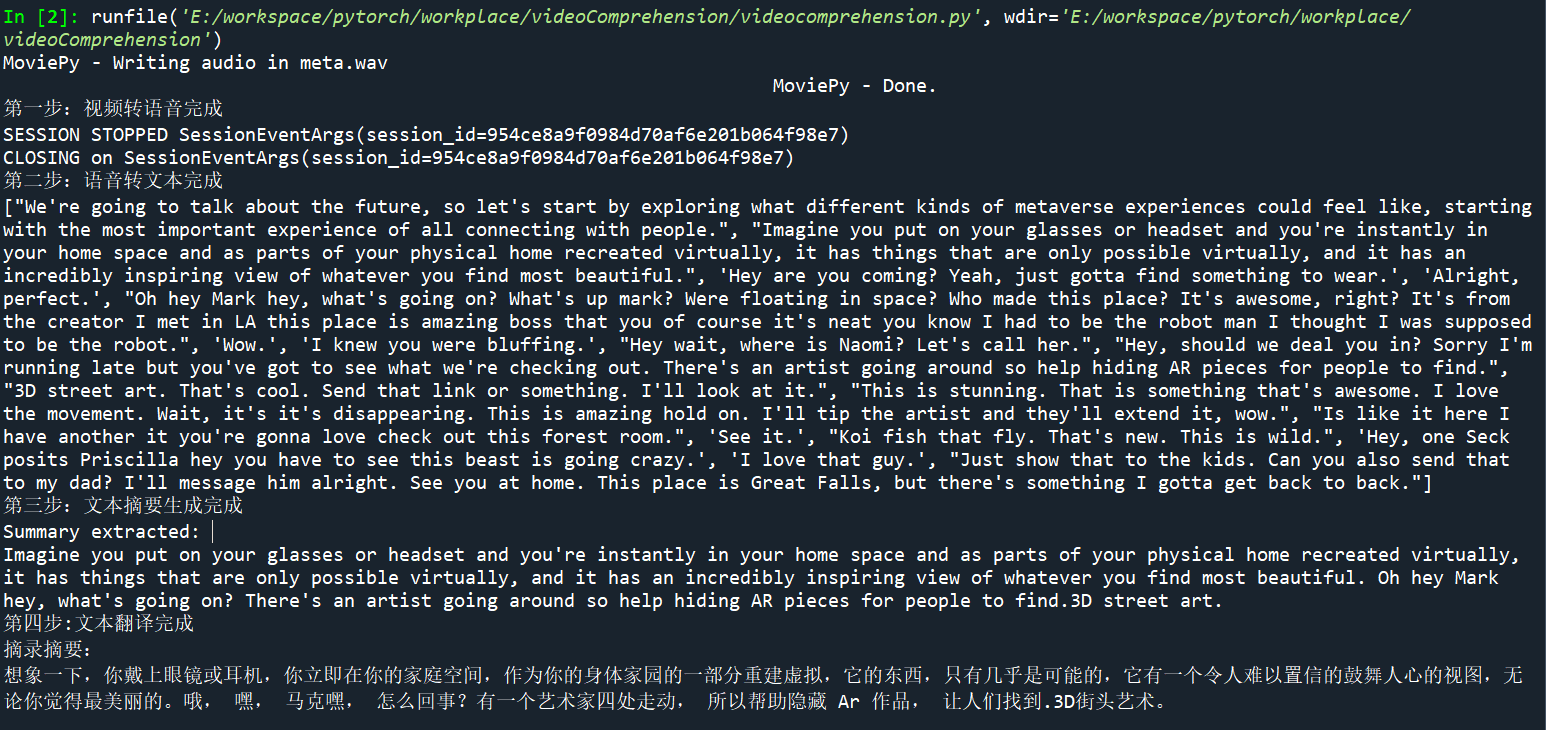

return translate_text最终输出结果

英文摘要结果

英文摘要结果

Imagine you put on your glasses or headset and you're instantly in your home space and as parts of your physical home recreated virtually, it has things that are only possible virtually, and it has an incredibly inspiring view of whatever you find most beautiful. Oh hey Mark hey, what's going on? There's an artist going around so help hiding AR pieces for people to find and 3D street art.

中文摘要结果

想象一下你戴上眼镜或耳机立刻就进入了你的家庭空间作为虚拟重建的实体家庭的一部分它有着只有虚拟才能实现的东西它有着令人难以置信的令人振奋的视角让你看到最美的东西。马克怎么了有一位艺术家在四处走动所以帮助人们找到隐藏AR作品和3D街头艺术。

四、总结

在试用过程中单项服务效果还行但是其中的摘要生成和文本翻译的准确度还是可以再次提高的。而且一开始使用语音转文本功能只能翻译十五秒所以之后选择了连续语音识别转文本才算是基本获取了该视频的所有语音。

总而来说通过这次Azure认知服务免费试用活动基本实现了预期设想的视频语义理解功能。

尤其是近两年随着短视频领域的火爆发展围绕短视频的业务场景应用也在增长工业界应用场景都对视频内容理解提出了迫切的落地需求。本篇实现的视频语义理解功能基本可以满足以上需求能够实现个性化推荐以及用户选择建议。