Building a Ceph Cluster on CentOS 7


1 Configure hosts

vim /etc/hosts

172.18.3.87 ceph1

172.18.3.88 ceph2

172.18.3.89 ceph3

2 Configure the ceph user

groupadd ceph -g 2020 && useradd -u 2020 -g 2020 ceph && echo "123456" | passwd --stdin ceph

echo "ceph ALL = (root) NOPASSWD:ALL" >> /etc/sudoers

3 Configure passwordless SSH login (run only on the ceph-deploy node)

su - ceph

ssh-keygen

ssh-copy-id ceph@ceph1

ssh-copy-id ceph@ceph2

ssh-copy-id ceph@ceph3

4 Configure time synchronization

crontab -e:

*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com

5 Installation

# Configure the yum repository

cat > /etc/yum.repos.d/ceph.repo<<'EOF'

[Ceph]

name=Ceph packages for $basearch

baseurl=https://mirror.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirror.tuna.tsinghua.edu.cn/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirror.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirror.tuna.tsinghua.edu.cn/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=https://mirror.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/SRPMS

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirror.tuna.tsinghua.edu.cn/ceph/keys/release.asc

priority=1

EOF
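The three stanzas above differ only in section name, description, and the last path component, so the file can also be generated. The following is a minimal sketch, assuming a hypothetical helper named `render_ceph_repo` (not part of Ceph) and the same TUNA mirror; the release directory is a parameter so that it can be kept consistent with the version installed later.

```shell
# Hypothetical helper (not part of Ceph): print a ceph.repo file for one release.
# $1 = release directory on the mirror, e.g. rpm-mimic
render_ceph_repo() (
  release="$1"
  mirror="https://mirror.tuna.tsinghua.edu.cn/ceph"
  # One stanza per call: section name, description, last path component.
  stanza() {
    printf '[%s]\nname=%s\nbaseurl=%s/%s/el7/%s\n' "$1" "$2" "$mirror" "$release" "$3"
    printf 'enabled=1\ngpgcheck=1\ntype=rpm-md\ngpgkey=%s/keys/release.asc\npriority=1\n\n' "$mirror"
  }
  # Single quotes keep $basearch literal so that yum expands it, not the shell.
  stanza Ceph        'Ceph packages for $basearch' '$basearch'
  stanza Ceph-noarch 'Ceph noarch packages'        noarch
  stanza ceph-source 'Ceph source packages'        SRPMS
)

# Preview only; redirect the output to /etc/yum.repos.d/ceph.repo as root to apply it.
render_ceph_repo rpm-mimic
```
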

yum clean all && yum makecache

# Install epel-release on all nodes

yum install -y epel-release python-pip

5.1 Install ceph-deploy on the admin node

# Install ceph-deploy as the admin user

sudo yum install -y ceph-deploy python-setuptools python2-subprocess32

5.2 Initialize the mon nodes from the admin node

mkdir ceph_cluster

cd ceph_cluster

ceph-deploy new ceph1 ceph2 ceph3

Edit ceph.conf (vim ceph.conf) and add the following settings:

public network = 172.18.3.0/24

cluster network = 172.18.3.0/24

osd pool default size = 3

osd pool default min size = 2

osd pool default pg num = 128

osd pool default pgp num = 128

osd pool default crush rule = 0

osd crush chooseleaf type = 1

max open files = 131072

ms bind ipv6 = false

[mon]

mon clock drift allowed = 10

mon clock drift warn backoff = 30

mon osd full ratio = .95

mon osd nearfull ratio = .85

mon osd down out interval = 600

mon osd report timeout = 300

mon allow pool delete = true

[osd]

osd recovery max active = 3

osd max backfills = 5

osd max scrubs = 2

osd mkfs type = xfs

osd mkfs options xfs = -f -i size=1024

osd mount options xfs = rw,noatime,inode64,logbsize=256k,delaylog

filestore max sync interval = 5

osd op threads = 2

Install the Ceph packages on the specified nodes

ceph-deploy install --no-adjust-repos ceph1 ceph2 ceph3

Deploy the initial monitors and gather the keys

ceph-deploy mon create-initial

Copy the configuration file and keys to every node in the cluster

ceph-deploy admin ceph1 ceph2 ceph3

Check the keyring files on each node

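ll /etc/ceph/

For security, the keyring files are owned by the root user and root group by default. If the ceph user also needs to run ceph commands, grant it access to the admin keyring: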

setfacl -m u:ceph:rw /etc/ceph/ceph.client.admin.keyring # run on all nodes

Test the ceph command

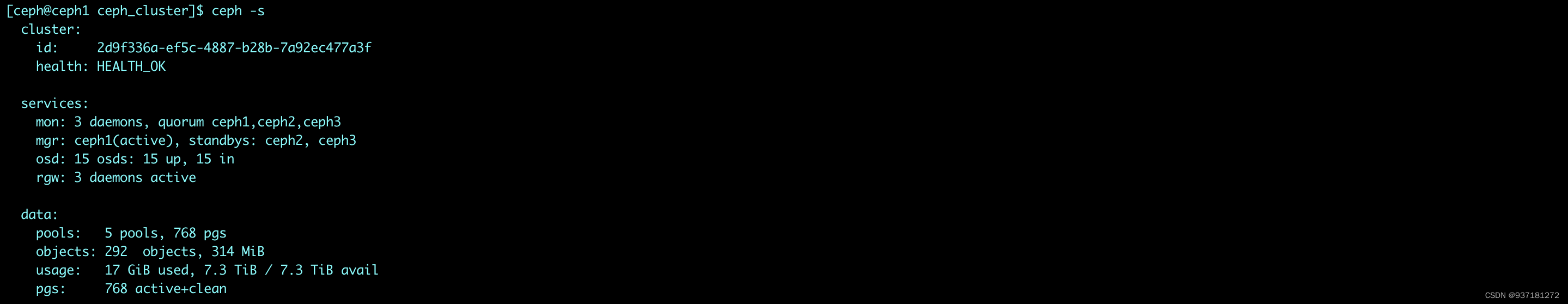

ceph -s

If the status reports "mon is allowing insecure global_id reclaim", disable insecure global_id reclaim:

ceph config set mon auth_allow_insecure_global_id_reclaim false

5.3 Install ceph-mgr from the admin node

ceph-deploy mgr create ceph1 ceph2 ceph3

5.4 Install OSDs

ceph-deploy install --release pacific ceph1 --repo-url https://mirrors.aliyun.com/ceph/rpm-luminous/el7/

ceph-deploy install --release pacific ceph2 --repo-url https://mirrors.aliyun.com/ceph/rpm-luminous/el7/

ceph-deploy install --release pacific ceph3 --repo-url https://mirrors.aliyun.com/ceph/rpm-luminous/el7/

ceph-deploy disk list ceph1

# Wipe the disks on ceph1

ceph-deploy disk zap ceph1 /dev/sdb

ceph-deploy disk zap ceph1 /dev/sdc

ceph-deploy disk zap ceph1 /dev/sdd

ceph-deploy disk zap ceph1 /dev/sde

ceph-deploy disk zap ceph1 /dev/sdf

# Wipe the disks on ceph2

ceph-deploy disk zap ceph2 /dev/sdb

ceph-deploy disk zap ceph2 /dev/sdc

ceph-deploy disk zap ceph2 /dev/sdd

ceph-deploy disk zap ceph2 /dev/sde

ceph-deploy disk zap ceph2 /dev/sdf

# Wipe the disks on ceph3

ceph-deploy disk zap ceph3 /dev/sdb

ceph-deploy disk zap ceph3 /dev/sdc

ceph-deploy disk zap ceph3 /dev/sdd

ceph-deploy disk zap ceph3 /dev/sde

ceph-deploy disk zap ceph3 /dev/sdf
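The fifteen zap commands above follow one pattern: every host, every data device. As a minimal sketch, assuming the same three hosts and the same five device names on each of them, a hypothetical dry-run helper `zap_commands` can print the list for review before anything is wiped.

```shell
# Hypothetical dry-run helper: print one "ceph-deploy disk zap" command
# per host/device pair. Nothing is executed here.
zap_commands() (
  hosts="ceph1 ceph2 ceph3"
  devices="sdb sdc sdd sde sdf"
  for host in $hosts; do
    for dev in $devices; do
      echo "ceph-deploy disk zap $host /dev/$dev"
    done
  done
)

# Review the list first. Pipe it to "sh -x" only once the device names are
# confirmed on every host, because zapping destroys all data on the device.
zap_commands
```

Printing instead of executing is a deliberate choice here: device names such as /dev/sdb are not guaranteed to be stable across hosts or reboots, so the output is worth comparing against `ceph-deploy disk list` for each node.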

# Add the OSDs

ceph-deploy osd create ceph1 --data /dev/sdb

ceph-deploy osd create ceph1 --data /dev/sdc

ceph-deploy osd create ceph1 --data /dev/sdd

ceph-deploy osd create ceph1 --data /dev/sde

ceph-deploy osd create ceph1 --data /dev/sdf

ceph-deploy osd create ceph2 --data /dev/sdb

ceph-deploy osd create ceph2 --data /dev/sdc

ceph-deploy osd create ceph2 --data /dev/sdd

ceph-deploy osd create ceph2 --data /dev/sde

ceph-deploy osd create ceph2 --data /dev/sdf

ceph-deploy osd create ceph3 --data /dev/sdb

ceph-deploy osd create ceph3 --data /dev/sdc

ceph-deploy osd create ceph3 --data /dev/sdd

ceph-deploy osd create ceph3 --data /dev/sde

ceph-deploy osd create ceph3 --data /dev/sdf
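Ceph gives each new OSD the lowest unused ID, so the order of the create commands determines which IDs land on which host. The sketch below works that arithmetic out under two assumptions: the cluster is fresh (no OSD IDs are in use yet) and the commands run in exactly the order listed above. The function name `expected_osd_ids` is hypothetical.

```shell
# Sketch: expected OSD IDs when the OSDs are created in the order listed above
# on a fresh cluster (assumption: no IDs are already taken).
expected_osd_ids() (
  id=0
  for host in ceph1 ceph2 ceph3; do
    for dev in sdb sdc sdd sde sdf; do
      echo "osd.$id $host /dev/$dev"
      id=$((id + 1))
    done
  done
)

expected_osd_ids
```

Under those assumptions ceph1 holds osd.0 to osd.4, ceph2 holds osd.5 to osd.9, and ceph3 holds osd.10 to osd.14. The real placement is worth confirming with `ceph osd tree` before relying on the numbers.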

# Enable the OSD services at boot (run on each of the three servers)

# ceph1

systemctl enable ceph-osd@0.service

systemctl enable ceph-osd@1.service

systemctl enable ceph-osd@2.service

systemctl enable ceph-osd@3.service

systemctl enable ceph-osd@4.service

# ceph2

systemctl enable ceph-osd@5.service

systemctl enable ceph-osd@6.service

systemctl enable ceph-osd@7.service

systemctl enable ceph-osd@8.service

systemctl enable ceph-osd@9.service

# ceph3

systemctl enable ceph-osd@10.service

systemctl enable ceph-osd@11.service

systemctl enable ceph-osd@12.service

systemctl enable ceph-osd@13.service

systemctl enable ceph-osd@14.service
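The per-host ID lists above have to match what each node really runs. As an alternative sketch, the IDs can be discovered on the node itself, assuming the OSD data directories are named ceph-<id> under /var/lib/ceph/osd (the usual location, but an assumption here). The helper name `enable_local_osds` is hypothetical, and it only prints the commands.

```shell
# Sketch: print an enable command for every OSD data directory found on this node.
# Assumption: OSD data directories are named ceph-<id> under the given root
# (default /var/lib/ceph/osd).
enable_local_osds() (
  root="${1:-/var/lib/ceph/osd}"
  for dir in "$root"/ceph-*; do
    [ -d "$dir" ] || continue   # skips the unexpanded pattern when nothing matches
    id="${dir##*/ceph-}"        # strip everything up to and including ".../ceph-"
    echo "systemctl enable ceph-osd@$id.service"
  done
)

# Prints nothing on a node that hosts no OSDs.
enable_local_osds
```
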

Removing OSDs:

# ceph1

ceph osd out 0

ceph osd out 1

ceph osd out 2

ceph osd out 3

ceph osd out 4

ceph osd out 5

ceph osd out 6

ceph osd out 7

ceph osd out 8

ceph osd out 9

systemctl stop ceph-osd@0

systemctl stop ceph-osd@1

systemctl stop ceph-osd@2

systemctl stop ceph-osd@3

systemctl stop ceph-osd@4

systemctl stop ceph-osd@5

systemctl stop ceph-osd@6

systemctl stop ceph-osd@7

systemctl stop ceph-osd@8

systemctl stop ceph-osd@9

ceph osd purge 0 --yes-i-really-mean-it

ceph osd purge 1 --yes-i-really-mean-it

ceph osd purge 2 --yes-i-really-mean-it

ceph osd purge 3 --yes-i-really-mean-it

ceph osd purge 4 --yes-i-really-mean-it

ceph osd purge 5 --yes-i-really-mean-it

ceph osd purge 6 --yes-i-really-mean-it

ceph osd purge 7 --yes-i-really-mean-it

ceph osd purge 8 --yes-i-really-mean-it

ceph osd purge 9 --yes-i-really-mean-it

# ceph2

ceph osd out 10

ceph osd out 11

ceph osd out 12

ceph osd out 13

ceph osd out 14

ceph osd out 15

ceph osd out 16

ceph osd out 17

ceph osd out 18

ceph osd out 19

systemctl stop ceph-osd@10

systemctl stop ceph-osd@11

systemctl stop ceph-osd@12

systemctl stop ceph-osd@13

systemctl stop ceph-osd@14

systemctl stop ceph-osd@15

systemctl stop ceph-osd@16

systemctl stop ceph-osd@17

systemctl stop ceph-osd@18

systemctl stop ceph-osd@19

ceph osd purge 10 --yes-i-really-mean-it

ceph osd purge 11 --yes-i-really-mean-it

ceph osd purge 12 --yes-i-really-mean-it

ceph osd purge 13 --yes-i-really-mean-it

ceph osd purge 14 --yes-i-really-mean-it

ceph osd purge 15 --yes-i-really-mean-it

ceph osd purge 16 --yes-i-really-mean-it

ceph osd purge 17 --yes-i-really-mean-it

ceph osd purge 18 --yes-i-really-mean-it

ceph osd purge 19 --yes-i-really-mean-it

# ceph3

ceph osd out 20

ceph osd out 21

ceph osd out 22

ceph osd out 23

ceph osd out 24

ceph osd out 25

ceph osd out 26

ceph osd out 27

ceph osd out 28

ceph osd out 29

systemctl stop ceph-osd@20

systemctl stop ceph-osd@21

systemctl stop ceph-osd@22

systemctl stop ceph-osd@23

systemctl stop ceph-osd@24

systemctl stop ceph-osd@25

systemctl stop ceph-osd@26

systemctl stop ceph-osd@27

systemctl stop ceph-osd@28

systemctl stop ceph-osd@29

ceph osd purge 20 --yes-i-really-mean-it

ceph osd purge 21 --yes-i-really-mean-it

ceph osd purge 22 --yes-i-really-mean-it

ceph osd purge 23 --yes-i-really-mean-it

ceph osd purge 24 --yes-i-really-mean-it

ceph osd purge 25 --yes-i-really-mean-it

ceph osd purge 26 --yes-i-really-mean-it

ceph osd purge 27 --yes-i-really-mean-it

ceph osd purge 28 --yes-i-really-mean-it

ceph osd purge 29 --yes-i-really-mean-it
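Each OSD above goes through the same three steps: mark it out, stop its daemon, purge it. A minimal sketch of that sequence for a contiguous ID range follows; the helper name `osd_removal_commands` is hypothetical, and it groups the three steps per OSD rather than running all of one step first as the listing above does.

```shell
# Sketch: print the out / stop / purge sequence for a range of OSD IDs.
# $1 = first ID, $2 = last ID (inclusive). Nothing is executed here.
osd_removal_commands() (
  id="$1"
  last="$2"
  while [ "$id" -le "$last" ]; do
    echo "ceph osd out $id"
    echo "systemctl stop ceph-osd@$id"
    echo "ceph osd purge $id --yes-i-really-mean-it"
    id=$((id + 1))
  done
)

# Example: the commands for OSDs 0 through 4.
osd_removal_commands 0 4
```

The `systemctl stop` lines only take effect on the host that actually runs that OSD daemon, so the printed list is meant to be reviewed and run per node, not piped blindly into a shell on the admin node.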

# Enable the OSD services at boot (run on each of the three servers)

# ceph1

systemctl enable ceph-osd@0

systemctl enable ceph-osd@1

systemctl enable ceph-osd@2

systemctl enable ceph-osd@3

systemctl enable ceph-osd@4

# ceph2

systemctl enable ceph-osd@5

systemctl enable ceph-osd@6

systemctl enable ceph-osd@7

systemctl enable ceph-osd@8

systemctl enable ceph-osd@9

# ceph3

systemctl enable ceph-osd@10

systemctl enable ceph-osd@11

systemctl enable ceph-osd@12

systemctl enable ceph-osd@13

systemctl enable ceph-osd@14

5.5 Install rgw

ceph-deploy --overwrite-conf rgw create ceph1 ceph2 ceph3

5.6 Verify the Ceph cluster

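One way to verify the cluster is to check the health summary and then inspect the OSD layout. The following is a minimal sketch, assuming the ceph CLI and a readable admin keyring on the node where it runs; the function name `cluster_is_healthy` is hypothetical.

```shell
# Sketch: succeed only when "ceph health" reports HEALTH_OK.
# Assumes the ceph CLI and a readable admin keyring on this node.
cluster_is_healthy() {
  status="$(ceph health 2>/dev/null)" || return 1
  case "$status" in
    HEALTH_OK*) return 0 ;;
    *)          echo "cluster not healthy: $status" >&2; return 1 ;;
  esac
}

# Typical manual checks alongside it:
#   ceph -s          # overall status, mon quorum, OSD and PG summary
#   ceph osd tree    # which OSD IDs sit on which host, and whether they are up/in
#   ceph df          # raw and per-pool capacity
```

A call such as `cluster_is_healthy && echo "cluster OK"` fits at the end of a deployment script; a HEALTH_WARN result is printed to stderr so the reason stays visible.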