[hadoop] 2003: The HDFS Java API

I. Download Eclipse

http://www.eclipse.org/downloads/?osType=linux

II. Install Eclipse on Linux

1. Upload Eclipse

Use Xshell to upload the eclipse-jee-luna-SR1a-linux-gtk.tar.gz file to the target directory.

2. Extract eclipse-jee-luna-SR1a-linux-gtk.tar.gz

tar -zxvf eclipse-jee-luna-SR1a-linux-gtk.tar.gz -C app/

III. Working with HDFS from the command line

1.0 View help

hadoop fs -help <cmd>

1.1 Upload a file

hadoop fs -put <local Linux file> <HDFS path>

1.2 View a file's contents

hadoop fs -cat <HDFS path>

1.3 List files

hadoop fs -ls /

1.4 Download a file

hadoop fs -get <HDFS path> <local Linux file>
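`hadoop fs -ls` prints each entry's permissions in Unix `rwx` notation, for example `-rw-r--r--`. The sketch below shows how those nine characters map to an octal mode such as 0644. It is plain Java and uses a hypothetical `PermissionString` class, not Hadoop's `FsPermission`. The mode is read as three 3-bit groups (user, group, other), and each group gives read, write and execute in that order.

```java
public class PermissionString {
    // Symbols for read, write and execute, in the order ls prints them.
    private static final char[] SYMBOLS = {'r', 'w', 'x'};

    /** Formats the low nine bits of a mode such as 0644 as "rw-r--r--". */
    static String format(int mode) {
        StringBuilder sb = new StringBuilder(9);
        // Shifts 6, 3, 0 select the user, group and other triplets.
        for (int shift = 6; shift >= 0; shift -= 3) {
            int bits = (mode >> shift) & 7;
            for (int i = 0; i < 3; i++) {
                // 4 = read, 2 = write, 1 = execute
                boolean set = (bits & (4 >> i)) != 0;
                sb.append(set ? SYMBOLS[i] : '-');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // ls puts "-" in front of a file and "d" in front of a directory.
        System.out.println("-" + format(0644)); // -rw-r--r--
        System.out.println("d" + format(0755)); // drwxr-xr-x
    }
}
```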

IV. Developing HDFS programs in Eclipse

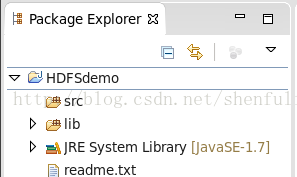

1. Create the HDFSDemo project

2. Create a lib folder in the HDFSDemo project

3. Copy the Hadoop JARs into lib from the command line

[hadoop@cloud01 hadoop]$ pwd

/home/hadoop/app/hadoop-2.4.1/share/hadoop

[hadoop@cloud01 hadoop]$ ls

common hdfs httpfs mapreduce tools yarn

Taking the common directory as an example:

[hadoop@cloud01 common]$ cd /home/hadoop/app/hadoop-2.4.1/share/hadoop/common

[hadoop@cloud01 common]$ cp hadoop-* /home/hadoop/workspace/HDFSdemo/lib/

[hadoop@cloud01 common]$ cp lib/* /home/hadoop/workspace/HDFSdemo/lib/

Copy the JARs from the other directories in the same way.
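The copy above has to be repeated for every module directory. The following Java sketch does the same work in one pass. It is a hypothetical helper named `CollectHadoopJars`, not part of Hadoop. The module list (common, hdfs, mapreduce, yarn) is an assumption, so adjust it to the directories you need. For each module, it copies the regular files matching `hadoop-*` and every regular file in that module's `lib/` into the project's lib directory. Like `cp`, it overwrites a JAR that several modules ship.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

public class CollectHadoopJars {

    /**
     * For each module under shareHadoop, copies the files matching hadoop-* (like "cp hadoop-* lib/")
     * and every file in the module's lib subdirectory into targetLib.
     * Returns the distinct destination files.
     */
    static Set<Path> collect(Path shareHadoop, List<String> modules, Path targetLib) throws IOException {
        Files.createDirectories(targetLib);
        Set<Path> copied = new LinkedHashSet<>();
        for (String module : modules) {
            Path moduleDir = shareHadoop.resolve(module);
            copyMatching(moduleDir, "hadoop-*", targetLib, copied);
            Path libDir = moduleDir.resolve("lib");
            if (Files.isDirectory(libDir)) {
                copyMatching(libDir, "*", targetLib, copied);
            }
        }
        return copied;
    }

    private static void copyMatching(Path dir, String glob, Path targetLib, Set<Path> copied)
            throws IOException {
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir, glob)) {
            for (Path source : entries) {
                if (!Files.isRegularFile(source)) {
                    continue; // plain cp (without -r) skips directories too
                }
                Path dest = targetLib.resolve(source.getFileName());
                // A JAR shipped by several modules overwrites the earlier copy, as cp does.
                Files.copy(source, dest, StandardCopyOption.REPLACE_EXISTING);
                copied.add(dest);
            }
        }
    }

    public static void main(String[] args) throws IOException {
        Path share = Paths.get("/home/hadoop/app/hadoop-2.4.1/share/hadoop");
        Path lib = Paths.get("/home/hadoop/workspace/HDFSdemo/lib");
        // Assumed module list; httpfs and tools are left out here.
        Set<Path> copied = collect(share, Arrays.asList("common", "hdfs", "mapreduce", "yarn"), lib);
        System.out.println("Copied " + copied.size() + " files into " + lib);
    }
}
```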

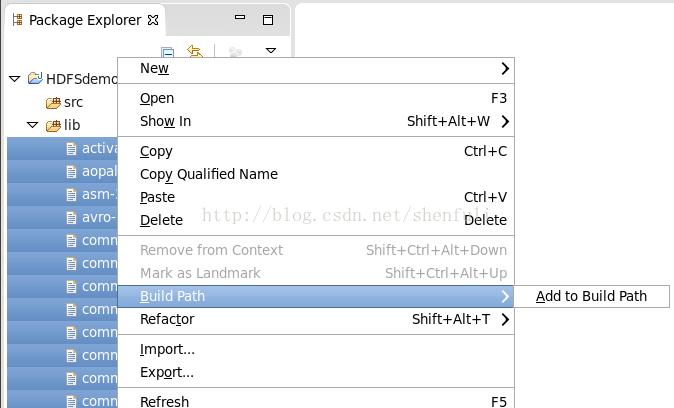

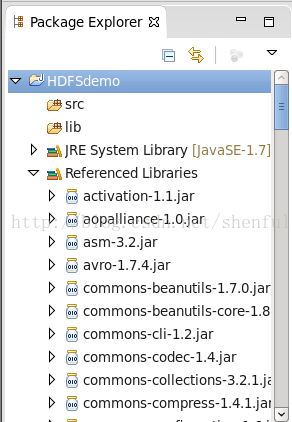

4. Select the JARs in the lib directory and add them to the project's build path.

5. Use Eclipse to upload and download files and to list HDFS files

```java
package hdfs;

import java.io.File;
import java.io.FileInputStream;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;
import java.text.SimpleDateFormat;
import java.util.Date;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.permission.FsPermission;
import org.apache.hadoop.io.IOUtils;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

/**
 * @function: operate on HDFS from Java
 * @author shenfl
 * @date 2015/2/21
 */
public class HDFSDemo {

    private static final String HDFS_PATH = "hdfs://192.168.2.31:9000";

    private FileSystem fileSystem = null;

    @Before
    public void init() throws Exception {
        /*
         * FileSystem.get(URI, Configuration), from the Hadoop Javadoc:
         * Returns the FileSystem for this URI's scheme and authority. The
         * scheme of the URI determines a configuration property name,
         * <tt>fs.<i>scheme</i>.class</tt> whose value names the FileSystem
         * class. The entire URI is passed to the FileSystem instance's
         * initialize method.
         */
        fileSystem = FileSystem.get(new URI(HDFS_PATH), new Configuration());
    }

    @After
    public void close() throws Exception {
        // Release the client's connection after each test.
        if (fileSystem != null) {
            fileSystem.close();
        }
    }

    /**
     * @eg: the equivalent shell command
     * [hadoop@cloud01 ~]$ hadoop fs -put
     * -put: Not enough arguments: expected 1 but got 0
     * Usage: hadoop fs [generic options] -put [-f] [-p] <localsrc> ... <dst>
     *
     * hadoop fs -put -f readme.txt hdfs://cloud01:9000/
     *
     * @description: upload a file from localsrc to HDFS
     * @author shenfl
     * @Date 2015-2-20
     */
    @Test
    public void testPut() throws Exception {
        int buffSize = 1024 * 4;
        // FileSystem.create(Path f, boolean overwrite) creates an FSDataOutputStream at f.
        // If the file already exists it is overwritten when overwrite is true,
        // otherwise an exception is thrown.
        boolean overwrite = true;
        try (InputStream in = new FileInputStream(
                     new File("/home/hadoop/workspace/HDFSdemo/src/hdfs/readme2.txt"));
             OutputStream out = fileSystem.create(new Path(HDFS_PATH + "/readme2.txt"), overwrite)) {
            // IOUtils.copyBytes(in, out, buffSize, close) copies in to out through a
            // buffSize-byte buffer. close is false because try-with-resources closes both streams.
            IOUtils.copyBytes(in, out, buffSize, false);
        }
    }

    /**
     * @eg: the equivalent shell command
     * [hadoop@cloud01 ~]$ hadoop fs -get
     * -get: Not enough arguments: expected 1 but got 0
     * Usage: hadoop fs [generic options] -get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>
     *
     * [hadoop@cloud01 ~]$ hadoop fs -get hdfs://cloud01:9000/readme.txt readme1.txt
     *
     * @description: read a file from HDFS (this test prints its contents to the console)
     */
    @Test
    public void testGet() throws Exception {
        int buffSize = 1024 * 4;
        // FileSystem.open(Path f) opens an FSDataInputStream at f.
        // HDFS_PATH is used so the path's authority matches the FileSystem from init().
        try (InputStream in = fileSystem.open(new Path(HDFS_PATH + "/readme2.txt"))) {
            // close must be false here: true would also close System.out.
            IOUtils.copyBytes(in, System.out, buffSize, false);
        }
    }

    /**
     * @eg: hadoop cmd
     * [hadoop@cloud01 ~]$ hadoop fs -ls /
     * Found 2 items
     * -rw-r--r--   1 hadoop supergroup   41 2015-02-20 07:05 /readme.txt
     * -rw-r--r--   3 hadoop supergroup   16 2015-02-21 04:18 /readme2.txt
     *
     * Output of this test:
     * rw-r--r--  File  1  hadoop  supergroup  2015-02-20 07:05:32  hdfs://192.168.2.31:9000/readme.txt
     * rw-r--r--  File  3  hadoop  supergroup  2015-02-21 04:18:14  hdfs://192.168.2.31:9000/readme2.txt
     */
    @Test
    public void testViewHdfsFiles() throws Exception {
        FileStatus[] listStatus = fileSystem.listStatus(new Path("/"));
        StringBuilder sb = new StringBuilder();
        for (FileStatus fileStatus : listStatus) {
            FsPermission permission = fileStatus.getPermission();
            String type = fileStatus.isDirectory() ? "Directory" : "File";
            short replication = fileStatus.getReplication();
            String owner = fileStatus.getOwner();
            String group = fileStatus.getGroup();
            String modifyDate = secondTranToDate(fileStatus.getModificationTime());
            String path = fileStatus.getPath().toString();
            // One tab-separated line per entry, in the column order shown above.
            sb.append(permission).append('\t')
              .append(type).append('\t')
              .append(replication).append('\t')
              .append(owner).append('\t')
              .append(group).append('\t')
              .append(modifyDate).append('\t')
              .append(path).append('\n');
        }
        System.out.println(sb);
    }

    /**
     * Formats a modification time for display.
     *
     * @param millis milliseconds since the epoch, as returned by FileStatus.getModificationTime()
     * @return the time as "yyyy-MM-dd HH:mm:ss" on a 24-hour clock
     */
    private String secondTranToDate(long millis) {
        // HH is the 0-23 hour; hh (1-12) would print 4 PM and 4 AM the same way.
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        return sdf.format(new Date(millis));
    }
}
```
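The Javadoc quoted in `init()` says that the URI's scheme selects the FileSystem class through a configuration property. The plain-Java sketch below shows only the first step: splitting `HDFS_PATH` into a scheme and an authority with `java.net.URI`. In the Hadoop 2.x sources, the property actually consulted is `fs.<scheme>.impl` (for example `fs.hdfs.impl`). When that property is unset, Hadoop falls back to implementations registered through `ServiceLoader`, so the Javadoc's `fs.<scheme>.class` wording appears to be outdated. `FileSystem.get` also caches instances per scheme, authority and user. `FsSchemeKey` is a hypothetical helper that loads no FileSystem.

```java
import java.net.URI;

public class FsSchemeKey {
    /** Builds the configuration key Hadoop 2.x reads for a URI's scheme, e.g. "fs.hdfs.impl". */
    static String implKey(URI uri) {
        return "fs." + uri.getScheme() + ".impl";
    }

    public static void main(String[] args) {
        URI uri = URI.create("hdfs://192.168.2.31:9000");
        System.out.println(uri.getScheme());    // hdfs
        System.out.println(uri.getAuthority()); // 192.168.2.31:9000
        System.out.println(implKey(uri));       // fs.hdfs.impl
    }
}
```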
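`IOUtils.copyBytes(in, out, buffSize, close)` copies through a fixed-size buffer. When `close` is true, it also closes both streams afterwards. The sketch below is a plain-Java approximation of that pattern, written as a hypothetical `BufferedCopy` class rather than Hadoop's own code. It shows why the flag matters. If the destination is `System.out`, passing true would close the console stream, so nothing printed afterwards would appear. Pass false there and close only the input stream.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

public class BufferedCopy {
    /**
     * Copies everything from in to out through a buffer of buffSize bytes.
     * When close is true, both streams are closed afterwards, even if the copy fails.
     * Returns the number of bytes copied.
     */
    static long copy(InputStream in, OutputStream out, int buffSize, boolean close) throws IOException {
        byte[] buf = new byte[buffSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buf)) != -1) { // read() returns -1 at end of stream
                out.write(buf, 0, n);
                total += n;
            }
            out.flush();
        } finally {
            if (close) {
                try {
                    out.close();
                } finally {
                    in.close();
                }
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        InputStream in = new ByteArrayInputStream("hello hdfs\n".getBytes(StandardCharsets.UTF_8));
        // Console destination: close = false keeps System.out usable.
        long n = copy(in, System.out, 1024 * 4, false);
        in.close();
        System.out.println("copied " + n + " bytes"); // copied 11 bytes
    }
}
```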
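`FileStatus.getModificationTime()` returns milliseconds since the epoch, which `new Date(long)` expects. The format pattern needs care: `hh` is the 1-12 hour, while `HH` is the 0-23 hour. The sketch below uses a hypothetical `ModTimeFormat` helper and pins the time zone to UTC so that the result is reproducible. It shows that 16:05 becomes `04:05` under `hh`, which makes an afternoon timestamp look like a morning one.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

public class ModTimeFormat {
    /** Formats epoch milliseconds as "yyyy-MM-dd HH:mm:ss" (24-hour clock) in the given zone. */
    static String format(long epochMillis, TimeZone zone) {
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // HH = 0-23
        sdf.setTimeZone(zone);
        return sdf.format(new Date(epochMillis));
    }

    public static void main(String[] args) {
        TimeZone utc = TimeZone.getTimeZone("UTC");
        long millis = 1424448332000L;                  // 2015-02-20 16:05:32 UTC
        System.out.println(format(millis, utc));       // 2015-02-20 16:05:32
        SimpleDateFormat twelveHour = new SimpleDateFormat("yyyy-MM-dd hh:mm:ss");
        twelveHour.setTimeZone(utc);
        System.out.println(twelveHour.format(new Date(millis))); // 2015-02-20 04:05:32 (AM/PM lost)
    }
}
```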
